Preface: Why Learn Web Scraping?

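In today's era of big data, collecting data from the web (web scraping, or "crawling") has become an important skill in data analysis and artificial intelligence. Scraping helps you gather the information you need efficiently, whether for market research, competitor analysis or academic work. This article walks Python beginners step by step through the core techniques of web scraping.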

1. Basic Preparation

1.1 Setting Up the Environment

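```bash
# Create a virtual environment (recommended)
python -m venv spider_env
source spider_env/bin/activate    # Linux/macOS
spider_env\Scripts\activate       # Windows

# Install the required libraries
pip install requests beautifulsoup4 lxml pandas

# Optional: more powerful parsing and browser-automation libraries
pip install parsel selenium playwright
playwright install chromium       # Playwright also needs its browser binaries
```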
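You can confirm that the installation worked with a short check script. The sketch below relies only on the standard library's `importlib.metadata` (Python 3.8+). The package names are the pip distribution names from the commands above, and `report_versions` is a hypothetical helper name. Run it inside the activated virtual environment.

```python
from importlib.metadata import PackageNotFoundError, version

# pip distribution names (BeautifulSoup is published as "beautifulsoup4")
PACKAGES = ["requests", "beautifulsoup4", "lxml", "pandas"]

def report_versions(packages=PACKAGES):
    """Map each package name to its installed version, or None if it is missing."""
    result = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result

if __name__ == "__main__":
    for name, ver in report_versions().items():
        print(f"{name:15} {ver or 'NOT INSTALLED'}")
```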

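1.2 Core Scraping Libraries

- requests: sends HTTP requests
- BeautifulSoup: parses HTML/XML
- lxml: a fast parser (BeautifulSoup can use it as its parsing backend)
- pandas: data processing and storage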

2. Your First Scraper: Fetching a Web Page

2.1 A Simple GET Request

```python
import requests
from bs4 import BeautifulSoup

def get_webpage(url):
    """Fetch a page and return its HTML text, or None on failure."""
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36'
    }
    try:
        response = requests.get(url, headers=headers, timeout=10)
        response.raise_for_status()  # raise HTTPError for 4xx/5xx responses
        response.encoding = response.apparent_encoding  # guess the charset from the body
        return response.text
    except requests.RequestException as e:
        print(f"Request failed: {e}")
        return None

# Test: fetch the Douban Movie Top 250 page
url = "https://movie.douban.com/top250"
html = get_webpage(url)
if html:  # html is None when the request failed
    print(f"Fetched {len(html)} characters")
```

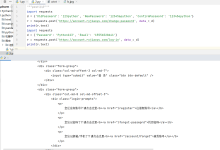
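Before sending anything to a real server, it can help to see exactly what `requests` will send. The sketch below builds a request without sending it, using `requests.Request(...).prepare()`, and also shows how `params` becomes a query string. Two points are assumptions based on how Douban's list appears to paginate, so check them in your browser: the `start` parameter, and 25 movies per page. `build_request` is a hypothetical helper name.

```python
import requests

HEADERS = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36'
}

def build_request(url, start=0):
    """Prepare (but do not send) a GET request; `start` is an assumed paging parameter."""
    req = requests.Request('GET', url, headers=HEADERS, params={'start': start})
    return req.prepare()

prepared = build_request("https://movie.douban.com/top250", start=25)
print(prepared.method, prepared.url)  # GET https://movie.douban.com/top250?start=25
print(prepared.headers['User-Agent'])
```

To actually send a prepared request, pass it to a session, for example `requests.Session().send(prepared, timeout=10)`.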

2.2 Parsing the HTML

```python
def parse_movie_info(html):
    """Extract the title, rating and one-line quote of each movie."""
    if not html:
        return []
    soup = BeautifulSoup(html, 'lxml')
    movies = []
    # Every movie on the list page sits in a <div class="item">
    items = soup.find_all('div', class_='item')
    for item in items[:5]:  # only take the first 5 movies
        # Title
        title_elem = item.find('span', class_='title')
        title = title_elem.get_text(strip=True) if title_elem else "No title"
        # Rating
        rating_elem = item.find('span', class_='rating_num')
        rating = rating_elem.get_text(strip=True) if rating_elem else "No rating"
        # One-line quote (a movie may have none)
        quote_elem = item.find('span', class_='inq')
        quote = quote_elem.get_text(strip=True) if quote_elem else "No quote"
        movies.append({
            'title': title,
            'rating': rating,
            'quote': quote
        })
    return movies

# Run the parser on the page fetched in 2.1
movies = parse_movie_info(html)
for i, movie in enumerate(movies, 1):
    print(f"{i}. {movie['title']} - Rating: {movie['rating']}")
    print(f"   Quote: {movie['quote']}\n")
```
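The live page can change or block requests, so it helps to test the parsing logic against a small hand-written snippet first. The HTML below is a made-up imitation of the structure `parse_movie_info` expects, not real page content. The sketch uses BeautifulSoup's built-in `html.parser` backend so it runs without lxml. It also uses CSS selectors (`select` / `select_one`) as an alternative to `find` / `find_all`.

```python
from bs4 import BeautifulSoup

# Made-up sample with the structure parse_movie_info expects (not real page HTML)
SAMPLE_HTML = """
<div class="item">
  <span class="title">Movie A</span>
  <span class="rating_num">9.0</span>
  <span class="inq">A sample one-line quote.</span>
</div>
<div class="item">
  <span class="title">Movie B</span>
  <span class="rating_num">8.5</span>
</div>
"""

def parse_items(html):
    """Same fields as parse_movie_info, written with CSS selectors."""
    soup = BeautifulSoup(html, 'html.parser')
    movies = []
    for item in soup.select('div.item'):
        title = item.select_one('span.title')
        rating = item.select_one('span.rating_num')
        quote = item.select_one('span.inq')
        movies.append({
            'title': title.get_text(strip=True) if title else "No title",
            'rating': rating.get_text(strip=True) if rating else "No rating",
            'quote': quote.get_text(strip=True) if quote else "No quote",
        })
    return movies

for movie in parse_items(SAMPLE_HTML):
    print(movie)
```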

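3. Hands-On Project: Scraping Weather Forecasts

3.1 Analyzing the Target Site

We use China Weather (weather.com.cn) as the example: http://www.weather.com.cn/weather/101010100.shtml. The number in the URL is the city code (101010100 is Beijing).

3.2 Complete Scraper Code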

```python
import requests
from bs4 import BeautifulSoup
import pandas as pd
import time
import random

class WeatherSpider:
    def __init__(self):
        self.headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36',
            'Referer': 'http://www.weather.com.cn/'
        }

    def get_weather(self, city_code='101010100'):
        """Fetch the 7-day forecast for the given city code."""
        url = f'http://www.weather.com.cn/weather/{city_code}.shtml'
        try:
            response = requests.get(url, headers=self.headers, timeout=15)
            response.encoding = 'utf-8'
            if response.status_code == 200:
                return self.parse_weather(response.text)
            print(f"Request failed, status code: {response.status_code}")
            return None
        except requests.RequestException as e:
            print(f"Error: {e}")
            return None

    def parse_weather(self, html):
        """Parse the forecast list into a list of dicts."""
        soup = BeautifulSoup(html, 'lxml')
        weather_data = []
        # Each day of the 7-day forecast is an <li> under <ul class="t clearfix">
        days = soup.select('ul.t.clearfix > li')
        for day in days[:7]:
            # Date
            date_elem = day.select_one('h1')
            date = date_elem.text if date_elem else "Unknown date"
            # Weather conditions
            wea_elem = day.select_one('p.wea')
            weather = wea_elem.text if wea_elem else "Unknown"
            # Temperature: high in <span>, low in <i>; either may be missing
            temp_elem = day.select_one('p.tem')
            high_elem = temp_elem.select_one('span') if temp_elem else None
            low_elem = temp_elem.select_one('i') if temp_elem else None
            high_temp = high_elem.text if high_elem else "Unknown"
            low_temp = low_elem.text if low_elem else "Unknown"
            # Wind direction and force
            win_elem = day.select_one('p.win')
            wind_elem = win_elem.select_one('i') if win_elem else None
            wind = wind_elem.text if wind_elem else "Unknown"
            # Keys stay in Chinese: 日期=date, 天气=weather, 最高温=high,
            # 最低温=low, 风向风力=wind direction/force
            weather_data.append({
                '日期': date,
                '天气': weather,
                '最高温': high_temp,
                '最低温': low_temp,
                '风向风力': wind
            })
        return weather_data

    def save_to_csv(self, data, filename='weather.csv'):
        """Save the data to a CSV file (utf-8-sig so Excel shows Chinese correctly)."""
        if data:
            df = pd.DataFrame(data)
            df.to_csv(filename, index=False, encoding='utf-8-sig')
            print(f"Data saved to {filename}")
            return True
        return False

# Usage example
if __name__ == "__main__":
    spider = WeatherSpider()
    # City codes: Beijing 101010100, Shanghai 101020100, Guangzhou 101280101
    weather_data = spider.get_weather('101010100')
    if weather_data:
        print("Beijing 7-day forecast:")
        print("=" * 60)
        for day in weather_data:
            print(f"{day['日期']:10} {day['天气']:10} "
                  f"Temp: {day['最低温']}~{day['最高温']} "
                  f"Wind: {day['风向风力']}")
        # Save the data
        spider.save_to_csv(weather_data)
```
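The site's markup can change, so it is worth checking the selectors in `parse_weather` offline. The snippet below is a hand-written imitation of the structure those selectors assume, not real page HTML: `ul.t.clearfix > li` containing `h1`, `p.wea`, `p.tem` with `span`/`i`, and `p.win` with `i`. To run without the class or a network connection, the sketch reimplements the same selector logic as a standalone function. `parse_days` and `text_or` are hypothetical names, and the dict keys are English for readability.

```python
from bs4 import BeautifulSoup

# Hand-written sample with the structure parse_weather expects (not real page HTML)
SAMPLE = """
<ul class="t clearfix">
  <li><h1>Day 1</h1><p class="wea">Sunny</p>
      <p class="tem"><span>30</span>/<i>20℃</i></p>
      <p class="win"><i>Level 3-4</i></p></li>
  <li><h1>Day 2</h1><p class="wea">Cloudy</p>
      <p class="tem"><i>19℃</i></p></li>
</ul>
"""

def text_or(parent, selector, default="Unknown"):
    """Text of the first match of selector inside parent, or default."""
    elem = parent.select_one(selector) if parent else None
    return elem.get_text(strip=True) if elem else default

def parse_days(html):
    """Apply the same selectors as parse_weather to an HTML string."""
    soup = BeautifulSoup(html, 'html.parser')
    rows = []
    for day in soup.select('ul.t.clearfix > li')[:7]:
        rows.append({
            'date': text_or(day, 'h1'),
            'weather': text_or(day, 'p.wea'),
            'high': text_or(day, 'p.tem span'),
            'low': text_or(day, 'p.tem i'),
            'wind': text_or(day, 'p.win i'),
        })
    return rows

for row in parse_days(SAMPLE):
    print(row)
```

The `text_or` helper collapses the repeated `x.text if x else default` pattern, which keeps the handling of missing elements in one place.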

4. Advanced Techniques and Caveats

4.1 Handling Dynamically Loaded Content

```python
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC

def dynamic_crawler(url):
    """Render a JavaScript-driven page with Selenium and return its HTML."""
    options = webdriver.ChromeOptions()
    options.add_argument('--headless')  # headless mode: no browser window
    options.add_argument('--disable-gpu')
    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        # Wait up to 10 s for an element with class "content" to appear
        # ("content" is a placeholder; use a selector from your target page)
        WebDriverWait(driver, 10).until(
            EC.presence_of_element_located((By.CLASS_NAME, "content"))
        )
        # Page source after the JavaScript has run
        return driver.page_source
    finally:
        driver.quit()  # always close the browser, even on errors
```
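Playwright, one of the optional libraries installed in section 1.1, can do the same job. Below is a rough equivalent that uses its synchronous API. The `.content` selector is the same placeholder as in the Selenium example, and `dynamic_crawler_playwright` is a hypothetical function name. Running it requires Playwright's browser binaries, which you install with `playwright install chromium`.

```python
def dynamic_crawler_playwright(url, selector=".content", timeout_ms=10_000):
    """Render url in headless Chromium and return the HTML once selector appears."""
    # Imported lazily so this module still loads where Playwright is not installed
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        try:
            page = browser.new_page()
            page.goto(url)
            # Wait for the element; raises Playwright's TimeoutError after timeout_ms
            page.wait_for_selector(selector, timeout=timeout_ms)
            return page.content()
        finally:
            browser.close()  # close the browser even if waiting failed
```

Playwright waits for the page's load event in `goto()` by default. The explicit `wait_for_selector` call covers content that arrives later through JavaScript.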

4.2 Dealing with Anti-Scraping Measures

```python
import random
import time

import requests

class AntiAntiSpider:
    def __init__(self):
        self.session = requests.Session()  # reuses connections and cookies
        self.delay_range = (1, 3)  # random delay of 1-3 seconds

    def random_delay(self):
        """Sleep for a random interval so requests are not sent too quickly."""
        time.sleep(random.uniform(*self.delay_range))

    def rotate_user_agent(self):
        """Pick a random User-Agent string."""
        user_agents = [
            'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36',
            'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15',
            'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36'
        ]
        return random.choice(user_agents)

    def make_request(self, url, max_retries=3):
        """GET with retries; returns the Response, or None if every attempt fails."""
        for attempt in range(max_retries):
            try:
                headers = {'User-Agent': self.rotate_user_agent()}
                response = self.session.get(url, headers=headers, timeout=15)
                if response.status_code == 200:
                    return response
                elif response.status_code == 429:  # Too Many Requests
                    print("Rate limited, waiting before retrying...")
                    time.sleep(10)
                else:
                    print(f"Request failed, status code: {response.status_code}")
            except requests.RequestException as e:
                print(f"Request error: {e}")
            self.random_delay()
        return None
```
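Instead of a hand-written retry loop, `requests` can hand retries off to urllib3. You do this by mounting an `HTTPAdapter` configured with a `Retry` policy. The sketch below retries on 429 and common 5xx status codes with exponential backoff. It also honors a `Retry-After` header when the server sends one. The specific numbers are illustrative choices, not values from this article, and `make_retrying_session` is a hypothetical helper name.

```python
import requests
from requests.adapters import HTTPAdapter
from urllib3.util.retry import Retry

def make_retrying_session(total=3, backoff_factor=1.0):
    """Session that retries 429/5xx responses and connection errors with backoff."""
    retry = Retry(
        total=total,                        # at most this many retries per request
        backoff_factor=backoff_factor,      # sleep grows exponentially between retries
        status_forcelist=[429, 500, 502, 503, 504],
        respect_retry_after_header=True,    # wait as long as Retry-After asks
    )
    adapter = HTTPAdapter(max_retries=retry)
    session = requests.Session()
    session.mount("http://", adapter)
    session.mount("https://", adapter)
    return session

session = make_retrying_session()
# response = session.get("https://httpbin.org/status/200", timeout=15)
```

If the retries run out on one of these status codes, `session.get` raises `requests.exceptions.RetryError`. That exception is a subclass of `requests.RequestException`, so the `except` clause in `make_request` would still catch it.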

4.3 Storing the Data

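```python
import json
import sqlite3
from datetime import datetime

class DataStorage:
    """Helpers for saving scraped data."""

    @staticmethod
    def save_json(data, filename):
        """Save as JSON; ensure_ascii=False keeps Chinese text readable."""
        with open(filename, 'w', encoding='utf-8') as f:
            json.dump(data, f, ensure_ascii=False, indent=2)
        print(f"JSON data saved to {filename}")

    @staticmethod
    def save_sqlite(data, db_name='spider_data.db'):
        """Save weather records (the dicts from WeatherSpider) to SQLite."""
        conn = sqlite3.connect(db_name)
        try:
            cursor = conn.cursor()
            # Create the table if it does not exist yet
            cursor.execute('''
                CREATE TABLE IF NOT EXISTS weather (
                    id INTEGER PRIMARY KEY AUTOINCREMENT,
                    date TEXT,
                    weather TEXT,
                    high_temp TEXT,
                    low_temp TEXT,
                    wind TEXT,
                    crawl_time TIMESTAMP
                )
            ''')
            # Store the crawl time as an ISO string: sqlite3's default
            # datetime adapter is deprecated since Python 3.12
            crawl_time = datetime.now().isoformat(sep=' ', timespec='seconds')
            # Insert all rows using ? placeholders
            cursor.executemany('''
                INSERT INTO weather (date, weather, high_temp, low_temp, wind, crawl_time)
                VALUES (?, ?, ?, ?, ?, ?)
            ''', [
                (item['日期'], item['天气'], item['最高温'],
                 item['最低温'], item['风向风力'], crawl_time)
                for item in data
            ])
            conn.commit()
        finally:
            conn.close()  # close the connection even if an insert fails
        print(f"Data saved to database {db_name}")
```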
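If you run the weather scraper every day, the same dates get inserted again and again. One way to avoid duplicates is to make the date a `PRIMARY KEY` and write rows with `INSERT OR REPLACE`, so a re-crawled day overwrites its older row. The sketch below shows this with an in-memory database. The simplified table (no `id` or `crawl_time`) and the `upsert_weather` name are hypothetical variations on the `weather` table above.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS weather (
    date TEXT PRIMARY KEY,   -- one row per forecast date
    weather TEXT,
    high_temp TEXT,
    low_temp TEXT,
    wind TEXT
)
"""

def upsert_weather(conn, rows):
    """Insert rows; a row whose date already exists replaces the old one."""
    with conn:  # commits on success, rolls back on exception
        conn.execute(SCHEMA)
        conn.executemany(
            "INSERT OR REPLACE INTO weather VALUES (?, ?, ?, ?, ?)",
            rows,
        )

conn = sqlite3.connect(":memory:")
upsert_weather(conn, [("Day 1", "Sunny", "30", "20", "Level 3")])
upsert_weather(conn, [("Day 1", "Cloudy", "28", "19", "Level 2")])  # re-crawl
print(conn.execute("SELECT * FROM weather").fetchall())
# [('Day 1', 'Cloudy', '28', '19', 'Level 2')]
```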

5. Ethical and Legal Considerations

5.1 Scraping Etiquette

5.2 Checking robots.txt

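```python
import urllib.robotparser
from urllib.parse import urlparse

def check_robots_permission(url, user_agent='*'):
    """Return True if the site's robots.txt allows user_agent to fetch url."""
    parts = urlparse(url)
    rp = urllib.robotparser.RobotFileParser()
    rp.set_url(f"{parts.scheme}://{parts.netloc}/robots.txt")
    rp.read()  # download and parse robots.txt
    return rp.can_fetch(user_agent, url)

# Usage example
url = "https://www.example.com/data"
if check_robots_permission(url):
    print("Crawling allowed")
else:
    print("Crawling disallowed; please respect the site's rules")
```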
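You can also feed `RobotFileParser` rules directly through `parse()`. This is a handy way to see how rules are interpreted without any network access. The robots.txt content below is made up for illustration. `crawl_delay()` returns the `Crawl-delay` value that applies to a user agent, or `None` if there is none, and you can use it to set your own delay between requests.

```python
import urllib.robotparser

# A made-up robots.txt for illustration
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

rp = urllib.robotparser.RobotFileParser()
rp.parse(ROBOTS_TXT.splitlines())

print(rp.can_fetch("*", "https://www.example.com/data"))       # True
print(rp.can_fetch("*", "https://www.example.com/private/x"))  # False
print(rp.crawl_delay("*"))                                     # 2
```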

6. Complete Project: A Book Information Scraper

```python
import requests
from bs4 import BeautifulSoup
import csv
import time

class BookSpider:
    def __init__(self):
        self.base_url = "http://books.toscrape.com/"
        self.books_data = []

    def scrape_all_books(self):
        """Scrape every catalogue page until one is missing or empty."""
        page = 1
        while True:
            url = f"{self.base_url}catalogue/page-{page}.html"
            print(f"Scraping page {page}...")
            html = self.get_page(url)
            if not html:  # e.g. a page past the last one
                break
            books = self.parse_books(html)
            if not books:
                break
            self.books_data.extend(books)
            page += 1
            time.sleep(1)  # polite delay
        return self.books_data

    def get_page(self, url):
        """Return the raw page bytes, or None on failure."""
        try:
            response = requests.get(url, timeout=10)
            if response.status_code == 200:
                # Pass bytes so BeautifulSoup detects the encoding itself
                return response.content
            return None
        except requests.RequestException:
            return None

    def parse_books(self, html):
        """Extract the title, price and star rating from one catalogue page."""
        soup = BeautifulSoup(html, 'html.parser')
        book_list = []
        for book in soup.find_all('article', class_='product_pod'):
            # The link text is truncated; the title attribute holds the full title
            title = book.h3.a['title']
            price = book.select_one('p.price_color').text
            # The rating is encoded as a class name, e.g. class="star-rating Three"
            rating = book.select_one('p.star-rating')['class'][1]
            book_list.append({'title': title, 'price': price, 'rating': rating})
        return book_list

    def export_data(self, format='csv'):
        """Export the scraped data (only CSV is implemented)."""
        if format == 'csv':
            with open('books.csv', 'w', newline='', encoding='utf-8') as f:
                writer = csv.DictWriter(f, fieldnames=['title', 'price', 'rating'])
                writer.writeheader()
                writer.writerows(self.books_data)
            print(f"Exported {len(self.books_data)} books to books.csv")

# Run the scraper
if __name__ == "__main__":
    spider = BookSpider()
    books = spider.scrape_all_books()
    print(f"\nScraped {len(books)} books in total")
    for i, book in enumerate(books[:5], 1):
        print(f"{i}. {book['title']} - Price: {book['price']} - Rating: {book['rating']}")
    spider.export_data()
```
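The scraped `rating` is a word taken from a CSS class (such as `Three`), and `price` is a string with a currency sign. Both need converting before you can sort or average them. The sketch below is a minimal cleaning step. It assumes the ratings are the words One to Five and that prices contain a single number such as `£51.77`. `clean_book` and `RATING_WORDS` are hypothetical names.

```python
import re

# Assumed mapping: ratings appear as the class words One..Five
RATING_WORDS = {"One": 1, "Two": 2, "Three": 3, "Four": 4, "Five": 5}

def clean_book(book):
    """Return a copy of a scraped book dict with a numeric price and rating."""
    # Extract the first number, e.g. "£51.77" -> "51.77"
    match = re.search(r"\d+(?:\.\d+)?", book["price"])
    return {
        "title": book["title"],
        "price": float(match.group()) if match else None,
        "rating": RATING_WORDS.get(book["rating"]),  # None for unexpected words
    }

print(clean_book({"title": "Sample Book", "price": "£51.77", "rating": "Three"}))
# {'title': 'Sample Book', 'price': 51.77, 'rating': 3}
```

Because the regular expression ignores everything except the number, the sketch also copes with a mis-decoded currency sign such as `Â£`.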

7. Common Problems and Solutions

Q1: What should I do about a 403 Forbidden error?

- Add appropriate request headers (User-Agent, Referer, etc.)
- Use proxy IPs
- Lower your request rate
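The header and proxy suggestions can be combined in a `requests.Session` that carries browser-like default headers. In the sketch below, the header values and the proxy address `http://127.0.0.1:8080` are placeholders, not working values. `make_browser_like_session` and `PROXIES` are hypothetical names.

```python
import requests

def make_browser_like_session(referer=None):
    """Session whose requests all carry browser-like default headers."""
    session = requests.Session()
    session.headers.update({
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36",
        "Accept-Language": "zh-CN,zh;q=0.9,en;q=0.8",  # placeholder value
    })
    if referer:
        session.headers["Referer"] = referer
    return session

# Placeholder proxy; requests picks the entry that matches the URL scheme
PROXIES = {"http": "http://127.0.0.1:8080", "https": "http://127.0.0.1:8080"}

s = make_browser_like_session(referer="http://www.weather.com.cn/")
# response = s.get(url, proxies=PROXIES, timeout=10)
```

Passing `proxies=` on each call is explicit. It also avoids relying on `session.proxies`, which proxy environment variables such as `HTTPS_PROXY` can override.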

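Q2: What if the text comes out garbled (wrong encoding)?

- Set `response.encoding = response.apparent_encoding`
- Try other encodings: utf-8, gbk, gb2312
- Use the chardet library to detect the encoding automatically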
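The advice to try other encodings can be automated with a fallback chain. The standard-library sketch below tries UTF-8 first, then GB18030. GB18030 is a superset of GBK, which in turn covers GB2312, so one entry handles all three. As a last resort it decodes with replacement characters instead of failing. UTF-8 goes first because strict UTF-8 decoding rarely succeeds on bytes in another encoding by accident. `decode_html` is a hypothetical name, and `chardet` is left out to keep the sketch dependency-free.

```python
def decode_html(raw: bytes, candidates=("utf-8", "gb18030")) -> str:
    """Decode raw bytes with the first candidate encoding that succeeds."""
    for encoding in candidates:
        try:
            return raw.decode(encoding)
        except UnicodeDecodeError:
            continue
    # Last resort: keep going, replacing undecodable bytes with U+FFFD
    return raw.decode("utf-8", errors="replace")

gbk_bytes = "天气预报".encode("gbk")
print(decode_html(gbk_bytes))  # 天气预报
```

With `requests`, you would call it as `decode_html(response.content)`, passing the raw bytes rather than `response.text`.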

Q3: How can I make my scraper faster?

- Use concurrent.futures for multithreading
- Use aiohttp for asynchronous scraping
- Set sensible delays so you do not get blocked
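For the multithreading suggestion, `concurrent.futures.ThreadPoolExecutor` runs I/O-bound fetches in parallel. The sketch keeps the worker count small and makes each task pause before fetching, so the extra speed does not turn into a burst of requests against one site. `fetch_all` is a hypothetical helper. Its `fetch` argument stands for any single-URL function, such as `get_webpage` from section 2.1, and the usage below passes a fake function to avoid network access.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed

def fetch_all(urls, fetch, max_workers=4, delay=0.5):
    """Call fetch(url) for every url using a small thread pool.

    Returns {url: result}; an exception raised by fetch is stored as that url's result.
    """
    def task(url):
        time.sleep(delay)  # each task pauses before its request (crude politeness)
        return fetch(url)

    results = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(task, url): url for url in urls}
        for future in as_completed(futures):
            url = futures[future]
            try:
                results[url] = future.result()
            except Exception as exc:
                results[url] = exc
    return results

# Usage with a fake fetch function (no network access)
pages = [f"http://books.toscrape.com/catalogue/page-{n}.html" for n in range(1, 6)]
out = fetch_all(pages, fetch=lambda url: len(url), delay=0.1)
print(len(out))  # 5
```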

8. Recommended Learning Resources

8.1 Further Reading

Official documentation:

- requests: https://docs.python-requests.org/
- BeautifulSoup: https://www.crummy.com/software/BeautifulSoup/bs4/doc/

Advanced libraries:

- Scrapy: a full-featured scraping framework
- Playwright: modern browser automation
- PyQuery: a jQuery-style parsing library

8.2 Practice Sites

- http://books.toscrape.com/
- http://quotes.toscrape.com/
- https://httpbin.org/

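Summary

This article walked through the complete Python scraping workflow from scratch, covering basic requests, data parsing, dealing with anti-scraping measures and data storage. Learning to scrape takes both theory and practice; we recommend that beginners:

Remember, scraping is not just a technical skill; it also tests your patience and attention to detail. When you run into problems, read the documentation and keep debugging, and you will become a scraping expert!

Disclaimer: This article is for learning and exchange only. Please comply with applicable laws and regulations and with each website's rules, and do not use these techniques for illegal purposes.

I hope this tutorial helps you get started with web scraping in Python! If you have any questions, feel free to leave a comment below.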

网硕互联 Help Center
