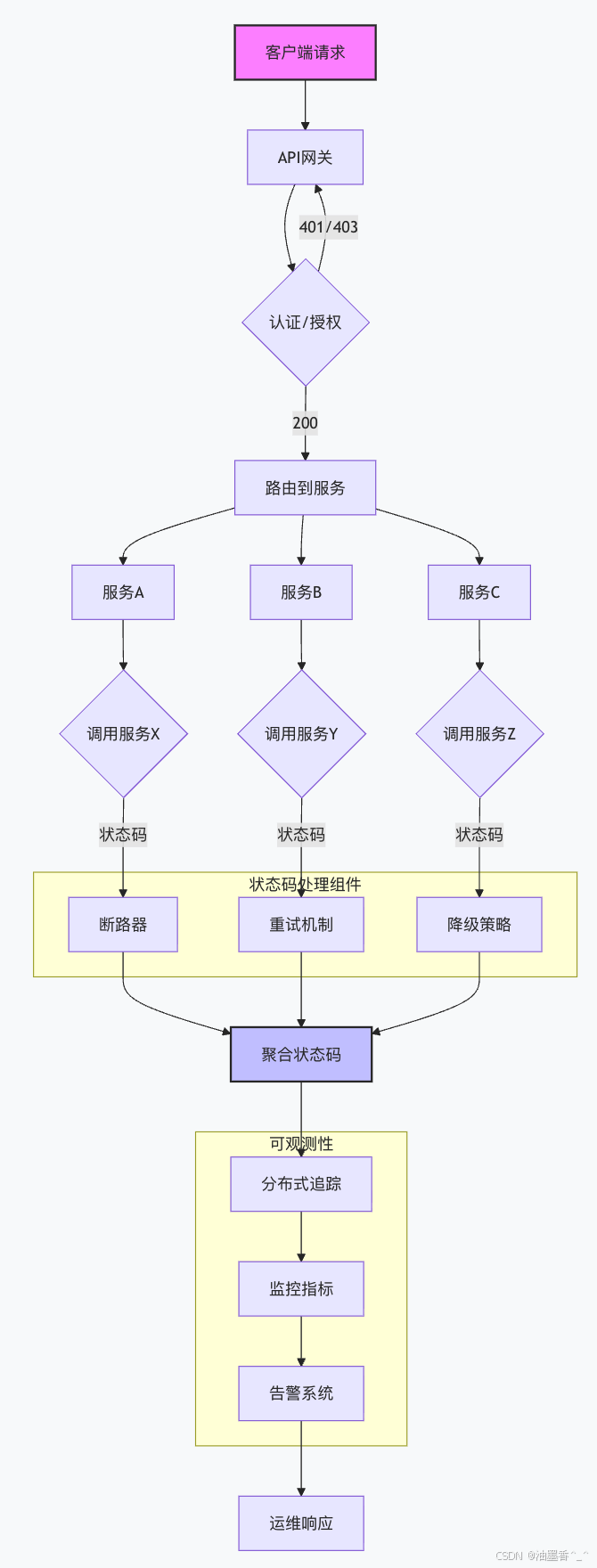

第33章:微服务架构中的状态码传播

33.1 微服务架构中的状态码挑战

33.1.1 分布式系统的复杂性

微服务架构带来了状态码处理的全新挑战:

yaml

# 微服务调用链示例

request:

path: /api/order/123

flow:

– API Gateway (nginx/ingress)

– Authentication Service

– Order Service

– calls: User Service

– calls: Inventory Service

– calls: Payment Service

– calls: Notification Service

– Load Balancer

– Service Mesh (Istio/Linkerd)

# 每个服务都可能返回不同的状态码

status_codes_chain:

– API Gateway: 200

– Authentication Service: 401 (token expired)

– Order Service: 500 (dependency failed)

– User Service: 404 (user not found)

– Inventory Service: 409 (insufficient stock)

– Payment Service: 422 (payment failed)

– Notification Service: 503 (service unavailable)

33.1.2 状态码传播原则

java

// 微服务状态码传播的核心原则

public class StatusCodePropagationPrinciples {

// 原则1: 透明的错误传播

public static class TransparentPropagation {

// 错误应该尽可能透明地传播到调用链的上游

// 但同时需要避免敏感信息泄露

}

// 原则2: 上下文完整性

public static class ContextIntegrity {

// 每个错误都应该携带完整的调用链上下文

// 包括服务名称、调用路径、时间戳等

}

// 原则3: 降级策略

public static class GracefulDegradation {

// 部分服务的失败不应该导致整个系统崩溃

// 需要实现优雅的降级策略

}

// 原则4: 可追溯性

public static class Traceability {

// 每个状态码都应该能够追溯到具体的服务和操作

}

}

33.2 服务间状态码传播模式

33.2.1 直接传播模式

go

// Go语言实现的状态码直接传播

package main

import (

"context"

"encoding/json"

"fmt"

"net/http"

"time"

"github.com/opentracing/opentracing-go"

"go.uber.org/zap"

)

// 直接传播中间件

func DirectPropagationMiddleware(next http.Handler) http.Handler {

return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {

// 1. 从上游服务接收请求上下文

ctx := r.Context()

// 2. 提取上游的状态码信息

upstreamStatusCode := r.Header.Get("X-Upstream-Status-Code")

upstreamService := r.Header.Get("X-Upstream-Service")

// 3. 创建响应包装器以捕获状态码

rw := &responseWriter{ResponseWriter: w}

// 4. 处理请求

next.ServeHTTP(rw, r)

// 5. 向下游传播状态码

if rw.statusCode >= 400 {

// 记录错误上下文

logErrorContext(r, upstreamStatusCode, upstreamService, rw.statusCode)

// 设置响应头,为可能的下游服务提供上下文

w.Header().Set("X-Error-Propagated", "true")

w.Header().Set("X-Failed-Service", getServiceName())

// 如果有上游服务,记录调用链

if upstreamService != "" {

w.Header().Set("X-Error-Chain",

fmt.Sprintf("%s->%s:%d",

upstreamService, getServiceName(), rw.statusCode))

}

}

})

}

// 响应包装器

type responseWriter struct {

http.ResponseWriter

statusCode int

wroteHeader bool

}

func (rw *responseWriter) WriteHeader(code int) {

if !rw.wroteHeader {

rw.statusCode = code

rw.ResponseWriter.WriteHeader(code)

rw.wroteHeader = true

}

}

func (rw *responseWriter) Write(b []byte) (int, error) {

if !rw.wroteHeader {

rw.WriteHeader(http.StatusOK)

}

return rw.ResponseWriter.Write(b)

}

// 日志记录错误上下文

func logErrorContext(r *http.Request, upstreamCode, upstreamService string, currentCode int) {

logger := zap.L()

errorContext := map[string]interface{}{

"timestamp": time.Now().UTC(),

"request_id": r.Header.Get("X-Request-ID"),

"trace_id": r.Header.Get("X-Trace-ID"),

"current_service": getServiceName(),

"current_status": currentCode,

"upstream_service": upstreamService,

"upstream_status": upstreamCode,

"endpoint": r.URL.Path,

"method": r.Method,

"user_agent": r.UserAgent(),

"client_ip": getClientIP(r),

}

// 添加分布式追踪信息

if span := opentracing.SpanFromContext(r.Context()); span != nil {

errorContext["span_id"] = fmt.Sprintf("%v", span)

}

logger.Error("Service error with propagation context",

zap.Any("context", errorContext))

}

// HTTP客户端支持状态码传播

type PropagatingHTTPClient struct {

client *http.Client

serviceName string

}

func NewPropagatingHTTPClient(serviceName string) *PropagatingHTTPClient {

return &PropagatingHTTPClient{

client: &http.Client{

Timeout: 30 * time.Second,

Transport: &http.Transport{

MaxIdleConns: 100,

MaxIdleConnsPerHost: 10,

IdleConnTimeout: 90 * time.Second,

},

},

serviceName: serviceName,

}

}

func (c *PropagatingHTTPClient) Do(req *http.Request) (*http.Response, error) {

// 添加传播头

req.Header.Set("X-Caller-Service", c.serviceName)

req.Header.Set("X-Caller-Request-ID", req.Header.Get("X-Request-ID"))

// 执行请求

resp, err := c.client.Do(req)

if err != nil {

return nil, fmt.Errorf("HTTP request failed: %w", err)

}

// 如果响应是错误,记录传播信息

if resp.StatusCode >= 400 {

errorChain := resp.Header.Get("X-Error-Chain")

if errorChain == "" {

resp.Header.Set("X-Error-Chain",

fmt.Sprintf("%s:%d", c.serviceName, resp.StatusCode))

} else {

resp.Header.Set("X-Error-Chain",

fmt.Sprintf("%s->%s:%d", errorChain, c.serviceName, resp.StatusCode))

}

}

return resp, nil

}

33.2.2 聚合传播模式

python

# Python聚合传播实现

from typing import Dict, List, Optional, Any, Tuple

from dataclasses import dataclass, asdict

import json

import time

from enum import Enum

import logging

from concurrent.futures import ThreadPoolExecutor, as_completed

logger = logging.getLogger(__name__)

class ErrorSeverity(Enum):

CRITICAL = 100 # 5xx errors

ERROR = 200 # 4xx errors that affect user

WARNING = 300 # 4xx errors that don't affect user

INFO = 400 # 2xx with warnings

@dataclass

class ServiceError:

service: str

status_code: int

message: str

endpoint: str

timestamp: float

severity: ErrorSeverity

retryable: bool

dependencies: List[str] = None

def to_dict(self) -> Dict:

return {

**asdict(self),

'severity': self.severity.value,

'timestamp': time.strftime('%Y-%m-%d %H:%M:%S',

time.localtime(self.timestamp))

}

class StatusCodeAggregator:

def __init__(self, service_name: str):

self.service_name = service_name

self.errors: List[ServiceError] = []

self.successes: List[Dict] = []

def add_service_result(self, service: str, status_code: int,

message: str, endpoint: str,

retryable: bool = False):

"""添加服务调用结果"""

# 确定错误严重性

if 500 <= status_code < 600:

severity = ErrorSeverity.CRITICAL

elif status_code == 401 or status_code == 403:

severity = ErrorSeverity.ERROR

elif 400 <= status_code < 500:

severity = ErrorSeverity.WARNING

elif 200 <= status_code < 300:

severity = ErrorSeverity.INFO

else:

severity = ErrorSeverity.WARNING

error = ServiceError(

service=service,

status_code=status_code,

message=message,

endpoint=endpoint,

timestamp=time.time(),

severity=severity,

retryable=retryable

)

if status_code >= 400:

self.errors.append(error)

else:

self.successes.append(error.to_dict())

return error

def aggregate_status_code(self) -> Tuple[int, Dict[str, Any]]:

"""聚合多个服务的状态码"""

if not self.errors:

return 200, {

'status': 'success',

'data': self.successes,

'timestamp': time.time()

}

# 按严重性排序错误

self.errors.sort(key=lambda x: x.severity.value)

# 获取最高严重性的错误

primary_error = self.errors[0]

# 确定最终状态码

final_status_code = self._determine_final_status_code()

# 构建响应

response = {

'status': 'partial_failure' if len(self.errors) < len(self.errors) + len(self.successes) else 'failure',

'error': {

'code': f"AGGREGATED_ERROR_{final_status_code}",

'message': self._generate_aggregated_message(),

'timestamp': time.time(),

'details': {

'primary_error': primary_error.to_dict(),

'all_errors': [e.to_dict() for e in self.errors],

'successful_calls': self.successes,

'aggregation_strategy': 'highest_severity'

}

}

}

return final_status_code, response

def _determine_final_status_code(self) -> int:

"""根据聚合策略确定最终状态码"""

# 策略1: 如果有5xx错误,返回500

if any(e.severity == ErrorSeverity.CRITICAL for e in self.errors):

return 500

# 策略2: 如果有用户相关的4xx错误,返回最相关的那个

user_errors = [e for e in self.errors

if e.severity == ErrorSeverity.ERROR]

if user_errors:

# 优先返回认证/授权错误

for error in user_errors:

if error.status_code in [401, 403]:

return error.status_code

# 否则返回第一个用户错误

return user_errors[0].status_code

# 策略3: 返回第一个警告级别的错误

return self.errors[0].status_code

def _generate_aggregated_message(self) -> str:

"""生成聚合错误消息"""

if len(self.errors) == 1:

return f"Service {self.errors[0].service} failed: {self.errors[0].message}"

error_count = len(self.errors)

critical_count = sum(1 for e in self.errors

if e.severity == ErrorSeverity.CRITICAL)

if critical_count > 0:

return f"{critical_count} critical service(s) failed out of {error_count} errors"

return f"{error_count} service(s) reported errors"

# 使用示例:订单处理服务

class OrderProcessingService:

def __init__(self):

self.aggregator = StatusCodeAggregator("order-service")

def process_order(self, order_data: Dict) -> Tuple[int, Dict]:

"""处理订单,调用多个微服务"""

# 并行调用多个服务

with ThreadPoolExecutor(max_workers=4) as executor:

futures = {

executor.submit(self._validate_user, order_data['user_id']): 'user-service',

executor.submit(self._check_inventory, order_data['items']): 'inventory-service',

executor.submit(self._calculate_shipping, order_data['address']): 'shipping-service',

executor.submit(self._validate_payment, order_data['payment']): 'payment-service'

}

for future in as_completed(futures):

service_name = futures[future]

try:

status_code, message = future.result(timeout=10)

self.aggregator.add_service_result(

service_name, status_code, message,

f"/api/{service_name.replace('-', '/')}"

)

except TimeoutError:

self.aggregator.add_service_result(

service_name, 504, "Service timeout",

f"/api/{service_name.replace('-', '/')}",

retryable=True

)

except Exception as e:

self.aggregator.add_service_result(

service_name, 500, str(e),

f"/api/{service_name.replace('-', '/')}",

retryable=True

)

# 聚合结果

final_status, response = self.aggregator.aggregate_status_code()

# 如果所有必需服务都成功,创建订单

if final_status == 200:

order_result = self._create_order(order_data)

response['data']['order'] = order_result

return final_status, response

def _validate_user(self, user_id: str) -> Tuple[int, str]:

# 调用用户服务

# 返回 (status_code, message)

pass

def _check_inventory(self, items: List) -> Tuple[int, str]:

# 调用库存服务

pass

def _calculate_shipping(self, address: Dict) -> Tuple[int, str]:

# 调用物流服务

pass

def _validate_payment(self, payment: Dict) -> Tuple[int, str]:

# 调用支付服务

pass

def _create_order(self, order_data: Dict) -> Dict:

# 创建订单

pass

33.3 分布式追踪与状态码

33.3.1 OpenTelemetry集成

typescript

// TypeScript OpenTelemetry集成

import { NodeTracerProvider } from '@opentelemetry/node';

import { SimpleSpanProcessor } from '@opentelemetry/tracing';

import { JaegerExporter } from '@opentelemetry/exporter-jaeger';

import { ZipkinExporter } from '@opentelemetry/exporter-zipkin';

import { context, Span, SpanStatusCode } from '@opentelemetry/api';

import { HttpInstrumentation } from '@opentelemetry/instrumentation-http';

import { ExpressInstrumentation } from '@opentelemetry/instrumentation-express';

import { Resource } from '@opentelemetry/resources';

import { SemanticResourceAttributes } from '@opentelemetry/semantic-conventions';

// 配置追踪提供商

const provider = new NodeTracerProvider({

resource: new Resource({

[SemanticResourceAttributes.SERVICE_NAME]: process.env.SERVICE_NAME || 'unknown-service',

[SemanticResourceAttributes.DEPLOYMENT_ENVIRONMENT]: process.env.NODE_ENV || 'development',

}),

});

// 添加导出器

const jaegerExporter = new JaegerExporter({

endpoint: process.env.JAEGER_ENDPOINT || 'http://localhost:14268/api/traces',

});

const zipkinExporter = new ZipkinExporter({

url: process.env.ZIPKIN_ENDPOINT || 'http://localhost:9411/api/v2/spans',

});

provider.addSpanProcessor(new SimpleSpanProcessor(jaegerExporter));

provider.addSpanProcessor(new SimpleSpanProcessor(zipkinExporter));

// 注册提供商

provider.register();

// 仪表化HTTP和Express

const httpInstrumentation = new HttpInstrumentation();

const expressInstrumentation = new ExpressInstrumentation();

// 状态码追踪中间件

export function statusCodeTracingMiddleware(req: any, res: any, next: Function) {

const tracer = provider.getTracer('http-server');

const span = tracer.startSpan(`${req.method} ${req.path}`, {

attributes: {

'http.method': req.method,

'http.url': req.url,

'http.route': req.path,

'http.user_agent': req.get('user-agent'),

'http.client_ip': req.ip,

},

});

// 将span存储在上下文中

const ctx = context.active();

context.bind(ctx, span);

// 存储span在请求对象上以便后续访问

req.span = span;

// 监听响应完成

const originalEnd = res.end;

res.end = function(…args: any[]) {

// 记录状态码

span.setAttribute('http.status_code', res.statusCode);

span.setAttribute('http.status_text', res.statusMessage);

// 根据状态码设置span状态

if (res.statusCode >= 400) {

span.setStatus({

code: SpanStatusCode.ERROR,

message: `HTTP ${res.statusCode}`,

});

// 添加错误属性

span.setAttribute('error', true);

span.setAttribute('error.type', `HTTP_${res.statusCode}`);

// 如果有错误信息,记录它

if (res.locals.error) {

span.setAttribute('error.message', res.locals.error.message);

span.recordException(res.locals.error);

}

} else {

span.setStatus({ code: SpanStatusCode.OK });

}

// 记录响应大小

const contentLength = res.get('content-length');

if (contentLength) {

span.setAttribute('http.response_size', parseInt(contentLength));

}

// 记录持续时间

const startTime = req._startTime || Date.now();

const duration = Date.now() – startTime;

span.setAttribute('http.duration_ms', duration);

// 结束span

span.end();

// 调用原始的end方法

return originalEnd.apply(this, args);

};

// 记录请求开始时间

req._startTime = Date.now();

next();

}

// HTTP客户端仪表化

export class TracedHttpClient {

private tracer: any;

constructor(private baseURL: string, serviceName: string) {

this.tracer = provider.getTracer(serviceName);

}

async request<T>(

method: string,

path: string,

data?: any,

options: any = {}

): Promise<{ status: number; data: T; headers: any }> {

const span = this.tracer.startSpan(`${method} ${path}`, {

attributes: {

'http.method': method,

'http.url': `${this.baseURL}${path}`,

'peer.service': this.baseURL.replace(/https?:\\/\\//, ''),

},

});

try {

const url = `${this.baseURL}${path}`;

const headers = {

'Content-Type': 'application/json',

…options.headers,

};

// 传播追踪上下文

const carrier: any = {};

const ctx = context.active();

const activeSpan = context.getActiveSpan();

if (activeSpan) {

const traceparent = activeSpan.spanContext().traceId;

headers['traceparent'] = `00-${traceparent}-${span.spanContext().spanId}-01`;

headers['tracestate'] = activeSpan.spanContext().traceState?.serialize();

}

const response = await fetch(url, {

method,

headers,

body: data ? JSON.stringify(data) : undefined,

…options,

});

// 记录响应信息

span.setAttribute('http.status_code', response.status);

span.setAttribute('http.status_text', response.statusText);

const responseData = await response.json().catch(() => null);

// 根据状态码设置span状态

if (response.status >= 400) {

span.setStatus({

code: SpanStatusCode.ERROR,

message: `HTTP ${response.status}`,

});

// 记录错误详情

span.setAttribute('error', true);

span.setAttribute('error.type', `HTTP_${response.status}`);

if (responseData?.error) {

span.setAttribute('error.message', responseData.error.message);

}

} else {

span.setStatus({ code: SpanStatusCode.OK });

}

// 记录响应头中的追踪信息

const serverTraceId = response.headers.get('x-trace-id');

if (serverTraceId) {

span.setAttribute('peer.trace_id', serverTraceId);

}

return {

status: response.status,

data: responseData,

headers: Object.fromEntries(response.headers.entries()),

};

} catch (error: any) {

// 记录异常

span.setStatus({

code: SpanStatusCode.ERROR,

message: error.message,

});

span.recordException(error);

span.setAttribute('error', true);

span.setAttribute('error.type', 'NETWORK_ERROR');

throw error;

} finally {

span.end();

}

}

// 批量请求追踪

async batchRequest<T>(

requests: Array<{ method: string; path: string; data?: any }>

): Promise<Array<{ status: number; data: T; error?: any }>> {

const batchSpan = this.tracer.startSpan('batch_request', {

attributes: {

'batch.request_count': requests.length,

'batch.service': this.baseURL,

},

});

try {

const results = await Promise.all(

requests.map(async (req) => {

const span = this.tracer.startSpan(`${req.method} ${req.path}`, {

attributes: {

'http.method': req.method,

'http.url': `${this.baseURL}${req.path}`,

},

});

try {

const result = await this.request<T>(req.method, req.path, req.data);

span.setAttribute('http.status_code', result.status);

if (result.status >= 400) {

span.setStatus({ code: SpanStatusCode.ERROR });

} else {

span.setStatus({ code: SpanStatusCode.OK });

}

return result;

} catch (error: any) {

span.setStatus({

code: SpanStatusCode.ERROR,

message: error.message,

});

span.recordException(error);

return {

status: 500,

data: null as any,

error: error.message,

};

} finally {

span.end();

}

})

);

// 统计批量结果

const successCount = results.filter(r => r.status < 400).length;

const errorCount = results.length – successCount;

batchSpan.setAttribute('batch.success_count', successCount);

batchSpan.setAttribute('batch.error_count', errorCount);

if (errorCount > 0) {

batchSpan.setStatus({

code: SpanStatusCode.ERROR,

message: `${errorCount} requests failed in batch`,

});

} else {

batchSpan.setStatus({ code: SpanStatusCode.OK });

}

return results;

} finally {

batchSpan.end();

}

}

}

// 状态码分析中间件

export function statusCodeAnalyticsMiddleware(req: any, res: any, next: Function) {

const tracer = provider.getTracer('analytics');

const span = tracer.startSpan('status_code_analysis', {

attributes: {

'analysis.type': 'status_code_distribution',

'service.name': process.env.SERVICE_NAME,

},

});

// 收集指标

const metrics = {

request_count: 0,

status_2xx: 0,

status_3xx: 0,

status_4xx: 0,

status_5xx: 0,

errors_by_code: {} as Record<string, number>,

avg_response_time: 0,

};

// 监听响应完成

const originalEnd = res.end;

res.end = function(…args: any[]) {

metrics.request_count++;

// 分类状态码

if (res.statusCode >= 200 && res.statusCode < 300) {

metrics.status_2xx++;

} else if (res.statusCode >= 300 && res.statusCode < 400) {

metrics.status_3xx++;

} else if (res.statusCode >= 400 && res.statusCode < 500) {

metrics.status_4xx++;

metrics.errors_by_code[res.statusCode] =

(metrics.errors_by_code[res.statusCode] || 0) + 1;

} else if (res.statusCode >= 500) {

metrics.status_5xx++;

metrics.errors_by_code[res.statusCode] =

(metrics.errors_by_code[res.statusCode] || 0) + 1;

}

// 记录响应时间

const startTime = req._startTime || Date.now();

const duration = Date.now() – startTime;

// 更新平均响应时间

metrics.avg_response_time =

(metrics.avg_response_time * (metrics.request_count – 1) + duration) /

metrics.request_count;

// 更新span属性

span.setAttributes({

'analysis.request_count': metrics.request_count,

'analysis.status_2xx': metrics.status_2xx,

'analysis.status_3xx': metrics.status_3xx,

'analysis.status_4xx': metrics.status_4xx,

'analysis.status_5xx': metrics.status_5xx,

'analysis.avg_response_time_ms': metrics.avg_response_time,

…Object.entries(metrics.errors_by_code).reduce((acc, [code, count]) => ({

…acc,

[`analysis.errors.${code}`]: count,

}), {}),

});

// 如果错误率过高,记录警告

const errorRate = (metrics.status_4xx + metrics.status_5xx) / metrics.request_count;

if (errorRate > 0.1 && metrics.request_count > 10) {

span.setStatus({

code: SpanStatusCode.ERROR,

message: `High error rate: ${(errorRate * 100).toFixed(1)}%`,

});

span.setAttribute('analysis.high_error_rate', true);

span.setAttribute('analysis.error_rate', errorRate);

}

return originalEnd.apply(this, args);

};

// 定期提交指标

const interval = setInterval(() => {

if (metrics.request_count > 0) {

span.addEvent('metrics_snapshot', metrics);

// 重置计数器(保留错误分布)

metrics.request_count = 0;

metrics.status_2xx = 0;

metrics.status_3xx = 0;

metrics.status_4xx = 0;

metrics.status_5xx = 0;

metrics.avg_response_time = 0;

}

}, 60000); // 每分钟提交一次

// 清理定时器

req.on('close', () => {

clearInterval(interval);

span.end();

});

next();

}

33.3.2 Jaeger追踪可视化

yaml

# jaeger-service-map.yaml

# Jaeger服务依赖图配置

service_dependencies:

enabled: true

storage: elasticsearch

# 状态码相关的标签

tags:

– "http.status_code"

– "error"

– "span.kind"

# 服务级别指标

metrics:

– name: "error_rate"

query: "sum(rate(http_server_requests_seconds_count{status=~\\"4..|5..\\"}[5m])) / sum(rate(http_server_requests_seconds_count[5m]))"

threshold: 0.05

severity: "warning"

– name: "p95_latency"

query: "histogram_quantile(0.95, rate(http_server_requests_seconds_bucket[5m]))"

threshold: 1.0

severity: "warning"

# 状态码分布仪表板

dashboard:

panels:

– title: "Status Code Distribution"

type: "heatmap"

query: "sum(rate(http_server_requests_seconds_count[5m])) by (status, service)"

– title: "Error Propagation Chain"

type: "graph"

query: |

trace_id, span_id, parent_id,

operationName, serviceName,

tags.http.status_code as status_code,

tags.error as error

filter: "tags.http.status_code >= 400"

– title: "Service Dependency Health"

type: "dependency_graph"

nodes:

– service: "api-gateway"

health: "sum(rate(http_server_requests_seconds_count{service=\\"api-gateway\\", status=~\\"2..\\"}[5m])) / sum(rate(http_server_requests_seconds_count{service=\\"api-gateway\\"}[5m]))"

– service: "order-service"

health: "sum(rate(http_server_requests_seconds_count{service=\\"order-service\\", status=~\\"2..\\"}[5m])) / sum(rate(http_server_requests_seconds_count{service=\\"order-service\\"}[5m]))"

– service: "payment-service"

health: "sum(rate(http_server_requests_seconds_count{service=\\"payment-service\\", status=~\\"2..\\"}[5m])) / sum(rate(http_server_requests_seconds_count{service=\\"payment-service\\"}[5m]))"

33.4 断路器与状态码处理

33.4.1 Resilience4j断路器

java

// Java Resilience4j断路器配置

@Configuration

public class CircuitBreakerConfiguration {

private final MeterRegistry meterRegistry;

private final Tracer tracer;

public CircuitBreakerConfiguration(MeterRegistry meterRegistry,

Tracer tracer) {

this.meterRegistry = meterRegistry;

this.tracer = tracer;

}

@Bean

public CircuitBreakerRegistry circuitBreakerRegistry() {

CircuitBreakerConfig config = CircuitBreakerConfig.custom()

// 基于状态码的失败判断

.recordExceptions(

HttpClientErrorException.class, // 4xx

HttpServerErrorException.class, // 5xx

TimeoutException.class,

IOException.class

)

.ignoreExceptions(

// 忽略某些特定的4xx错误,如404(资源不存在)

NotFoundException.class

)

// 滑动窗口配置

.slidingWindowType(SlidingWindowType.COUNT_BASED)

.slidingWindowSize(100)

// 失败率阈值

.failureRateThreshold(50)

// 慢调用阈值

.slowCallRateThreshold(30)

.slowCallDurationThreshold(Duration.ofSeconds(2))

// 半开状态配置

.permittedNumberOfCallsInHalfOpenState(10)

.maxWaitDurationInHalfOpenState(Duration.ofSeconds(10))

// 自动从开启状态转换

.automaticTransitionFromOpenToHalfOpenEnabled(true)

.waitDurationInOpenState(Duration.ofSeconds(30))

.build();

CircuitBreakerRegistry registry = CircuitBreakerRegistry.of(config);

// 添加指标

TaggedCircuitBreakerMetrics.ofCircuitBreakerRegistry(registry)

.bindTo(meterRegistry);

return registry;

}

@Bean

public RetryRegistry retryRegistry() {

RetryConfig config = RetryConfig.custom()

.maxAttempts(3)

.waitDuration(Duration.ofMillis(500))

.intervalFunction(IntervalFunction.ofExponentialBackoff())

.retryOnException(e -> {

// 只在特定状态码下重试

if (e instanceof HttpClientErrorException) {

HttpClientErrorException ex = (HttpClientErrorException) e;

return ex.getStatusCode().is5xxServerError() ||

ex.getStatusCode() == HttpStatus.REQUEST_TIMEOUT ||

ex.getStatusCode() == HttpStatus.TOO_MANY_REQUESTS;

}

return e instanceof IOException ||

e instanceof TimeoutException;

})

.failAfterMaxAttempts(true)

.build();

return RetryRegistry.of(config);

}

@Bean

public BulkheadRegistry bulkheadRegistry() {

BulkheadConfig config = BulkheadConfig.custom()

.maxConcurrentCalls(100)

.maxWaitDuration(Duration.ofMillis(500))

.build();

return BulkheadRegistry.of(config);

}

// 状态码感知的断路器装饰器

@Bean

public RestTemplate statusCodeAwareRestTemplate(

CircuitBreakerRegistry circuitBreakerRegistry,

RetryRegistry retryRegistry,

BulkheadRegistry bulkheadRegistry) {

RestTemplate restTemplate = new RestTemplate();

// 添加拦截器

restTemplate.getInterceptors().add((request, body, execution) -> {

String serviceName = extractServiceName(request.getURI());

// 获取或创建断路器

CircuitBreaker circuitBreaker = circuitBreakerRegistry

.circuitBreaker(serviceName, serviceName);

Retry retry = retryRegistry.retry(serviceName, serviceName);

Bulkhead bulkhead = bulkheadRegistry.bulkhead(serviceName, serviceName);

// 创建追踪span

Span span = tracer.buildSpan("http_request")

.withTag("http.method", request.getMethod().name())

.withTag("http.url", request.getURI().toString())

.withTag("peer.service", serviceName)

.start();

try (Scope scope = tracer.activateSpan(span)) {

// 使用Resilience4j装饰调用

Supplier<ClientHttpResponse> supplier = () -> {

try {

return execution.execute(request, body);

} catch (IOException e) {

throw new RuntimeException(e);

}

};

// 组合 Resilience4j 装饰器

Supplier<ClientHttpResponse> decoratedSupplier = Decorators.ofSupplier(supplier)

.withCircuitBreaker(circuitBreaker)

.withRetry(retry)

.withBulkhead(bulkhead)

.decorate();

ClientHttpResponse response = decoratedSupplier.get();

// 记录状态码

span.setTag("http.status_code", response.getRawStatusCode());

if (response.getRawStatusCode() >= 400) {

span.setTag("error", true);

span.log(Map.of(

"event", "error",

"message", "HTTP error response",

"status_code", response.getRawStatusCode(),

"status_text", response.getStatusText()

));

// 根据状态码决定是否应该触发断路器

if (response.getRawStatusCode() >= 500) {

// 5xx错误应该被记录为失败

circuitBreaker.onError(

response.getRawStatusCode(),

new HttpServerErrorException(

response.getStatusCode(),

response.getStatusText()

)

);

} else if (response.getRawStatusCode() == 429) {

// 429 Too Many Requests,可能应该等待

circuitBreaker.onError(

response.getRawStatusCode(),

new HttpClientErrorException(

response.getStatusCode(),

response.getStatusText()

)

);

}

} else {

circuitBreaker.onSuccess(

response.getRawStatusCode(),

response.getStatusCode()

);

}

return response;

} catch (Exception e) {

span.setTag("error", true);

span.log(Map.of(

"event", "error",

"message", e.getMessage(),

"error.object", e.getClass().getName()

));

// 记录到断路器

circuitBreaker.onError(

-1, // 未知状态码

e

);

throw e;

} finally {

span.finish();

}

});

return restTemplate;

}

private String extractServiceName(URI uri) {

return uri.getHost();

}

}

// 状态码感知的断路器监控

@Component

public class CircuitBreakerMonitor {

private final CircuitBreakerRegistry circuitBreakerRegistry;

private final MeterRegistry meterRegistry;

private final Map<String, CircuitBreaker.State> previousStates = new ConcurrentHashMap<>();

public CircuitBreakerMonitor(CircuitBreakerRegistry circuitBreakerRegistry,

MeterRegistry meterRegistry) {

this.circuitBreakerRegistry = circuitBreakerRegistry;

this.meterRegistry = meterRegistry;

// 定期监控断路器状态

ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();

scheduler.scheduleAtFixedRate(this::monitorCircuitBreakers,

0, 10, TimeUnit.SECONDS);

}

private void monitorCircuitBreakers() {

circuitBreakerRegistry.getAllCircuitBreakers().forEach((name, cb) -> {

CircuitBreaker.Metrics metrics = cb.getMetrics();

CircuitBreaker.State currentState = cb.getState();

// 记录指标

Gauge.builder("circuitbreaker.state", cb,

circuitBreaker -> circuitBreaker.getState().getOrder())

.tag("name", name)

.register(meterRegistry);

Counter.builder("circuitbreaker.transitions")

.tag("name", name)

.tag("from", previousStates.getOrDefault(name, CircuitBreaker.State.CLOSED).name())

.tag("to", currentState.name())

.register(meterRegistry)

.increment();

// 记录基于状态码的失败统计

Map<Integer, Long> statusCodeFailures = getStatusCodeFailures(name);

statusCodeFailures.forEach((statusCode, count) -> {

Counter.builder("circuitbreaker.failures_by_status")

.tag("name", name)

.tag("status_code", String.valueOf(statusCode))

.register(meterRegistry)

.increment(count);

});

// 状态变更通知

if (previousStates.containsKey(name) &&

previousStates.get(name) != currentState) {

logStateChange(name, previousStates.get(name), currentState, metrics);

// 发送告警

if (currentState == CircuitBreaker.State.OPEN) {

sendAlert(name, "Circuit breaker OPENED", metrics);

} else if (currentState == CircuitBreaker.State.HALF_OPEN) {

sendAlert(name, "Circuit breaker HALF_OPEN", metrics);

} else if (currentState == CircuitBreaker.State.CLOSED &&

previousStates.get(name) == CircuitBreaker.State.OPEN) {

sendAlert(name, "Circuit breaker CLOSED", metrics);

}

}

previousStates.put(name, currentState);

});

}

private Map<Integer, Long> getStatusCodeFailures(String circuitBreakerName) {

// 这里应该从断路器的事件流中提取基于状态码的失败统计

// 实际实现可能需要自定义事件处理器

return new HashMap<>();

}

private void logStateChange(String name, CircuitBreaker.State from,

CircuitBreaker.State to, CircuitBreaker.Metrics metrics) {

logger.info("Circuit breaker state changed: {} {} -> {} (failureRate: {}%)",

name, from, to,

metrics.getFailureRate());

}

private void sendAlert(String circuitBreakerName, String message,

CircuitBreaker.Metrics metrics) {

// 发送告警到监控系统

Map<String, Object> alert = Map.of(

"circuit_breaker", circuitBreakerName,

"message", message,

"failure_rate", metrics.getFailureRate(),

"slow_call_rate", metrics.getSlowCallRate(),

"timestamp", Instant.now().toString()

);

// 发送到监控系统

// monitoringService.sendAlert(alert);

}

}

33.5 API网关中的状态码处理

33.5.1 Kong网关配置

yaml

# kong-status-code-handling.yaml

_format_version: "2.1"

_transform: true

# 服务定义

services:

– name: order-service

url: http://order-service:8080

routes:

– name: order-routes

paths:

– /orders

– /orders/

strip_path: true

# 插件配置

plugins:

# 1. 请求转换插件

– name: request-transformer

config:

add:

headers:

– "X-Request-ID:$ {request_id}"

– "X-Client-IP:$ {real_ip_remote_addr}"

– "X-Forwarded-For:$ {proxy_add_x_forwarded_for}"

# 2. 响应转换插件

– name: response-transformer

config:

add:

headers:

– "X-Service-Name:order-service"

– "X-Response-Time:$ {latency}"

remove:

headers:

– "Server"

– "X-Powered-By"

# 3. 状态码重写插件

– name: status-code-rewrite

enabled: true

config:

rules:

# 将特定的后端错误转换为标准错误

– backend_status: 502

gateway_status: 503

message: "Service temporarily unavailable"

body: '{"error":{"code":"SERVICE_UNAVAILABLE","message":"The service is temporarily unavailable"}}'

– backend_status: 504

gateway_status: 503

message: "Service timeout"

body: '{"error":{"code":"TIMEOUT","message":"The service did not respond in time"}}'

# 隐藏内部错误详情

– backend_status: 500

gateway_status: 500

message: "Internal server error"

body: '{"error":{"code":"INTERNAL_ERROR","message":"An internal server error occurred"}}'

hide_details: true

# 4. 断路器插件

– name: circuit-breaker

config:

window_size: 60

window_type: sliding

failure_threshold: 5

unhealthy:

http_statuses:

– 500

– 502

– 503

– 504

tcp_failures: 2

timeouts: 3

healthy:

http_statuses:

– 200

– 201

– 202

successes: 1

healthcheck:

active:

type: http

http_path: /health

timeout: 5

concurrency: 10

healthy:

interval: 30

http_statuses:

– 200

– 302

successes: 2

unhealthy:

interval: 30

http_statuses:

– 429

– 500

– 503

tcp_failures: 2

timeouts: 3

http_failures: 2

passive:

type: http

healthy:

http_statuses:

– 200

– 201

– 202

– 203

– 204

– 205

– 206

– 207

– 208

– 226

successes: 5

unhealthy:

http_statuses:

– 500

– 502

– 503

– 504

tcp_failures: 2

timeouts: 7

http_failures: 5

# 全局插件

plugins:

– name: correlation-id

config:

header_name: X-Request-ID

generator: uuid

echo_downstream: true

– name: prometheus

config:

status_code_metrics: true

latency_metrics: true

bandwidth_metrics: true

upstream_health_metrics: true

– name: zipkin

config:

http_endpoint: http://zipkin:9411/api/v2/spans

sample_ratio: 1

include_credential: true

traceid_byte_count: 16

header_type: preserve

# 全局错误处理器

– name: error-handler

config:

default_response:

status_code: 500

content_type: application/json

body: '{"error":{"code":"INTERNAL_ERROR","message":"An unexpected error occurred"}}'

custom_responses:

– status_code: 404

content_type: application/json

body: '{"error":{"code":"NOT_FOUND","message":"The requested resource was not found"}}'

– status_code: 429

content_type: application/json

headers:

Retry-After: "60"

body: '{"error":{"code":"RATE_LIMITED","message":"Too many requests, please try again later"}}'

– status_code: 503

content_type: application/json

headers:

Retry-After: "30"

body: '{"error":{"code":"SERVICE_UNAVAILABLE","message":"Service is temporarily unavailable"}}'

# 上游健康检查

upstreams:

– name: order-service-upstream

algorithm: round-robin

healthchecks:

active:

type: http

http_path: /health

timeout: 5

healthy:

interval: 30

http_statuses:

– 200

– 302

successes: 2

unhealthy:

interval: 30

http_statuses:

– 429

– 500

– 503

tcp_failures: 2

timeouts: 3

http_failures: 2

passive:

healthy:

http_statuses:

– 200

– 201

– 202

– 203

– 204

– 205

– 206

– 207

– 208

– 226

successes: 5

unhealthy:

http_statuses:

– 500

– 502

– 503

– 504

tcp_failures: 2

timeouts: 7

http_failures: 5

targets:

– target: order-service-1:8080

weight: 100

– target: order-service-2:8080

weight: 100

33.5.2 Envoy代理配置

yaml

# envoy-status-code-config.yaml

static_resources:

listeners:

– name: api_listener

address:

socket_address:

address: 0.0.0.0

port_value: 8080

filter_chains:

– filters:

– name: envoy.filters.network.http_connection_manager

typed_config:

"@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager

stat_prefix: ingress_http

route_config:

name: local_route

virtual_hosts:

– name: api

domains: ["*"]

routes:

– match:

prefix: "/orders"

route:

cluster: order_service

timeout: 30s

retry_policy:

retry_on: "5xx,gateway-error,connect-failure,retriable-4xx"

num_retries: 3

per_try_timeout: 10s

retry_back_off:

base_interval: 0.1s

max_interval: 10s

# 状态码重写

upgrade_configs:

– upgrade_type: "websocket"

# HTTP过滤器

http_filters:

# 1. 状态码重写过滤器

– name: envoy.filters.http.status_code_rewrite

typed_config:

"@type": type.googleapis.com/envoy.extensions.filters.http.status_code_rewrite.v3.StatusCodeRewrite

rules:

– upstream_status: "502"

gateway_status: "503"

headers_to_add:

– header:

key: "X-Status-Reason"

value: "Bad Gateway converted to Service Unavailable"

– upstream_status: "504"

gateway_status: "503"

headers_to_add:

– header:

key: "X-Status-Reason"

value: "Gateway Timeout converted to Service Unavailable"

# 2. 断路器过滤器

– name: envoy.filters.http.circuit_breaker

typed_config:

"@type": type.googleapis.com/envoy.extensions.filters.http.circuit_breaker.v3.CircuitBreaker

max_connections: 1024

max_pending_requests: 1024

max_requests: 1024

max_retries: 3

track_remaining: true

# 3. 故障注入过滤器

– name: envoy.filters.http.fault

typed_config:

"@type": type.googleapis.com/envoy.extensions.filters.http.fault.v3.HTTPFault

abort:

http_status: 503

percentage:

numerator: 5 # 5%的请求会收到503错误

denominator: HUNDRED

delay:

fixed_delay: 1s

percentage:

numerator: 10 # 10%的请求会有1秒延迟

denominator: HUNDRED

# 4. 外部授权过滤器

– name: envoy.filters.http.ext_authz

typed_config:

"@type": type.googleapis.com/envoy.extensions.filters.http.ext_authz.v3.ExtAuthz

http_service:

server_uri:

uri: auth-service:9000

cluster: auth_service

timeout: 0.25s

authorization_request:

allowed_headers:

patterns:

– exact: "content-type"

– exact: "authorization"

– exact: "x-request-id"

authorization_response:

allowed_upstream_headers:

patterns:

– exact: "x-user-id"

– exact: "x-user-role"

allowed_client_headers:

patterns:

– exact: "x-auth-status"

# 5. 速率限制过滤器

– name: envoy.filters.http.ratelimit

typed_config:

"@type": type.googleapis.com/envoy.extensions.filters.http.ratelimit.v3.RateLimit

domain: api-gateway

failure_mode_deny: false

timeout: 0.05s

rate_limit_service:

grpc_service:

envoy_grpc:

cluster_name: rate_limit_service

# 6. 路由器过滤器(必须最后一个)

– name: envoy.filters.http.router

typed_config:

"@type": type.googleapis.com/envoy.extensions.filters.http.router.v3.Router

suppress_envoy_headers: true

start_child_span: true

# 访问日志

access_log:

– name: envoy.access_loggers.file

typed_config:

"@type": type.googleapis.com/envoy.extensions.access_loggers.file.v3.FileAccessLog

path: /dev/stdout

log_format:

json_format:

timestamp: "%START_TIME%"

request_id: "%REQ(X-REQUEST-ID)%"

client_ip: "%DOWNSTREAM_REMOTE_ADDRESS%"

method: "%REQ(:METHOD)%"

path: "%REQ(X-ENVOY-ORIGINAL-PATH?:PATH)%"

protocol: "%PROTOCOL%"

response_code: "%RESPONSE_CODE%"

response_flags: "%RESPONSE_FLAGS%"

bytes_received: "%BYTES_RECEIVED%"

bytes_sent: "%BYTES_SENT%"

duration: "%DURATION%"

upstream_service_time: "%RESP(X-ENVOY-UPSTREAM-SERVICE-TIME)%"

upstream_host: "%UPSTREAM_HOST%"

upstream_cluster: "%UPSTREAM_CLUSTER%"

upstream_local_address: "%UPSTREAM_LOCAL_ADDRESS%"

downstream_local_address: "%DOWNSTREAM_LOCAL_ADDRESS%"

downstream_remote_address: "%DOWNSTREAM_REMOTE_ADDRESS%"

requested_server_name: "%REQUESTED_SERVER_NAME%"

route_name: "%ROUTE_NAME%"

# 追踪配置

tracing:

provider:

name: envoy.tracers.zipkin

typed_config:

"@type": type.googleapis.com/envoy.config.trace.v3.ZipkinConfig

collector_cluster: zipkin

collector_endpoint: "/api/v2/spans"

collector_endpoint_version: HTTP_JSON

shared_span_context: false

trace_id_128bit: true

# 集群定义

clusters:

– name: order_service

type: STRICT_DNS

lb_policy: ROUND_ROBIN

load_assignment:

cluster_name: order_service

endpoints:

– lb_endpoints:

– endpoint:

address:

socket_address:

address: order-service

port_value: 8080

circuit_breakers:

thresholds:

– priority: DEFAULT

max_connections: 1024

max_pending_requests: 1024

max_requests: 1024

max_retries: 3

– priority: HIGH

max_connections: 2048

max_pending_requests: 2048

max_requests: 2048

max_retries: 3

outlier_detection:

consecutive_5xx: 10

interval: 30s

base_ejection_time: 30s

max_ejection_percent: 50

health_checks:

– timeout: 5s

interval: 30s

unhealthy_threshold: 3

healthy_threshold: 2

http_health_check:

path: /health

expected_statuses:

start: 200

end: 299

– name: auth_service

type: STRICT_DNS

connect_timeout: 0.25s

lb_policy: ROUND_ROBIN

load_assignment:

cluster_name: auth_service

endpoints:

– lb_endpoints:

– endpoint:

address:

socket_address:

address: auth-service

port_value: 9000

– name: rate_limit_service

type: STRICT_DNS

connect_timeout: 0.05s

lb_policy: ROUND_ROBIN

http2_protocol_options: {}

load_assignment:

cluster_name: rate_limit_service

endpoints:

– lb_endpoints:

– endpoint:

address:

socket_address:

address: rate-limit-service

port_value: 8081

– name: zipkin

type: STRICT_DNS

lb_policy: ROUND_ROBIN

load_assignment:

cluster_name: zipkin

endpoints:

– lb_endpoints:

– endpoint:

address:

socket_address:

address: zipkin

port_value: 9411

# 管理接口

admin:

address:

socket_address:

address: 0.0.0.0

port_value: 9901

33.6 服务网格中的状态码传播

33.6.1 Istio服务网格配置

yaml

# istio-status-code-policies.yaml

apiVersion: networking.istio.io/v1beta1

kind: VirtualService

metadata:

name: order-service

spec:

hosts:

– order-service

http:

– match:

– uri:

prefix: /orders

route:

– destination:

host: order-service

port:

number: 8080

weight: 100

# 重试策略

retries:

attempts: 3

retryOn: "5xx,gateway-error,connect-failure,retriable-4xx"

perTryTimeout: 10s

# 超时配置

timeout: 30s

# 故障注入

fault:

abort:

percentage:

value: 0.1 # 0.1%的请求会收到503错误

httpStatus: 503

delay:

percentage:

value: 1 # 1%的请求会有100ms延迟

fixedDelay: 100ms

# 状态码重写

headers:

request:

set:

X-Request-ID: "%REQ(X-REQUEST-ID)%"

X-Client-IP: "%DOWNSTREAM_REMOTE_ADDRESS%"

response:

set:

X-Service-Version: "v1.0.0"

X-Response-Time: "%RESPONSE_DURATION%"

remove:

– "Server"

# CORS策略

corsPolicy:

allowOrigin:

– "*"

allowMethods:

– POST

– GET

– OPTIONS

– PUT

– DELETE

allowHeaders:

– content-type

– authorization

– x-request-id

maxAge: "24h"

—

apiVersion: networking.istio.io/v1beta1

kind: DestinationRule

metadata:

name: order-service

spec:

host: order-service

trafficPolicy:

# 连接池设置

connectionPool:

tcp:

maxConnections: 100

connectTimeout: 30ms

http:

http1MaxPendingRequests: 1024

http2MaxRequests: 1024

maxRequestsPerConnection: 1024

maxRetries: 3

idleTimeout: 3600s

# 负载均衡

loadBalancer:

simple: ROUND_ROBIN

# 异常检测

outlierDetection:

consecutive5xxErrors: 10

interval: 30s

baseEjectionTime: 30s

maxEjectionPercent: 50

# TLS设置

tls:

mode: ISTIO_MUTUAL

—

# 状态码监控配置

apiVersion: telemetry.istio.io/v1alpha1

kind: Telemetry

metadata:

name: status-code-metrics

spec:

metrics:

– providers:

– name: prometheus

overrides:

# 请求计数,按状态码分类

– match:

metric: REQUEST_COUNT

mode: CLIENT_AND_SERVER

tagOverrides:

response_code:

value: "%RESPONSE_CODE%"

# 请求持续时间,按状态码分类

– match:

metric: REQUEST_DURATION

mode: CLIENT_AND_SERVER

tagOverrides:

response_code:

value: "%RESPONSE_CODE%"

# 请求大小,按状态码分类

– match:

metric: REQUEST_SIZE

mode: CLIENT_AND_SERVER

tagOverrides:

response_code:

value: "%RESPONSE_CODE%"

# 响应大小,按状态码分类

– match:

metric: RESPONSE_SIZE

mode: CLIENT_AND_SERVER

tagOverrides:

response_code:

value: "%RESPONSE_CODE%"

# 自定义指标

customMetrics:

– name: error_rate_by_service

dimensions:

destination_service: "string(destination.service)"

response_code: "string(response.code)"

source_service: "string(source.service)"

value: "double(1)"

– name: latency_by_status_code

dimensions:

destination_service: "string(destination.service)"

response_code: "string(response.code)"

percentile: "string(percentile)"

value: "double(response.duration)"

—

# 状态码告警规则

apiVersion: monitoring.coreos.com/v1

kind: PrometheusRule

metadata:

name: status-code-alerts

spec:

groups:

– name: status-code-monitoring

rules:

# 高错误率告警

– alert: High5xxErrorRate

expr: |

sum(rate(istio_requests_total{

response_code=~"5..",

reporter="destination"

}[5m])) by (destination_service)

/

sum(rate(istio_requests_total{

reporter="destination"

}[5m])) by (destination_service)

> 0.05

for: 5m

labels:

severity: critical

annotations:

summary: "High 5xx error rate for {{ $labels.destination_service }}"

description: "5xx error rate is {{ $value | humanizePercentage }} for service {{ $labels.destination_service }}"

# 4xx错误率增加

– alert: High4xxErrorRate

expr: |

sum(rate(istio_requests_total{

response_code=~"4..",

reporter="destination"

}[5m])) by (destination_service)

/

sum(rate(istio_requests_total{

reporter="destination"

}[5m])) by (destination_service)

> 0.10

for: 10m

labels:

severity: warning

annotations:

summary: "High 4xx error rate for {{ $labels.destination_service }}"

description: "4xx error rate is {{ $value | humanizePercentage }} for service {{ $labels.destination_service }}"

# 服务不可用

– alert: ServiceUnavailable

expr: |

sum(rate(istio_requests_total{

response_code=~"5..",

reporter="destination"

}[5m])) by (destination_service) == 0

and

sum(rate(istio_requests_total{

reporter="destination"

}[5m])) by (destination_service) == 0

for: 2m

labels:

severity: critical

annotations:

summary: "Service {{ $labels.destination_service }} is unavailable"

description: "Service {{ $labels.destination_service }} has not received any requests in the last 2 minutes"

# 慢响应告警

– alert: SlowResponses

expr: |

histogram_quantile(0.95,

sum(rate(istio_request_duration_milliseconds_bucket{

reporter="destination"

}[5m])) by (le, destination_service)

) > 1000

for: 5m

labels:

severity: warning

annotations:

summary: "Slow responses for {{ $labels.destination_service }}"

description: "95th percentile response time is {{ $value }}ms for service {{ $labels.destination_service }}"

33.7 错误恢复与降级策略

33.7.1 多级降级策略

python

# 多级降级策略实现

from enum import Enum

from typing import Dict, Any, Optional, Callable

import time

import logging

from dataclasses import dataclass

from functools import wraps

logger = logging.getLogger(__name__)

class DegradationLevel(Enum):

NORMAL = 1 # 正常模式

DEGRADED = 2 # 降级模式

LIMITED = 3 # 限制模式

MAINTENANCE = 4 # 维护模式

FAILSAFE = 5 # 安全模式

@dataclass

class ServiceHealth:

name: str

status_code: int

response_time: float

error_rate: float

last_check: float

degradation_level: DegradationLevel = DegradationLevel.NORMAL

class MultiLevelDegradation:

def __init__(self):

self.service_health: Dict[str, ServiceHealth] = {}

self.degradation_strategies: Dict[DegradationLevel, Callable] = {}

self._setup_strategies()

def _setup_strategies(self):

"""设置各级降级策略"""

self.degradation_strategies = {

DegradationLevel.NORMAL: self._normal_strategy,

DegradationLevel.DEGRADED: self._degraded_strategy,

DegradationLevel.LIMITED: self._limited_strategy,

DegradationLevel.MAINTENANCE: self._maintenance_strategy,

DegradationLevel.FAILSAFE: self._failsafe_strategy,

}

def assess_health(self, service_name: str,

status_code: int,

response_time: float) -> DegradationLevel:

"""评估服务健康状况并确定降级级别"""

if service_name not in self.service_health:

self.service_health[service_name] = ServiceHealth(

name=service_name,

status_code=status_code,

response_time=response_time,

error_rate=0.0,

last_check=time.time()

)

health = self.service_health[service_name]

# 更新健康指标

is_error = status_code >= 400

error_count = 1 if is_error else 0

total_count = 1

# 计算滑动窗口错误率(简化实现)

window_size = 100

health.error_rate = (

health.error_rate * (window_size – 1) + error_count

) / window_size

health.status_code = status_code

health.response_time = response_time

health.last_check = time.time()

# 确定降级级别

if status_code == 503:

return DegradationLevel.MAINTENANCE

elif status_code >= 500:

if health.error_rate > 0.5:

return DegradationLevel.FAILSAFE

elif health.error_rate > 0.2:

return DegradationLevel.LIMITED

else:

return DegradationLevel.DEGRADED

elif status_code == 429: # Rate limited

return DegradationLevel.LIMITED

elif status_code >= 400:

if health.error_rate > 0.3:

return DegradationLevel.DEGRADED

else:

return DegradationLevel.NORMAL

elif response_time > 5.0: # 5秒超时

return DegradationLevel.DEGRADED

elif response_time > 2.0: # 2秒延迟

if health.error_rate > 0.1:

return DegradationLevel.DEGRADED

else:

return DegradationLevel.NORMAL

else:

return DegradationLevel.NORMAL

def apply_strategy(self, service_name: str,

original_call: Callable,

*args, **kwargs) -> Any:

"""应用降级策略执行调用"""

health = self.service_health.get(service_name)

if not health:

# 首次调用,正常执行

return self._execute_with_monitoring(service_name, original_call, *args, **kwargs)

# 获取降级策略

strategy = self.degradation_strategies.get(

health.degradation_level,

self._normal_strategy

)

return strategy(service_name, original_call, *args, **kwargs)

def _execute_with_monitoring(self, service_name: str,

original_call: Callable,

*args, **kwargs) -> Any:

"""执行调用并监控结果"""

start_time = time.time()

try:

result = original_call(*args, **kwargs)

response_time = time.time() – start_time

# 假设result有status_code属性

status_code = getattr(result, 'status_code', 200)

# 评估健康状况

degradation_level = self.assess_health(

service_name, status_code, response_time

)

# 更新降级级别

if service_name in self.service_health:

self.service_health[service_name].degradation_level = degradation_level

return result

except Exception as e:

response_time = time.time() – start_time

# 根据异常类型确定状态码

status_code = self._exception_to_status_code(e)

# 评估健康状况

degradation_level = self.assess_health(

service_name, status_code, response_time

)

# 更新降级级别

if service_name in self.service_health:

self.service_health[service_name].degradation_level = degradation_level

raise

def _exception_to_status_code(self, e: Exception) -> int:

"""将异常转换为状态码"""

if hasattr(e, 'status_code'):

return e.status_code

elif isinstance(e, TimeoutError):

return 504

elif isinstance(e, ConnectionError):

return 503

else:

return 500

# 各级策略实现

def _normal_strategy(self, service_name: str,

original_call: Callable,

*args, **kwargs) -> Any:

"""正常策略:完整功能"""

logger.debug(f"Normal strategy for {service_name}")

return original_call(*args, **kwargs)

def _degraded_strategy(self, service_name: str,

original_call: Callable,

*args, **kwargs) -> Any:

"""降级策略:基本功能,重试机制"""

logger.warning(f"Degraded strategy for {service_name}")

# 实现重试机制

max_retries = 2

for attempt in range(max_retries + 1):

try:

return original_call(*args, **kwargs)

except Exception as e:

if attempt == max_retries:

raise

# 指数退避

delay = 2 ** attempt

time.sleep(delay)

logger.info(f"Retry {attempt + 1} for {service_name} after {delay}s")

def _limited_strategy(self, service_name: str,

original_call: Callable,

*args, **kwargs) -> Any:

"""限制策略:简化功能,使用缓存"""

logger.warning(f"Limited strategy for {service_name}")

# 尝试使用缓存

cache_key = f"{service_name}:{str(args)}:{str(kwargs)}"

cached_result = self._get_from_cache(cache_key)

if cached_result:

logger.info(f"Using cached result for {service_name}")

return cached_result

# 有限重试

try:

result = original_call(*args, **kwargs)

self._save_to_cache(cache_key, result)

return result

except Exception as e:

# 返回降级结果

return self._get_fallback_result(service_name, *args, **kwargs)

def _maintenance_strategy(self, service_name: str,

original_call: Callable,

*args, **kwargs) -> Any:

"""维护策略:返回维护信息,不尝试调用"""

logger.error(f"Maintenance strategy for {service_name}")

# 直接返回维护信息

return {

"status": "maintenance",

"service": service_name,

"message": "Service is under maintenance",

"timestamp": time.time()

}

def _failsafe_strategy(self, service_name: str,

original_call: Callable,

*args, **kwargs) -> Any:

"""安全模式:返回默认值,保护系统"""

logger.critical(f"Failsafe strategy for {service_name}")

# 返回安全默认值

return self._get_failsafe_result(service_name, *args, **kwargs)

def _get_from_cache(self, key: str) -> Optional[Any]:

"""从缓存获取结果(简化实现)"""

# 实际实现应该使用Redis、Memcached等

return None

def _save_to_cache(self, key: str, value: Any) -> None:

"""保存结果到缓存(简化实现)"""

pass

def _get_fallback_result(self, service_name: str,

*args, **kwargs) -> Any:

"""获取降级结果"""

# 根据服务类型返回不同的降级结果

if "user" in service_name:

return {

"status": "degraded",

"user": {"id": "unknown", "name": "Guest"},

"message": "User service is degraded"

}

elif "order" in service_name:

return {

"status": "degraded",

"order": {"status": "processing"},

"message": "Order service is degraded"

}

else:

return {

"status": "degraded",

"message": f"Service {service_name} is degraded"

}

def _get_failsafe_result(self, service_name: str,

*args, **kwargs) -> Any:

"""获取安全模式结果"""

return {

"status": "failsafe",

"service": service_name,

"message": "Service is in failsafe mode",

"timestamp": time.time()

}

# 使用装饰器实现

def degradation_aware(service_name: str):

"""降级感知装饰器"""

degradation_manager = MultiLevelDegradation()

def decorator(func):

@wraps(func)

def wrapper(*args, **kwargs):

return degradation_manager.apply_strategy(

service_name, func, *args, **kwargs

)

return wrapper

return decorator

# 使用示例

class UserService:

@degradation_aware("user-service")

def get_user_profile(self, user_id: str):

# 调用实际的用户服务

response = self._call_user_service(f"/users/{user_id}")

return response

def _call_user_service(self, endpoint: str):

# 模拟HTTP调用

# 实际实现应该使用HTTP客户端

pass

class OrderService:

@degradation_aware("order-service")

def create_order(self, order_data: Dict) -> Dict:

# 调用订单服务

response = self._call_order_service("/orders", order_data)

return response

@degradation_aware("inventory-service")

def check_inventory(self, product_id: str) -> Dict:

# 调用库存服务

response = self._call_inventory_service(f"/inventory/{product_id}")

return response

def _call_order_service(self, endpoint: str, data: Dict):

pass

def _call_inventory_service(self, endpoint: str):

pass

33.8 监控与告警

33.8.1 Prometheus状态码监控

yaml

# prometheus-status-code-rules.yaml

global:

scrape_interval: 15s

evaluation_interval: 15s

scrape_configs:

– job_name: 'api-gateway'

static_configs:

– targets: ['api-gateway:9090']

metrics_path: '/metrics'

– job_name: 'order-service'

static_configs:

– targets: ['order-service:8080']

metrics_path: '/actuator/prometheus'

– job_name: 'user-service'

static_configs:

– targets: ['user-service:8080']

metrics_path: '/actuator/prometheus'

– job_name: 'payment-service'

static_configs:

– targets: ['payment-service:8080']

metrics_path: '/metrics'

# 记录规则

rule_files:

– "status-code-rules.yml"

# 告警规则

alerting:

alertmanagers:

– static_configs:

– targets: ['alertmanager:9093']

# 状态码规则文件(status-code-rules.yml)

groups:

– name: status_code_recording_rules

interval: 30s

rules:

# 按服务统计状态码

– record: http_requests_total:rate5m

expr: |

sum(rate(http_requests_total[5m])) by (service, status_code)

# 错误率

– record: http_error_rate:rate5m

expr: |

sum(rate(http_requests_total{status_code=~"4..|5.."}[5m])) by (service)

/

sum(rate(http_requests_total[5m])) by (service)

# 5xx错误率

– record: http_5xx_error_rate:rate5m

expr: |

sum(rate(http_requests_total{status_code=~"5.."}[5m])) by (service)

/

sum(rate(http_requests_total[5m])) by (service)

# 4xx错误率

– record: http_4xx_error_rate:rate5m

expr: |

sum(rate(http_requests_total{status_code=~"4.."}[5m])) by (service)

/

sum(rate(http_requests_total[5m])) by (service)

# 按状态码分类的响应时间百分位

– record: http_response_time_percentile:status

expr: |

histogram_quantile(0.95,

sum(rate(http_request_duration_seconds_bucket[5m])) by (le, service, status_code)

)

# 服务依赖健康度

– record: service_dependency_health

expr: |

(

sum(rate(http_requests_total{status_code=~"2.."}[5m])) by (caller, target)

/

sum(rate(http_requests_total[5m])) by (caller, target)

) * 100

# 错误传播链

– record: error_propagation_chain

expr: |

count by (error_chain) (

http_requests_total{status_code=~"4..|5.."}

)

– name: status_code_alerts

rules:

# 全局错误率告警

– alert: GlobalHighErrorRate

expr: |

sum(rate(http_requests_total{status_code=~"4..|5.."}[5m]))

/

sum(rate(http_requests_total[5m]))

> 0.05

for: 5m

labels:

severity: critical

team: platform

annotations:

summary: "Global error rate is high"

description: "Global error rate is {{ $value | humanizePercentage }}"

# 服务级错误率告警

– alert: ServiceHighErrorRate

expr: |

http_error_rate:rate5m > 0.10

for: 5m

labels:

severity: warning

annotations:

summary: "High error rate for service {{ $labels.service }}"

description: "Error rate is {{ $value | humanizePercentage }} for service {{ $labels.service }}"

# 5xx错误率告警

– alert: ServiceHigh5xxErrorRate

expr: |

http_5xx_error_rate:rate5m > 0.05

for: 3m

labels:

severity: critical

annotations:

summary: "High 5xx error rate for service {{ $labels.service }}"

description: "5xx error rate is {{ $value | humanizePercentage }} for service {{ $labels.service }}"

# 服务完全不可用

– alert: ServiceCompletelyUnavailable

expr: |

up{job=~".*"} == 0

for: 2m

labels:

severity: critical

annotations:

summary: "Service {{ $labels.job }} is completely unavailable"

description: "Service {{ $labels.job }} has been down for more than 2 minutes"

# 错误传播链检测

– alert: ErrorPropagationDetected

expr: |

increase(error_propagation_chain[5m]) > 10

for: 2m

labels:

severity: warning

annotations:

summary: "Error propagation detected in the system"

description: "Error chain {{ $labels.error_chain }} has propagated {{ $value }} times in the last 5 minutes"

# 慢响应告警

– alert: SlowServiceResponses

expr: |

http_response_time_percentile:status > 2

for: 5m

labels:

severity: warning

annotations:

summary: "Slow responses for service {{ $labels.service }}"

description: "95th percentile response time is {{ $value }}s for status code {{ $labels.status_code }} in service {{ $labels.service }}"

# 依赖服务健康度下降

– alert: ServiceDependencyDegraded

expr: |

service_dependency_health < 95

for: 10m

labels:

severity: warning

annotations:

summary: "Service dependency health is degraded"

description: "Dependency from {{ $labels.caller }} to {{ $labels.target }} has health of {{ $value }}%"

33.9 总结

33.9.1 微服务状态码传播最佳实践

透明传播与封装平衡

-

内部错误细节不应该直接暴露给客户端

-

但需要足够的上下文进行故障诊断

-

使用标准化的错误格式(如RFC 7807)

分布式追踪集成

-

所有服务调用都应该有唯一的追踪ID

-

状态码应该作为span标签记录

-

错误传播链应该在追踪中可视化

智能断路器策略

-

基于状态码的失败检测

-

不同状态码应该有不同的重试策略

-

断路器状态应该被监控和告警

多级降级策略

-

根据错误类型和频率实施不同级别的降级

-

降级策略应该是可配置的

-

降级状态应该被监控和报告

统一的监控告警

-

所有服务应该暴露标准化的指标

-

状态码分布应该被实时监控

-

基于状态码的告警应该及时准确

33.9.2 架构模式总结

通过本章的学习,我们深入了解了微服务架构中状态码传播的复杂性和重要性。在分布式系统中,状态码不仅仅是单个服务的响应,而是整个调用链健康状况的体现。合理的状态码传播策略、智能的断路器模式、完善的监控告警系统,都是构建可靠微服务架构的关键组成部分。

第33章要点总结:

微服务状态码传播需要考虑调用链上下文

分布式追踪是理解错误传播的关键工具

断路器应该基于状态码智能决策

API网关在状态码转换中起重要作用

多级降级策略可以提高系统韧性

统一的监控告警体系是运维的基础

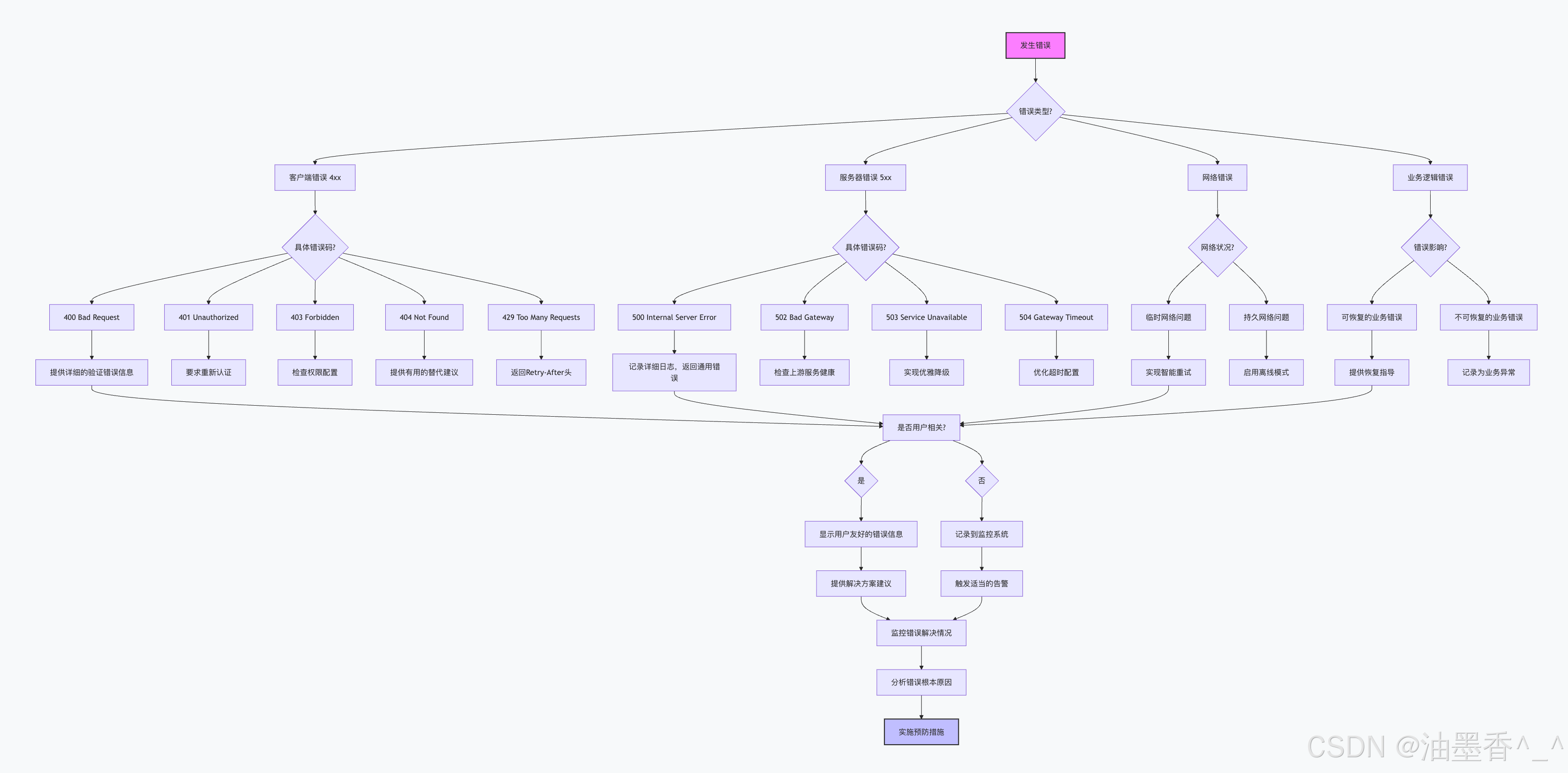

第34章:状态码与错误处理策略

34.1 错误处理哲学与原则

34.1.1 错误处理的三种范式

在软件工程中,错误处理有三种主要范式,每种范式对状态码的使用有着不同的理解:

python

# 错误处理的三种范式示例

from typing import Union, Optional

from dataclasses import dataclass

# 1. 返回码范式(C语言风格)

class ReturnCodeParadigm:

"""通过返回值表示成功或失败"""

def divide(self, a: float, b: float) -> Union[float, int]:

"""返回错误码而不是抛出异常"""

if b == 0:

return -1 # 错误码

return a / b

def process(self):

result = self.divide(10, 0)

if result == -1:

print("Division by zero error")

else:

print(f"Result: {result}")

# 2. 异常范式(Java/Python风格)

class ExceptionParadigm:

"""通过抛出异常表示错误"""

class DivisionByZeroError(Exception):

pass

def divide(self, a: float, b: float) -> float:

"""抛出异常而不是返回错误码"""

if b == 0:

raise self.DivisionByZeroError("Cannot divide by zero")

return a / b

def process(self):

try:

result = self.divide(10, 0)

print(f"Result: {result}")

except self.DivisionByZeroError as e:

print(f"Error: {e}")

# 3. 结果类型范式(函数式风格)

@dataclass

class Result[T, E]:

"""包含成功值或错误值的容器类型"""

value: Optional[T] = None

error: Optional[E] = None

is_success: bool = True

@classmethod

def success(cls, value: T) -> 'Result[T, E]':

return cls(value=value, is_success=True)

@classmethod

def failure(cls, error: E) -> 'Result[T, E]':

return cls(error=error, is_success=False)

def unwrap(self) -> T:

if not self.is_success:

raise ValueError(f"Result contains error: {self.error}")

return self.value

class ResultParadigm:

"""使用Result类型包装可能失败的操作"""

def divide(self, a: float, b: float) -> Result[float, str]:

"""返回包含结果或错误的Result对象"""

if b == 0:

return Result.failure("Division by zero")

return Result.success(a / b)

def process(self):

result = self.divide(10, 0)

if result.is_success:

print(f"Result: {result.unwrap()}")

else:

print(f"Error: {result.error}")

34.1.2 错误处理核心原则

java

// 错误处理的核心原则实现

public class ErrorHandlingPrinciples {

// 原则1: 快速失败(Fail Fast)

public static class FailFastPrinciple {

public User validateAndCreate(String email, String password) {

// 尽早验证,尽早失败

if (email == null || email.isEmpty()) {

throw new ValidationException("Email is required");

}

if (!isValidEmail(email)) {

throw new ValidationException("Invalid email format");

}

if (password == null || password.length() < 8) {

throw new ValidationException("Password must be at least 8 characters");

}

// 所有验证通过后才执行业务逻辑

return new User(email, password);

}

}

// 原则2: 明确错误类型(Be Specific)

public static class SpecificErrorPrinciple {

public enum ErrorType {

USER_NOT_FOUND,

INSUFFICIENT_PERMISSIONS,

INVALID_INPUT,

NETWORK_ERROR,

DATABASE_ERROR

}

@Data

public static class SpecificError {

private final ErrorType type;

private final String message;

private final Map<String, Object> context;

private final Instant timestamp;

public SpecificError(ErrorType type, String message,

Map<String, Object> context) {

this.type = type;

this.message = message;

this.context = context;

this.timestamp = Instant.now();

}

public HttpStatus toHttpStatus() {

switch (type) {

case USER_NOT_FOUND:

return HttpStatus.NOT_FOUND;

case INSUFFICIENT_PERMISSIONS:

return HttpStatus.FORBIDDEN;

case INVALID_INPUT:

return HttpStatus.BAD_REQUEST;

case NETWORK_ERROR:

case DATABASE_ERROR:

return HttpStatus.INTERNAL_SERVER_ERROR;

default:

return HttpStatus.INTERNAL_SERVER_ERROR;

}

}

}

}

// 原则3: 可恢复性设计(Design for Recovery)

public static class RecoveryDesignPrinciple {

private final RetryTemplate retryTemplate;

private final CircuitBreaker circuitBreaker;

public RecoveryDesignPrinciple() {

this.retryTemplate = new RetryTemplate();

this.circuitBreaker = new CircuitBreaker();

}

public Result<Order> placeOrderWithRecovery(OrderRequest request) {

return circuitBreaker.executeSupplier(() -> {

return retryTemplate.execute(context -> {

try {

return Result.success(placeOrder(request));

} catch (TemporaryFailureException e) {

// 记录重试

context.setAttribute("retry_count",

context.getRetryCount() + 1);

throw e;

}

});

});

}

private Order placeOrder(OrderRequest request) {

// 模拟可能失败的操作

return new Order();

}

}

// 原则4: 错误隔离(Error Isolation)

public static class ErrorIsolationPrinciple {

private final ExecutorService isolatedExecutor;

public ErrorIsolationPrinciple() {

// 创建独立的线程池来隔离可能失败的操作

this.isolatedExecutor = Executors.newFixedThreadPool(3,

new ThreadFactoryBuilder()

.setNameFormat("isolated-task-%d")

.setUncaughtExceptionHandler((t, e) -> {

// 隔离的异常处理,不会影响主线程

logIsolatedError(t.getName(), e);

})

.build());

}

public CompletableFuture<Void> executeIsolated(Runnable task) {

return CompletableFuture.runAsync(task, isolatedExecutor)

.exceptionally(throwable -> {

// 处理异常,但不会传播到调用者

logIsolatedError("isolated-task", throwable);

return null;

});

}

private void logIsolatedError(String taskName, Throwable throwable) {

System.err.printf("Isolated error in %s: %s%n",

taskName, throwable.getMessage());

}

}

// 原则5: 优雅降级(Graceful Degradation)

public static class GracefulDegradationPrinciple {

private final CacheService cacheService;

private final FallbackService fallbackService;

public GracefulDegradationPrinciple() {

this.cacheService = new CacheService();

this.fallbackService = new FallbackService();

}

public Product getProduct(String productId) {

try {

// 1. 首先尝试主服务

return productService.getProduct(productId);

} catch (ServiceUnavailableException e) {

// 2. 主服务失败,尝试缓存

Product cached = cacheService.getProduct(productId);

if (cached != null) {

return cached;

}

// 3. 缓存也没有,使用降级服务

return fallbackService.getBasicProductInfo(productId);

}

}

}

}

34.2 状态码驱动的错误分类

34.2.1 错误分类体系

typescript

// 基于状态码的错误分类体系

type ErrorCategory =

| 'CLIENT_ERROR' // 4xx 错误

| 'SERVER_ERROR' // 5xx 错误

| 'NETWORK_ERROR' // 网络相关错误

| 'VALIDATION_ERROR' // 验证错误

| 'BUSINESS_ERROR' // 业务逻辑错误

| 'SECURITY_ERROR' // 安全相关错误

| 'INTEGRATION_ERROR' // 集成错误

| 'TIMEOUT_ERROR'; // 超时错误

interface ErrorClassification {

category: ErrorCategory;

severity: 'LOW' | 'MEDIUM' | 'HIGH' | 'CRITICAL';

retryable: boolean;

userFacing: boolean;

logLevel: 'DEBUG' | 'INFO' | 'WARN' | 'ERROR' | 'FATAL';

suggestedAction: string;

}

class StatusCodeClassifier {

private static readonly CLASSIFICATION_MAP: Map<number, ErrorClassification> = new Map([

// 4xx 错误分类

[400, {

category: 'CLIENT_ERROR',

severity: 'MEDIUM',

retryable: false,

userFacing: true,

logLevel: 'WARN',

suggestedAction: 'Fix request parameters and retry'

}],

[401, {

category: 'SECURITY_ERROR',

severity: 'MEDIUM',

retryable: true,

userFacing: true,

logLevel: 'WARN',

suggestedAction: 'Authenticate and retry with valid credentials'

}],

[403, {

category: 'SECURITY_ERROR',

severity: 'MEDIUM',

retryable: false,

userFacing: true,

logLevel: 'WARN',

suggestedAction: 'Request appropriate permissions'

}],

[404, {

category: 'CLIENT_ERROR',

severity: 'LOW',

retryable: false,

userFacing: true,

logLevel: 'DEBUG',

suggestedAction: 'Check resource identifier and retry'

}],

[409, {

category: 'BUSINESS_ERROR',

severity: 'MEDIUM',

retryable: true,

userFacing: true,

logLevel: 'WARN',

suggestedAction: 'Resolve conflict and retry'

}],

[422, {

category: 'VALIDATION_ERROR',

severity: 'MEDIUM',

retryable: false,

userFacing: true,

logLevel: 'WARN',

suggestedAction: 'Fix validation errors and retry'

}],

[429, {

category: 'CLIENT_ERROR',

severity: 'MEDIUM',

retryable: true,

userFacing: true,

logLevel: 'WARN',

suggestedAction: 'Wait and retry after rate limit resets'

}],

// 5xx 错误分类

[500, {

category: 'SERVER_ERROR',

severity: 'HIGH',

retryable: true,

userFacing: false,

logLevel: 'ERROR',

suggestedAction: 'Retry after some time or contact support'

}],

[502, {

category: 'INTEGRATION_ERROR',

severity: 'HIGH',

retryable: true,

userFacing: false,

logLevel: 'ERROR',

suggestedAction: 'Retry after upstream service recovers'

}],

[503, {

category: 'SERVER_ERROR',

severity: 'HIGH',

retryable: true,

userFacing: true,

logLevel: 'ERROR',

suggestedAction: 'Retry after service maintenance completes'

}],

[504, {

category: 'TIMEOUT_ERROR',

severity: 'MEDIUM',

retryable: true,

userFacing: false,

logLevel: 'WARN',

suggestedAction: 'Retry with longer timeout or check network'

}]

]);

static classify(statusCode: number): ErrorClassification {

const classification = this.CLASSIFICATION_MAP.get(statusCode);

if (!classification) {

// 默认分类

if (statusCode >= 400 && statusCode < 500) {

return {

category: 'CLIENT_ERROR',

severity: 'MEDIUM',

retryable: false,

userFacing: true,

logLevel: 'WARN',

suggestedAction: 'Review request and retry'

};

} else if (statusCode >= 500) {

return {

category: 'SERVER_ERROR',

severity: 'HIGH',

retryable: true,

userFacing: false,

logLevel: 'ERROR',

suggestedAction: 'Retry after some time'

};

}

throw new Error(`Unsupported status code: ${statusCode}`);

}

return classification;

}

static getErrorResponse(statusCode: number, errorDetails?: any): ErrorResponse {

const classification = this.classify(statusCode);

return {

statusCode,

error: {

code: `HTTP_${statusCode}`,

message: this.getDefaultMessage(statusCode),

category: classification.category,

severity: classification.severity,

retryable: classification.retryable,

timestamp: new Date().toISOString(),

details: errorDetails,

suggestedAction: classification.suggestedAction

}

};

}

private static getDefaultMessage(statusCode: number): string {

const messages: Record<number, string> = {

400: 'Bad Request',

401: 'Unauthorized',

403: 'Forbidden',

404: 'Not Found',

409: 'Conflict',

422: 'Unprocessable Entity',

429: 'Too Many Requests',

500: 'Internal Server Error',

502: 'Bad Gateway',

503: 'Service Unavailable',