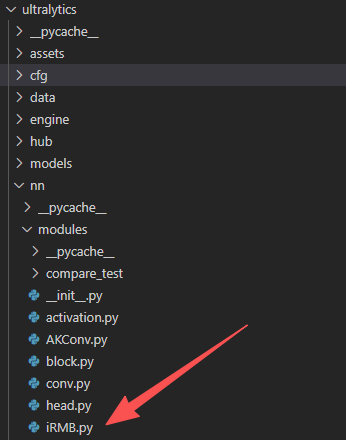

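An Introduction to the iRMB Inverted Residual Block Attention Mechanism

iRMB (Inverted Residual Mobile Block) combines a lightweight CNN design with an attention mechanism and is used here to improve object detection models on mobile devices. It builds on the inverted residual block and the Meta Mobile Block to process information efficiently while keeping the model lightweight. The original paper also proposes a new backbone network, EMO, whose core idea is to combine a lightweight CNN architecture with attention-based model structures.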

Contents

1. Introduction

2. Main idea of iRMB

3. iRMB structure

Original paper: https://arxiv.org/pdf/2301.01146

Code: https://github.com/zhangzjn/EMO

1. Introduction

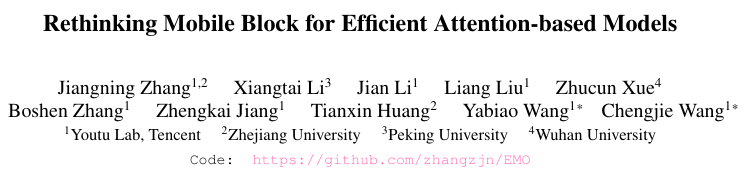

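The inverted residual block (IRB) is the basic building block of lightweight CNNs, but attention-based research has had no counterpart to it. The paper rethinks lightweight infrastructure from a unified perspective, drawing on the efficient IRB and the effective components of the Transformer. It extends the CNN-based IRB to attention-based models and abstracts a single-residual Meta Mobile Block (MMB) for lightweight model design. Following simple but effective design criteria, the authors derive a modernized Inverted Residual Mobile Block (iRMB) and use it to build a ResNet-like efficient model (EMO) for downstream tasks.

2. Main idea of iRMB

The main idea of iRMB (Inverted Residual Mobile Block) is to combine a lightweight CNN architecture with attention-based model structures (a benefit similar to that of ACmix) in order to build efficient mobile networks. By rethinking the inverted residual block (IRB) and the effective components of the Transformer from a unified perspective, iRMB extends the CNN IRB to attention-based models. Its design goal is to use compute resources efficiently and reach high accuracy while keeping the model lightweight. The approach is validated by extensive experiments on downstream tasks, where it performs strongly among lightweight models.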

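The main contributions of iRMB are the following three points:

It combines the lightweight nature of CNNs with the dynamic modeling capability of the Transformer in a new iRMB structure suited to dense prediction tasks on mobile devices.

It uses an inverted residual design that extends the traditional CNN IRB to attention-based models, strengthening the model's ability to handle long-range information.

It introduces the Meta Mobile Block, which achieves a modular design through different expansion ratios and efficient operators, making the model more flexible and efficient.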
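The Meta Mobile Block from the last point can be written compactly. The following is a sketch in notation assumed here (the symbols $\lambda$, $\mathrm{MLP}_e$, $\mathrm{MLP}_s$ and $\mathcal{F}$ are chosen for illustration and may differ from the paper's): an expansion layer with ratio $\lambda$, an efficient operator $\mathcal{F}$, a shrink layer, and a single residual connection.

```latex
\begin{aligned}
X_e &= \mathrm{MLP}_e(X),   & X   &\in \mathbb{R}^{C \times H \times W},\quad X_e \in \mathbb{R}^{\lambda C \times H \times W} \\
X_f &= \mathcal{F}(X_e),    & X_f &\in \mathbb{R}^{\lambda C \times H \times W} \\
X_s &= \mathrm{MLP}_s(X_f), & X_s &\in \mathbb{R}^{C \times H \times W} \\
Y   &= X + X_s
\end{aligned}
```

Under this reading, choosing a depthwise convolution for $\mathcal{F}$ gives the classic IRB, while iRMB uses attention followed by a depthwise convolution.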

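3. iRMB structure

The key feature of the iRMB structure is that it combines the lightweight nature of convolutional neural networks with the dynamic modeling capability of the Transformer. This makes it particularly suitable for dense prediction tasks on mobile devices, since it is designed to deliver efficient performance where compute is limited. Through its inverted residual design, iRMB improves how information flows through the block, capturing and exploiting long-range dependencies while keeping the model lightweight, which matters for tasks such as image classification, object detection and semantic segmentation. As a result the model can run efficiently on resource-constrained devices while maintaining or improving prediction accuracy.

4. iRMB module code implementation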
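As a reading aid, the computation performed by the `iRMB.forward` method in section 4 can be summarized as follows. This is a sketch derived from that code rather than from the paper; the symbols ($\hat{X}$, $W_{qk}$, $W_v$, $W_p$, $\sigma$, $d_h$) are names assumed here, and the attention is applied independently per window and per head.

```latex
\begin{aligned}
\hat{X}  &= \mathrm{Norm}(X) \\
[Q,\,K]  &= W_{qk}\,\hat{X}, \qquad V = \sigma\!\left(W_v\,\hat{X}\right) \\
A        &= \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_h}}\right) \\
X_a      &= A\,V \\
X_f      &= X_a + \mathrm{SE}\!\left(\mathrm{DWConv}(X_a)\right) \\
Y        &= X + W_p\,X_f
\end{aligned}
```

Here $d_h$ corresponds to `dim_head`, $W_{qk}$, $W_v$ and $W_p$ are the 1×1 convolutions `qk`, `v` and `proj`, and the two additions are only applied when `has_skip` is true.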

import math
from functools import partial

import torch
import torch.nn as nn
import torch.nn.functional as F
from einops import rearrange
from timm.models._efficientnet_blocks import SqueezeExcite
from timm.models.layers import DropPath

__all__ = ['iRMB', 'C2PSA_iRMB', 'C3k2_iRMB']

inplace = True  # module-level flag: use in-place activations

class LayerNorm2d(nn.Module):
    """LayerNorm over the channel dimension of an NCHW tensor."""

    def __init__(self, normalized_shape, eps=1e-6, elementwise_affine=True):
        super().__init__()
        self.norm = nn.LayerNorm(normalized_shape, eps, elementwise_affine)

    def forward(self, x):
        # nn.LayerNorm normalizes the last dimension, so move channels last and back
        x = rearrange(x, 'b c h w -> b h w c').contiguous()
        x = self.norm(x)
        x = rearrange(x, 'b h w c -> b c h w').contiguous()
        return x

def get_norm(norm_layer='in_1d'):
    eps = 1e-6
    norm_dict = {
        'none': nn.Identity,
        'in_1d': partial(nn.InstanceNorm1d, eps=eps),
        'in_2d': partial(nn.InstanceNorm2d, eps=eps),
        'in_3d': partial(nn.InstanceNorm3d, eps=eps),
        'bn_1d': partial(nn.BatchNorm1d, eps=eps),
        'bn_2d': partial(nn.BatchNorm2d, eps=eps),
        # 'bn_2d': partial(nn.SyncBatchNorm, eps=eps),
        'bn_3d': partial(nn.BatchNorm3d, eps=eps),
        'gn': partial(nn.GroupNorm, eps=eps),
        'ln_1d': partial(nn.LayerNorm, eps=eps),
        'ln_2d': partial(LayerNorm2d, eps=eps),
    }
    return norm_dict[norm_layer]

def get_act(act_layer='relu'):
    act_dict = {
        'none': nn.Identity,
        'relu': nn.ReLU,
        'relu6': nn.ReLU6,
        'silu': nn.SiLU
    }
    return act_dict[act_layer]

class ConvNormAct(nn.Module):
    """Conv2d -> norm -> activation, with an optional residual connection."""

    def __init__(self, dim_in, dim_out, kernel_size, stride=1, dilation=1, groups=1, bias=False,
                 skip=False, norm_layer='bn_2d', act_layer='relu', inplace=True, drop_path_rate=0.):
        super(ConvNormAct, self).__init__()
        self.has_skip = skip and dim_in == dim_out
        padding = math.ceil((kernel_size - stride) / 2)
        self.conv = nn.Conv2d(dim_in, dim_out, kernel_size, stride, padding, dilation, groups, bias)
        self.norm = get_norm(norm_layer)(dim_out)
        self.act = get_act(act_layer)(inplace=inplace)
        self.drop_path = DropPath(drop_path_rate) if drop_path_rate else nn.Identity()

    def forward(self, x):
        shortcut = x
        x = self.conv(x)
        x = self.norm(x)
        x = self.act(x)
        if self.has_skip:
            x = self.drop_path(x) + shortcut
        return x

class iRMB(nn.Module):

    def __init__(self, dim_in, norm_in=True, has_skip=True, exp_ratio=1.0, norm_layer='bn_2d',
                 act_layer='relu', v_proj=True, dw_ks=3, stride=1, dilation=1, se_ratio=0.0, dim_head=8, window_size=7,
                 attn_s=True, qkv_bias=False, attn_drop=0., drop=0., drop_path=0., v_group=False, attn_pre=False):
        super().__init__()
        dim_out = dim_in
        self.norm = get_norm(norm_layer)(dim_in) if norm_in else nn.Identity()
        dim_mid = int(dim_in * exp_ratio)
        self.has_skip = (dim_in == dim_out and stride == 1) and has_skip
        self.attn_s = attn_s
        if self.attn_s:
            assert dim_in % dim_head == 0, 'dim should be divisible by num_heads'
            self.dim_head = dim_head
            self.window_size = window_size
            self.num_head = dim_in // dim_head
            self.scale = self.dim_head ** -0.5
            self.attn_pre = attn_pre
            self.qk = ConvNormAct(dim_in, int(dim_in * 2), kernel_size=1, bias=qkv_bias, norm_layer='none',
                                  act_layer='none')
            self.v = ConvNormAct(dim_in, dim_mid, kernel_size=1, groups=self.num_head if v_group else 1, bias=qkv_bias,
                                 norm_layer='none', act_layer=act_layer, inplace=inplace)
            self.attn_drop = nn.Dropout(attn_drop)
        else:
            if v_proj:
                self.v = ConvNormAct(dim_in, dim_mid, kernel_size=1, bias=qkv_bias, norm_layer='none',
                                     act_layer=act_layer, inplace=inplace)
            else:
                self.v = nn.Identity()
        self.conv_local = ConvNormAct(dim_mid, dim_mid, kernel_size=dw_ks, stride=stride, dilation=dilation,
                                      groups=dim_mid, norm_layer='bn_2d', act_layer='silu', inplace=inplace)
        self.se = SqueezeExcite(dim_mid, rd_ratio=se_ratio, act_layer=get_act(act_layer)) if se_ratio > 0.0 else nn.Identity()

        self.proj_drop = nn.Dropout(drop)
        self.proj = ConvNormAct(dim_mid, dim_out, kernel_size=1, norm_layer='none', act_layer='none', inplace=inplace)
        self.drop_path = DropPath(drop_path) if drop_path else nn.Identity()

    def forward(self, x):
        shortcut = x
        x = self.norm(x)
        B, C, H, W = x.shape
        if self.attn_s:
            # padding
            if self.window_size <= 0:
                window_size_W, window_size_H = W, H
            else:
                window_size_W, window_size_H = self.window_size, self.window_size
            pad_l, pad_t = 0, 0
            pad_r = (window_size_W - W % window_size_W) % window_size_W
            pad_b = (window_size_H - H % window_size_H) % window_size_H
            x = F.pad(x, (pad_l, pad_r, pad_t, pad_b, 0, 0,))
            n1, n2 = (H + pad_b) // window_size_H, (W + pad_r) // window_size_W
            x = rearrange(x, 'b c (h1 n1) (w1 n2) -> (b n1 n2) c h1 w1', n1=n1, n2=n2).contiguous()
            # attention
            b, c, h, w = x.shape
            qk = self.qk(x)
            qk = rearrange(qk, 'b (qk heads dim_head) h w -> qk b heads (h w) dim_head', qk=2, heads=self.num_head,
                           dim_head=self.dim_head).contiguous()
            q, k = qk[0], qk[1]
            attn_spa = (q @ k.transpose(-2, -1)) * self.scale
            attn_spa = attn_spa.softmax(dim=-1)
            attn_spa = self.attn_drop(attn_spa)
            if self.attn_pre:
                x = rearrange(x, 'b (heads dim_head) h w -> b heads (h w) dim_head', heads=self.num_head).contiguous()
                x_spa = attn_spa @ x
                x_spa = rearrange(x_spa, 'b heads (h w) dim_head -> b (heads dim_head) h w', heads=self.num_head, h=h,
                                  w=w).contiguous()
                x_spa = self.v(x_spa)
            else:
                v = self.v(x)
                v = rearrange(v, 'b (heads dim_head) h w -> b heads (h w) dim_head', heads=self.num_head).contiguous()
                x_spa = attn_spa @ v
                x_spa = rearrange(x_spa, 'b heads (h w) dim_head -> b (heads dim_head) h w', heads=self.num_head, h=h,
                                  w=w).contiguous()
            # unpadding
            x = rearrange(x_spa, '(b n1 n2) c h1 w1 -> b c (h1 n1) (w1 n2)', n1=n1, n2=n2).contiguous()
            if pad_r > 0 or pad_b > 0:
                x = x[:, :, :H, :W].contiguous()
        else:
            x = self.v(x)

        x = x + self.se(self.conv_local(x)) if self.has_skip else self.se(self.conv_local(x))

        x = self.proj_drop(x)
        x = self.proj(x)

        x = (shortcut + self.drop_path(x)) if self.has_skip else x
        return x

def autopad(k, p=None, d=1):  # kernel, padding, dilation
    """Pad to 'same' shape outputs."""
    if d > 1:
        k = d * (k - 1) + 1 if isinstance(k, int) else [d * (x - 1) + 1 for x in k]  # actual kernel-size
    if p is None:
        p = k // 2 if isinstance(k, int) else [x // 2 for x in k]  # auto-pad
    return p

class Conv(nn.Module):
    """Standard convolution with args(ch_in, ch_out, kernel, stride, padding, groups, dilation, activation)."""

    default_act = nn.SiLU()  # default activation

    def __init__(self, c1, c2, k=1, s=1, p=None, g=1, d=1, act=True):
        """Initialize Conv layer with given arguments including activation."""
        super().__init__()
        self.conv = nn.Conv2d(c1, c2, k, s, autopad(k, p, d), groups=g, dilation=d, bias=False)
        self.bn = nn.BatchNorm2d(c2)
        self.act = self.default_act if act is True else act if isinstance(act, nn.Module) else nn.Identity()

    def forward(self, x):
        """Apply convolution, batch normalization and activation to input tensor."""
        return self.act(self.bn(self.conv(x)))

    def forward_fuse(self, x):
        """Apply convolution and activation only, skipping batch normalization (for fused conv+BN weights)."""
        return self.act(self.conv(x))

class PSABlock(nn.Module):
    """
    PSABlock variant that uses iRMB as its attention module.

    This class applies an iRMB attention block followed by a feed-forward network,
    with optional shortcut connections.

    Attributes:
        attn (iRMB): iRMB attention module (used here in place of the stock Attention module).
        ffn (nn.Sequential): Feed-forward neural network module.
        add (bool): Flag indicating whether to add shortcut connections.

    Methods:
        forward: Performs a forward pass through the PSABlock, applying attention and feed-forward layers.

    Notes:
        attn_ratio and num_heads are accepted for interface compatibility but are not used by iRMB.

    Examples:
        Create a PSABlock and perform a forward pass
        >>> psablock = PSABlock(c=128, attn_ratio=0.5, num_heads=4, shortcut=True)
        >>> input_tensor = torch.randn(1, 128, 32, 32)
        >>> output_tensor = psablock(input_tensor)
    """

    def __init__(self, c, attn_ratio=0.5, num_heads=4, shortcut=True) -> None:
        """Initializes the PSABlock with attention and feed-forward layers for enhanced feature extraction."""
        super().__init__()
        self.attn = iRMB(c)
        self.ffn = nn.Sequential(Conv(c, c * 2, 1), Conv(c * 2, c, 1, act=False))
        self.add = shortcut

    def forward(self, x):
        """Executes a forward pass through PSABlock, applying attention and feed-forward layers to the input tensor."""
        x = x + self.attn(x) if self.add else self.attn(x)
        x = x + self.ffn(x) if self.add else self.ffn(x)
        return x

class C2PSA_iRMB(nn.Module):
    """
    C2PSA module with attention mechanism for enhanced feature extraction and processing.

    This module implements a convolutional block with attention mechanisms to enhance feature extraction and processing
    capabilities. It includes a series of PSABlock modules (here built on iRMB) for attention and feed-forward operations.

    Attributes:
        c (int): Number of hidden channels.
        cv1 (Conv): 1x1 convolution layer mapping the c1 input channels to 2*c.
        cv2 (Conv): 1x1 convolution layer mapping the 2*c concatenated channels back to c1.
        m (nn.Sequential): Sequential container of PSABlock modules for attention and feed-forward operations.

    Methods:
        forward: Performs a forward pass through the C2PSA module, applying attention and feed-forward operations.

    Notes:
        This module essentially is the same as PSA module, but refactored to allow stacking more PSABlock modules.

    Examples:
        >>> c2psa = C2PSA_iRMB(c1=256, c2=256, n=3, e=0.5)
        >>> input_tensor = torch.randn(1, 256, 64, 64)
        >>> output_tensor = c2psa(input_tensor)
    """

    def __init__(self, c1, c2, n=1, e=0.5):
        """Initializes the C2PSA module with specified input/output channels, number of layers, and expansion ratio."""
        super().__init__()
        assert c1 == c2
        self.c = int(c1 * e)
        self.cv1 = Conv(c1, 2 * self.c, 1, 1)
        self.cv2 = Conv(2 * self.c, c1, 1)
        self.m = nn.Sequential(*(PSABlock(self.c, attn_ratio=0.5, num_heads=self.c // 64) for _ in range(n)))

    def forward(self, x):
        """Processes the input tensor 'x' through a series of PSA blocks and returns the transformed tensor."""
        a, b = self.cv1(x).split((self.c, self.c), dim=1)
        b = self.m(b)
        return self.cv2(torch.cat((a, b), 1))

class Bottleneck(nn.Module):
    """Standard bottleneck."""

    def __init__(self, c1, c2, shortcut=True, g=1, k=(3, 3), e=0.5):
        """Initializes a standard bottleneck module with optional shortcut connection and configurable parameters."""
        super().__init__()
        c_ = int(c2 * e)  # hidden channels
        self.cv1 = Conv(c1, c_, k[0], 1)
        self.cv2 = Conv(c_, c2, k[1], 1, g=g)
        self.add = shortcut and c1 == c2

    def forward(self, x):
        """Apply both convolutions, adding the input back when the shortcut is enabled."""
        return x + self.cv2(self.cv1(x)) if self.add else self.cv2(self.cv1(x))


class C3(nn.Module):
    """CSP Bottleneck with 3 convolutions."""

    def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5):
        """Initialize the CSP Bottleneck with given channels, number, shortcut, groups, and expansion values."""
        super().__init__()
        c_ = int(c2 * e)  # hidden channels
        self.cv1 = Conv(c1, c_, 1, 1)
        self.cv2 = Conv(c1, c_, 1, 1)
        self.cv3 = Conv(2 * c_, c2, 1)  # optional act=FReLU(c2)
        self.m = nn.Sequential(*(Bottleneck(c_, c_, shortcut, g, k=((1, 1), (3, 3)), e=1.0) for _ in range(n)))

    def forward(self, x):
        """Forward pass through the CSP bottleneck with 3 convolutions."""
        return self.cv3(torch.cat((self.m(self.cv1(x)), self.cv2(x)), 1))


class C3k(C3):
    """C3k is a CSP bottleneck module with customizable kernel sizes for feature extraction in neural networks."""

    def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5, k=3):
        """Initializes the C3k module with specified channels, number of layers, and configurations."""
        super().__init__(c1, c2, n, shortcut, g, e)
        c_ = int(c2 * e)  # hidden channels
        # self.m = nn.Sequential(*(RepBottleneck(c_, c_, shortcut, g, k=(k, k), e=1.0) for _ in range(n)))
        self.m = nn.Sequential(*(Bottleneck(c_, c_, shortcut, g, k=(k, k), e=1.0) for _ in range(n)))

class C2f(nn.Module):
    """Faster Implementation of CSP Bottleneck with 2 convolutions."""

    def __init__(self, c1, c2, n=1, shortcut=False, g=1, e=0.5):
        """Initializes a CSP bottleneck with 2 convolutions and n Bottleneck blocks for faster processing."""
        super().__init__()
        self.c = int(c2 * e)  # hidden channels
        self.cv1 = Conv(c1, 2 * self.c, 1, 1)
        self.cv2 = Conv((2 + n) * self.c, c2, 1)  # optional act=FReLU(c2)
        self.m = nn.ModuleList(Bottleneck(self.c, self.c, shortcut, g, k=((3, 3), (3, 3)), e=1.0) for _ in range(n))

    def forward(self, x):
        """Forward pass through C2f layer."""
        y = list(self.cv1(x).chunk(2, 1))
        y.extend(m(y[-1]) for m in self.m)
        return self.cv2(torch.cat(y, 1))

    def forward_split(self, x):
        """Forward pass using split() instead of chunk()."""
        y = list(self.cv1(x).split((self.c, self.c), 1))
        y.extend(m(y[-1]) for m in self.m)
        return self.cv2(torch.cat(y, 1))


class C3k2(C2f):
    """Faster Implementation of CSP Bottleneck with 2 convolutions."""

    def __init__(self, c1, c2, n=1, c3k=False, e=0.5, g=1, shortcut=True):
        """Initializes the C3k2 module, a faster CSP Bottleneck with 2 convolutions and optional C3k blocks."""
        super().__init__(c1, c2, n, shortcut, g, e)
        self.m = nn.ModuleList(
            C3k(self.c, self.c, 2, shortcut, g) if c3k else Bottleneck(self.c, self.c, shortcut, g) for _ in range(n)
        )

class C3k_iRMB(C3k):
    """C3k variant whose inner blocks are iRMB modules instead of Bottlenecks."""

    def __init__(self, c1, c2, n=1, shortcut=False, g=1, e=0.5, k=3):
        super().__init__(c1, c2, n, shortcut, g, e, k)
        c_ = int(c2 * e)  # hidden channels
        # iRMB takes only dim_in; a second positional argument would be read as norm_in
        self.m = nn.Sequential(*(iRMB(c_) for _ in range(n)))


class C3k2_iRMB(C3k2):
    """C3k2 variant that uses C3k_iRMB (c3k=True) or plain iRMB (c3k=False) as inner blocks."""

    def __init__(self, c1, c2, n=1, c3k=False, e=0.5, g=1, shortcut=True):
        super().__init__(c1, c2, n, c3k, e, g, shortcut)
        self.m = nn.ModuleList(C3k_iRMB(self.c, self.c, 2, shortcut, g) if c3k else iRMB(self.c) for _ in range(n))

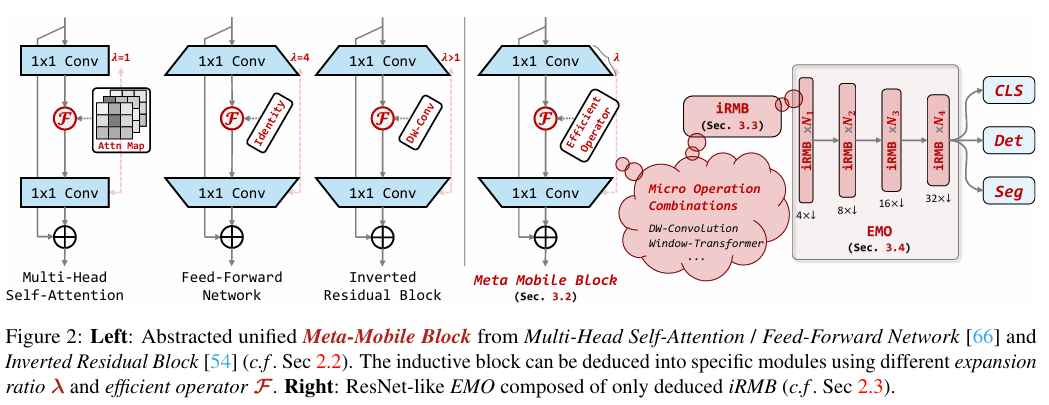
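The padding step at the top of `iRMB.forward` is easy to misread, so here is a minimal pure-Python sketch of the same arithmetic, with no tensors involved. The helper name `window_padding` is hypothetical and only mirrors the expressions in the code above; the feature-map sizes 80, 40 and 20 assume a 640-pixel input at strides 8, 16 and 32.

```python
def window_padding(height, width, window_size):
    """Return (pad_r, pad_b, n1, n2) using the same formulas as iRMB.forward."""
    # window_size <= 0 means the whole feature map is treated as a single window
    if window_size <= 0:
        win_h, win_w = height, width
    else:
        win_h, win_w = window_size, window_size
    # pad on the right/bottom so both sides become multiples of the window size
    pad_r = (win_w - width % win_w) % win_w
    pad_b = (win_h - height % win_h) % win_h
    # number of groups along each axis after padding
    n1 = (height + pad_b) // win_h
    n2 = (width + pad_r) // win_w
    return pad_r, pad_b, n1, n2


for size in (80, 40, 20):
    print(size, window_padding(size, size, 7))
# 80 (4, 4, 12, 12)
# 40 (2, 2, 6, 6)
# 20 (1, 1, 3, 3)
```

With the default `window_size=7`, none of these map sizes is a multiple of 7, so every detection scale is padded before attention and cropped back afterwards.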

5. Integration steps

5.1 Create iRMB.py under ultralytics/nn/modules

Paste the iRMB code given above into iRMB.py.

After adding the code, import it in ultralytics/nn/modules/__init__.py:

from .iRMB import *

Then import it in ultralytics/nn/tasks.py:

from .modules import *

5.2 Modify ultralytics/nn/tasks.py

In tasks.py, find the parse_model function (Ctrl+F for parse_model) and add the following branch:

elif m in {iRMB}:
    c2 = ch[f]
    args = [c2, *args]
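To see what this branch does, the sketch below replays it outside of Ultralytics. It is a toy illustration only: `resolve_irmb_args` is a hypothetical helper, and the channel list is made up. In `parse_model`, `ch` holds the output channels of the layers built so far and `f` is the `from` index taken from the YAML entry.

```python
def resolve_irmb_args(ch, f, args):
    """Mimic the added branch: prepend the input channel count to the YAML args."""
    c2 = ch[f]              # channels produced by the layer that iRMB reads from
    return c2, [c2, *args]  # iRMB keeps the channel count, so c2 is also its output width


ch = [16, 32, 64]           # hypothetical output channels of the layers built so far
c2, args = resolve_irmb_args(ch, -1, [])
print(c2, args)             # 64 [64]
```

This is why the YAML entries for iRMB can leave their argument list empty: the only required constructor argument, `dim_in`, is filled in from the previous layer.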

6. Create the YAML configuration files

Taking the YOLOv11 code base as an example, create YOLO11_iRMB.yaml:

# Ultralytics YOLO 🚀, AGPL-3.0 license
# YOLO11 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect

# Parameters
nc: 80 # number of classes
scales: # model compound scaling constants, i.e. 'model=yolo11n.yaml' will call yolo11.yaml with scale 'n'
  # [depth, width, max_channels]
  n: [0.50, 0.25, 1024] # summary: 319 layers, 2624080 parameters, 2624064 gradients, 6.6 GFLOPs
  s: [0.50, 0.50, 1024] # summary: 319 layers, 9458752 parameters, 9458736 gradients, 21.7 GFLOPs
  m: [0.50, 1.00, 512] # summary: 409 layers, 20114688 parameters, 20114672 gradients, 68.5 GFLOPs
  l: [1.00, 1.00, 512] # summary: 631 layers, 25372160 parameters, 25372144 gradients, 87.6 GFLOPs
  x: [1.00, 1.50, 512] # summary: 631 layers, 56966176 parameters, 56966160 gradients, 196.0 GFLOPs

# YOLO11n backbone
backbone:
  # [from, repeats, module, args]
  - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2
  - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4
  - [-1, 2, C3k2, [256, False, 0.25]]
  - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8
  - [-1, 2, C3k2, [512, False, 0.25]]
  - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16
  - [-1, 2, C3k2, [512, True]]
  - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32
  - [-1, 2, C3k2, [1024, True]]
  - [-1, 1, SPPF, [1024, 5]] # 9
  - [-1, 2, C2PSA, [1024]] # 10

# YOLO11n head
head:
  - [-1, 1, nn.Upsample, [None, 2, "nearest"]]
  - [[-1, 6], 1, Concat, [1]] # cat backbone P4
  - [-1, 2, C3k2, [512, False]] # 13

  - [-1, 1, nn.Upsample, [None, 2, "nearest"]]
  - [[-1, 4], 1, Concat, [1]] # cat backbone P3
  - [-1, 2, C3k2, [256, False]] # 16
  - [-1, 1, iRMB, []] # 17 attention added to the small-object detection branch

  - [-1, 1, Conv, [256, 3, 2]]
  - [[-1, 13], 1, Concat, [1]] # cat head P4
  - [-1, 2, C3k2, [512, False]] # 20 (P4/16-medium)
  - [-1, 1, iRMB, []] # 21 attention added to the medium-object detection branch

  - [-1, 1, Conv, [512, 3, 2]]
  - [[-1, 10], 1, Concat, [1]] # cat head P5
  - [-1, 2, C3k2, [1024, True]] # 24
  - [-1, 1, iRMB, []] # 25 attention added to the large-object detection branch

  - [[17, 21, 25], 1, Detect, [nc]] # Detect(P3, P4, P5)
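Inserting layers into the head shifts every later index, so the `from` list of `Detect` has to be updated by hand. A quick way to double-check the numbering is to enumerate the module names in order, as in this small sketch (the lists are transcribed from the YAML above; nothing here touches Ultralytics):

```python
backbone = ["Conv", "Conv", "C3k2", "Conv", "C3k2", "Conv", "C3k2", "Conv", "C3k2", "SPPF", "C2PSA"]
head = [
    "nn.Upsample", "Concat", "C3k2",
    "nn.Upsample", "Concat", "C3k2", "iRMB",
    "Conv", "Concat", "C3k2", "iRMB",
    "Conv", "Concat", "C3k2", "iRMB",
    "Detect",
]
layers = backbone + head  # layer index == position in this list

irmb_indices = [i for i, name in enumerate(layers) if name == "iRMB"]
detect_from = [17, 21, 25]  # the `from` field of the Detect entry

print(irmb_indices)                 # [17, 21, 25]
print(irmb_indices == detect_from)  # True
```

The same check confirms that the two `Concat` sources, 13 and 10, still point at the first head `C3k2` and at `C2PSA`, because both sit before the first inserted iRMB layer.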

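The above is one way to apply the improvement. Alternatively, iRMB can be integrated into modules such as C3k2 and C2PSA, yielding the new C3k2_iRMB and C2PSA_iRMB modules. Since these take the same (c1, c2, ...) arguments as their stock counterparts, they most likely also need to be added wherever parse_model handles C3k2 and C2PSA.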

YOLO11_C2PSA_iRMB.yaml

# Ultralytics YOLO 🚀, AGPL-3.0 license
# YOLO11 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect

# Parameters
nc: 80 # number of classes
scales: # model compound scaling constants, i.e. 'model=yolo11n.yaml' will call yolo11.yaml with scale 'n'
  # [depth, width, max_channels]
  n: [0.50, 0.25, 1024] # summary: 319 layers, 2624080 parameters, 2624064 gradients, 6.6 GFLOPs
  s: [0.50, 0.50, 1024] # summary: 319 layers, 9458752 parameters, 9458736 gradients, 21.7 GFLOPs
  m: [0.50, 1.00, 512] # summary: 409 layers, 20114688 parameters, 20114672 gradients, 68.5 GFLOPs
  l: [1.00, 1.00, 512] # summary: 631 layers, 25372160 parameters, 25372144 gradients, 87.6 GFLOPs
  x: [1.00, 1.50, 512] # summary: 631 layers, 56966176 parameters, 56966160 gradients, 196.0 GFLOPs

# YOLO11n backbone
backbone:
  # [from, repeats, module, args]
  - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2
  - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4
  - [-1, 2, C3k2, [256, False, 0.25]]
  - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8
  - [-1, 2, C3k2, [512, False, 0.25]]
  - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16
  - [-1, 2, C3k2, [512, True]]
  - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32
  - [-1, 2, C3k2, [1024, True]]
  - [-1, 1, SPPF, [1024, 5]] # 9
  - [-1, 2, C2PSA_iRMB, [1024]] # 10

# YOLO11n head
head:
  - [-1, 1, nn.Upsample, [None, 2, "nearest"]]
  - [[-1, 6], 1, Concat, [1]] # cat backbone P4
  - [-1, 2, C3k2, [512, False]] # 13

  - [-1, 1, nn.Upsample, [None, 2, "nearest"]]
  - [[-1, 4], 1, Concat, [1]] # cat backbone P3
  - [-1, 2, C3k2, [256, False]] # 16 (P3/8-small)

  - [-1, 1, Conv, [256, 3, 2]]
  - [[-1, 13], 1, Concat, [1]] # cat head P4
  - [-1, 2, C3k2, [512, False]] # 19 (P4/16-medium)

  - [-1, 1, Conv, [512, 3, 2]]
  - [[-1, 10], 1, Concat, [1]] # cat head P5
  - [-1, 2, C3k2, [1024, True]] # 22 (P5/32-large)

  - [[16, 19, 22], 1, Detect, [nc]] # Detect(P3, P4, P5)

YOLO11_C3k2_iRMB.yaml

# Ultralytics YOLO 🚀, AGPL-3.0 license
# YOLO11 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect

# Parameters
nc: 80 # number of classes
scales: # model compound scaling constants, i.e. 'model=yolo11n.yaml' will call yolo11.yaml with scale 'n'
  # [depth, width, max_channels]
  n: [0.50, 0.25, 1024] # summary: 319 layers, 2624080 parameters, 2624064 gradients, 6.6 GFLOPs
  s: [0.50, 0.50, 1024] # summary: 319 layers, 9458752 parameters, 9458736 gradients, 21.7 GFLOPs
  m: [0.50, 1.00, 512] # summary: 409 layers, 20114688 parameters, 20114672 gradients, 68.5 GFLOPs
  l: [1.00, 1.00, 512] # summary: 631 layers, 25372160 parameters, 25372144 gradients, 87.6 GFLOPs
  x: [1.00, 1.50, 512] # summary: 631 layers, 56966176 parameters, 56966160 gradients, 196.0 GFLOPs

# YOLO11n backbone
backbone:
  # [from, repeats, module, args]
  - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2
  - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4
  - [-1, 2, C3k2_iRMB, [256, False, 0.25]]
  - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8
  - [-1, 2, C3k2_iRMB, [512, False, 0.25]]
  - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16
  - [-1, 2, C3k2_iRMB, [512, True]]
  - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32
  - [-1, 2, C3k2_iRMB, [1024, True]]
  - [-1, 1, SPPF, [1024, 5]] # 9
  - [-1, 2, C2PSA, [1024]] # 10

# YOLO11n head
head:
  - [-1, 1, nn.Upsample, [None, 2, "nearest"]]
  - [[-1, 6], 1, Concat, [1]] # cat backbone P4
  - [-1, 2, C3k2, [512, False]] # 13

  - [-1, 1, nn.Upsample, [None, 2, "nearest"]]
  - [[-1, 4], 1, Concat, [1]] # cat backbone P3
  - [-1, 2, C3k2, [256, False]] # 16 (P3/8-small)

  - [-1, 1, Conv, [256, 3, 2]]
  - [[-1, 13], 1, Concat, [1]] # cat head P4
  - [-1, 2, C3k2, [512, False]] # 19 (P4/16-medium)

  - [-1, 1, Conv, [512, 3, 2]]
  - [[-1, 10], 1, Concat, [1]] # cat head P5
  - [-1, 2, C3k2, [1024, True]] # 22 (P5/32-large)

  - [[16, 19, 22], 1, Detect, [nc]] # Detect(P3, P4, P5)
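iRMB asserts `dim_in % dim_head == 0` (with `dim_head=8` by default), so it is worth checking that the hidden width `self.c` of each C3k2_iRMB layer satisfies this at every model scale. The sketch below does that arithmetic in plain Python. It rests on two assumptions that are not stated in this article: that C3k2_iRMB is registered in `parse_model` the same way as C3k2, and that channels are scaled as `make_divisible(min(c2, max_channels) * width, 8)`. The helper names are hypothetical.

```python
import math

# (depth, width, max_channels) per scale, copied from the YAML above
SCALES = {
    "n": (0.50, 0.25, 1024),
    "s": (0.50, 0.50, 1024),
    "m": (0.50, 1.00, 512),
    "l": (1.00, 1.00, 512),
    "x": (1.00, 1.50, 512),
}
# (c2, e) of the four C3k2_iRMB backbone layers; e falls back to 0.5 when omitted
C3K2_IRMB_LAYERS = [(256, 0.25), (512, 0.25), (512, 0.5), (1024, 0.5)]


def make_divisible(x, divisor=8):
    """Round x up to the nearest multiple of divisor."""
    return math.ceil(x / divisor) * divisor


def irmb_channels(scale):
    """Hidden width self.c (the dim_in seen by iRMB) for each C3k2_iRMB layer."""
    _, width, max_channels = SCALES[scale]
    dims = []
    for c2, e in C3K2_IRMB_LAYERS:
        scaled = make_divisible(min(c2, max_channels) * width)  # assumed scaling rule
        dims.append(int(scaled * e))                            # self.c = int(c2 * e) in C2f
    return dims


for scale in SCALES:
    dims = irmb_channels(scale)
    print(scale, dims, all(d % 8 == 0 for d in dims))
# n [16, 32, 64, 128] True
# s [32, 64, 128, 256] True
# m [64, 128, 256, 256] True
# l [64, 128, 256, 256] True
# x [96, 192, 384, 384] True
```

Under these assumptions every width is a multiple of 8, so the default `dim_head` works at all five scales; a custom width multiplier or a different `dim_head` would need the same check.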

7. Model training

import warnings

warnings.filterwarnings('ignore')

from ultralytics import YOLO

if __name__ == '__main__':
    model = YOLO('YOLO11_iRMB.yaml')
    # model.load('yolo11n.pt')  # loading pretrain weights
    model.train(data='dataset/data.yaml',
                cache=False,
                imgsz=640,
                epochs=300,
                batch=32,
                close_mosaic=0,
                workers=4,  # if training hangs for no obvious reason on Windows, try workers=0
                # device='0',
                optimizer='SGD',  # using SGD
                # patience=0,  # set 0 to close earlystop.
                # resume=True,  # resume training; initialise YOLO with last.pt in that case
                # amp=False,  # close amp
                # fraction=0.2,
                project='runs/train',
                name='exp',
                )
