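In fields such as geological exploration and mineral development, the accuracy of mineral classification directly affects downstream analysis and decisions. Manual classification depends on expert experience, which makes it slow and subjective. Machine learning and deep learning make automated, high-accuracy classification possible. This article records the full process of reproducing a mineral classification system. It crosses 6 missing-value imputation methods with 7 classification models, and the best combination reaches 99% accuracy.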

I. Project Background and Tech Stack

1.1 Project Goal

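The goal is to build a classifier that identifies the mineral type from mineral composition features. The core requirement is to compare how well each imputation strategy pairs with each classification model, and to find the best combination.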

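1.2 Tech Stack

- Data processing: Python, Pandas (reading data, handling missing values, type conversion), imbalanced-learn (SMOTE class balancing)
- Classical machine learning: Scikit-learn (logistic regression, SVM, random forest, AdaBoost)
- Ensemble learning: XGBoost (high-performance gradient-boosted trees)
- Deep learning: PyTorch (MLP, 1D-CNN)
- Evaluation: Scikit-learn metrics (accuracy, precision, recall, F1 score)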
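The training code later in this article averages precision, recall and F1 with `average="weighted"`. The following is a minimal plain-Python sketch of what that average means. It is for illustration only and is not the scikit-learn implementation. Each class's F1 is weighted by the number of true samples in that class, and an undefined precision or recall counts as 0, which matches `zero_division=0`. The labels in the example are hypothetical.

```python
from collections import Counter


def weighted_f1(y_true, y_pred):
    """Support-weighted mean of per-class F1; undefined ratios count as 0."""
    support = Counter(y_true)  # number of true samples per class
    total = len(y_true)
    score = 0.0
    for label, count in support.items():
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != label and p == label)
        fn = count - tp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        score += f1 * count / total  # weight = share of true samples in this class
    return score


# Hypothetical labels: class 0 has one miss, class 1 has one false positive
y_true = [0, 0, 1, 1]
y_pred = [0, 1, 1, 1]
print(round(weighted_f1(y_true, y_pred), 4))  # 0.7333
```

Classes that appear only in `y_pred` have zero support. They therefore get zero weight, which is why the loop can run over the true labels only.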

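1.3 Dataset

The dataset contains multi-dimensional composition features for each mineral sample, such as element contents, density and hardness. The label is the mineral type (column 矿物类型). The raw data contains some missing values, so it must be preprocessed first. The data is then split into a training set and a test set for training and evaluation. The code below uses an 80/20 split, stratified by label.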

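II. Core Workflow: From Preprocessing to Model Training

The system is built in three stages: data preprocessing (missing-value imputation) → training multiple classifiers → comparing results and selecting the best scheme. Crossing 6 imputation methods with 7 classification models gives 42 combinations.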
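One way to picture the 6 × 7 grid is to enumerate it explicitly. The sketch below uses the fill-method names that serve as file suffixes in this article's code. Five of the model names are the JSON keys used by the training code. "MLP" and "1D-CNN" are hypothetical placeholders, because the keys used for the neural networks are not shown in this section.

```python
from itertools import product

fill_methods = ["中位数", "众数", "删除空数据行", "均值", "线性回归", "随机森林"]
# "MLP" and "1D-CNN" are placeholder keys; the other five match the JSON result keys
models = ["逻辑回归", "支持向量机(Poly核)", "随机森林分类", "AdaBoost分类", "XGBoost分类", "MLP", "1D-CNN"]

# Every (model, fill method) pair is one experiment
experiments = list(product(models, fill_methods))

# Empty result table with the same nesting as the JSON file: model -> fill method -> metrics
results = {model: {method: None for method in fill_methods} for model in models}

print(len(experiments))  # 42
```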

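Stage 1: Data Preprocessing, Comparing Imputation Strategies

Missing values in the raw data either make model training fail or lower its accuracy, so they must be filled in a suitable way. Six common strategies were chosen, ranging from simple statistics to model-based prediction: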

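- Median fill: each numeric feature's gaps are filled with its median. This is robust to outliers, which suits mineral composition data that may contain extreme values.
- Mean fill: gaps are filled with the feature mean. It is simple to compute but sensitive to outliers.
- Mode fill: for discrete features, or numeric features with a concentrated distribution, gaps are filled with the most frequent value.
- Drop rows with missing values: every sample that contains a missing value is deleted. This is simple but discards data, so it only suits cases where missing values are rare.
- Linear regression fill: a linear regression trained on the other features predicts the missing values. It exploits correlations between features and is usually more accurate than a single statistic.
- Random forest fill: a random forest regressor predicts the missing values. It can capture nonlinear relationships between features and handles more complex distributions.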
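The difference between the three statistic-based fills shows up as soon as a column contains an extreme value. Below is a minimal sketch using Python's standard `statistics` module on a hypothetical element-content column. The column has one outlier (25.0), and `None` marks the gaps.

```python
import statistics

# Hypothetical element-content column: one extreme value and two gaps (None)
column = [2.0, 2.0, 2.2, 2.4, 25.0, None, None]
observed = [v for v in column if v is not None]

fill_values = {
    "median": statistics.median(observed),  # 2.2, unaffected by the outlier
    "mean": statistics.mean(observed),  # 6.72, pulled up by 25.0
    "mode": statistics.mode(observed),  # 2.0, the most frequent value
}


def fill(col, value):
    """Replace every None with the given fill value."""
    return [value if v is None else v for v in col]


print(fill(column, fill_values["median"]))
```

Only the mean is dragged far away from the typical values, which is why the median is preferred for data that may contain extreme values.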

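Implementation notes: the linear regression and random forest fills work column by column. Columns are sorted by their number of missing values in ascending order. Each column with gaps is then predicted from the columns processed before it, which are already complete, so the model inputs never contain missing values. Leakage is prevented separately, by treating the two sets differently: every model or statistic used to fill the test set is fitted on the training set only. The mode, mean and median fills are computed per mineral class. For the test set, the class of each row is taken from its true label, but that label would not be available for new, unlabeled samples. The test scores for these three methods are therefore likely somewhat optimistic.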
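Below is a minimal NumPy sketch of the column-by-column idea. It assumes a plain float array in which `np.nan` marks the gaps. The helper name `regression_fill` is hypothetical, and the sketch uses least squares via `np.linalg.lstsq` in place of scikit-learn's `LinearRegression`. Columns are visited in ascending order of missing count, and each incomplete column is regressed on the complete columns visited before it. Passing a completed training array as `reference` fits the regression on training rows only, which mirrors how the test set is filled in this article.

```python
import numpy as np


def regression_fill(data, reference=None):
    """Fill np.nan gaps column by column with least-squares linear regression.

    data: 2D float array with np.nan gaps.
    reference: optional complete 2D array (e.g. the filled training set) to fit on;
               when None, each column is fitted on data's own observed rows.
    """
    data = data.copy()
    # Visit columns in ascending order of missing count; stable sort keeps ties in column order
    order = np.argsort(np.isnan(data).sum(axis=0), kind="stable")
    done = []  # complete columns, usable as predictors
    for col in order:
        missing = np.isnan(data[:, col])
        if missing.any() and done:
            if reference is None:
                X_fit, y_fit = data[~missing][:, done], data[~missing, col]
            else:
                X_fit, y_fit = reference[:, done], reference[:, col]
            # Prepend a column of ones so the fitted model has an intercept
            A = np.column_stack([np.ones(len(X_fit)), X_fit])
            coef, *_ = np.linalg.lstsq(A, y_fit, rcond=None)
            X_new = np.column_stack([np.ones(missing.sum()), data[missing][:, done]])
            data[missing, col] = X_new @ coef
        # Only a column without gaps may serve as a predictor for later columns
        if not np.isnan(data[:, col]).any():
            done.append(int(col))
    return data


# Hypothetical toy data where column 2 = 1 + 2 * col0 + 3 * col1
train = np.array([[0, 0, 1], [1, 0, 3], [0, 1, 4], [1, 1, 6], [2, 1, np.nan]], dtype=float)
test = np.array([[3.0, 2.0, np.nan]])

train_filled = regression_fill(train)  # fitted on the training set's own rows
test_filled = regression_fill(test, reference=train_filled)  # fitted on training rows only
print(train_filled[4, 2], test_filled[0, 2])
```

A column that would be first in the order but still has gaps is left unfilled. It is also kept out of the predictor set, so missing values never leak into later regressions.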

Code:

```python
import os

import pandas as pd
from imblearn.over_sampling import SMOTE
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

import fill  # the fill-function module (fill.py) listed below

# 1. Read the Excel file
df = pd.read_excel("矿物数据.xls")

# 2. Drop rows whose mineral type (矿物类型) is E
df = df[df["矿物类型"] != "E"]

# 3. Drop the serial-number column (序号)
df = df.drop(columns=["序号"])

# 4. Map the mineral types to integer labels
mapping = {"A": 0, "B": 1, "C": 2, "D": 3}  # mapping rule
df["矿物类型"] = df["矿物类型"].map(mapping)

# 5. Convert every cell to a number; errors="coerce" turns unparseable values into NaN
for col in df.columns:
    df[col] = pd.to_numeric(df[col], errors="coerce")

x = df.drop(columns=["矿物类型"])  # features
y = df["矿物类型"]  # labels (classification target)

# 6. Split BEFORE scaling and imputation so that no test information leaks into training.
#    Test set = 20%, fixed seed for reproducibility; stratify=y keeps the class
#    proportions the same in both sets.
x_train, x_test, y_train, y_test = train_test_split(
    x, y, test_size=0.2, random_state=4, stratify=y
)

# 7. Standardize: fit on the training set only, then apply to the test set.
#    StandardScaler ignores NaN when fitting and keeps NaN in its output, so imputation still works.
#    index= keeps the features aligned with their labels.
scaler = StandardScaler()
x_train = pd.DataFrame(scaler.fit_transform(x_train), columns=x.columns, index=x_train.index)
x_test = pd.DataFrame(scaler.transform(x_test), columns=x.columns, index=x_test.index)

# 8. Missing-value handling: keep exactly one pair of calls active
# Drop rows with missing values
# x_train_fill, y_train_fill = fill.drop_null_train(x_train, y_train)
# x_test_fill, y_test_fill = fill.drop_null_test(x_test, y_test)
# Mode
# x_train_fill, y_train_fill = fill.mode_train_fill(x_train, y_train)
# x_test_fill, y_test_fill = fill.mode_test_fill(x_train_fill, y_train_fill, x_test, y_test)
# Mean
# x_train_fill, y_train_fill = fill.mean_train_fill(x_train, y_train)
# x_test_fill, y_test_fill = fill.mean_test_fill(x_train_fill, y_train_fill, x_test, y_test)
# Median
# x_train_fill, y_train_fill = fill.median_train_fill(x_train, y_train)
# x_test_fill, y_test_fill = fill.median_test_fill(x_train_fill, y_train_fill, x_test, y_test)
# Linear regression
# x_train_fill, y_train_fill = fill.lr_train_fill(x_train, y_train)
# x_test_fill, y_test_fill = fill.lr_test_fill(x_train_fill, y_train_fill, x_test, y_test)
# Random forest (active)
x_train_fill, y_train_fill = fill.rf_train_fill(x_train, y_train)
x_test_fill, y_test_fill = fill.rf_test_fill(x_train_fill, y_train_fill, x_test, y_test)

# 9. Class balancing with SMOTE (training set only)
oversampler = SMOTE(k_neighbors=1, random_state=50)
os_x_train, os_y_train = oversampler.fit_resample(x_train_fill, y_train_fill)

# 10. Save the cleaned data as Excel files (change the [随机森林] suffix to match the fill method)
os.makedirs("temp_data", exist_ok=True)
# sample(frac=1) returns all rows in random order, i.e. it shuffles the training set
data_train = pd.concat([os_y_train, os_x_train], axis=1).sample(frac=1, random_state=4)
# The test set is not used for training, so it does not need shuffling
data_test = pd.concat([y_test_fill, x_test_fill], axis=1)
data_train.to_excel("temp_data/训练数据集[随机森林].xlsx", index=False)
data_test.to_excel("temp_data/测试数据集[随机森林].xlsx", index=False)
```
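Step 9 balances the classes with SMOTE. Below is a minimal plain-Python sketch of how one synthetic sample is made with `k_neighbors=1`. It is an illustration, not imbalanced-learn's implementation, and `smote_sample` is a hypothetical name. A random minority sample is picked, its nearest minority neighbour is found, and a new point is placed at a random position on the segment between them. With larger `k_neighbors`, the neighbour is drawn at random from the k nearest.

```python
import random


def smote_sample(minority, rng):
    """Create one synthetic minority sample the SMOTE way, with k_neighbors=1.

    minority: list of feature vectors (lists of floats) from one class, at least 2 of them.
    """
    i = rng.randrange(len(minority))
    base = minority[i]
    # Nearest other minority point by squared Euclidean distance
    neighbour = min(
        (p for j, p in enumerate(minority) if j != i),
        key=lambda p: sum((a - b) ** 2 for a, b in zip(base, p)),
    )
    gap = rng.random()  # uniform in [0, 1): position along the segment
    return [a + gap * (b - a) for a, b in zip(base, neighbour)]


rng = random.Random(50)
minority = [[0.0, 0.0], [2.0, 4.0]]  # hypothetical minority-class samples
synthetic = [smote_sample(minority, rng) for _ in range(5)]
print(synthetic)
```

Every synthetic point lies between two real minority samples. This is why SMOTE is applied only to the training set: the test set should contain real samples only.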

Fill functions imported by the script (fill.py):

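```python
import pandas as pd


# ---------- Drop rows with missing values ----------
# Train and test get separate functions because, in general, the test set must be processed
# with information from the training set only (to avoid leakage). Deleting rows needs no
# such information, so the two functions do the same thing.
def drop_null_train(x, y):
    # Reset both indexes before concatenating: earlier filtering and splitting leave gaps,
    # and misaligned indexes would produce rows full of NaN
    data = pd.concat([x.reset_index(drop=True), y.reset_index(drop=True)], axis=1)
    df_filled = data.dropna()
    return df_filled.drop("矿物类型", axis=1), df_filled["矿物类型"]


def drop_null_test(x, y):
    data = pd.concat([x.reset_index(drop=True), y.reset_index(drop=True)], axis=1)
    df_filled = data.dropna()
    return df_filled.drop("矿物类型", axis=1), df_filled["矿物类型"]
```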

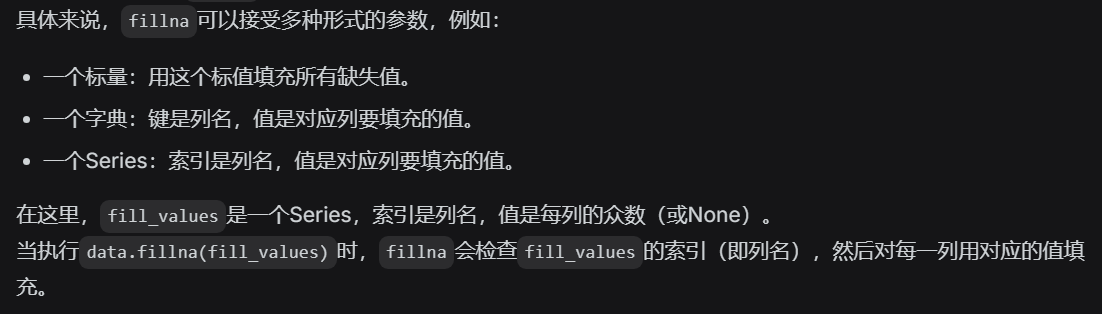

```python
import pandas as pd
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import LinearRegression

LABEL = "矿物类型"  # label column


def _join(x, y):
    """Concatenate features and labels after resetting both indexes so rows stay aligned."""
    return pd.concat([x.reset_index(drop=True), y.reset_index(drop=True)], axis=1)


def _split(data):
    """Turn a combined table back into (features, labels) with a fresh 0..n-1 index."""
    data = data.reset_index(drop=True)
    return data.drop(LABEL, axis=1), data[LABEL]


# ---------- Per-class statistic fills: mode / mean / median ----------
def _mode(df):
    # First mode of each column; None (no fill) when a column has no observed value
    return df.apply(lambda col: col.mode().iloc[0] if len(col.mode()) > 0 else None)


def _mean(df):
    return df.mean()  # NaN for an all-missing column, which leaves it unfilled


def _median(df):
    return df.median()


def _class_fill_train(x_train, y_train, stat):
    """Fill each class's gaps with that class's own statistic (labels are known in training)."""
    data = _join(x_train, y_train)
    parts = [group.fillna(stat(group)) for _, group in data.groupby(LABEL)]
    return _split(pd.concat(parts))


def _class_fill_test(x_train_fill, y_train_fill, x_test, y_test, stat):
    """Fill test gaps with the same class's statistic, computed on the training set.

    The class of each test row is taken from its true label (y_test), which is not
    available for new, unlabeled samples."""
    train = _join(x_train_fill, y_train_fill)
    test = _join(x_test, y_test)
    parts = [group.fillna(stat(train[train[LABEL] == label]))
             for label, group in test.groupby(LABEL)]
    return _split(pd.concat(parts))


def mode_train_fill(x_train, y_train):
    return _class_fill_train(x_train, y_train, _mode)


def mode_test_fill(x_train_fill, y_train_fill, x_test, y_test):
    return _class_fill_test(x_train_fill, y_train_fill, x_test, y_test, _mode)


def mean_train_fill(x_train, y_train):
    return _class_fill_train(x_train, y_train, _mean)


def mean_test_fill(x_train_fill, y_train_fill, x_test, y_test):
    return _class_fill_test(x_train_fill, y_train_fill, x_test, y_test, _mean)


def median_train_fill(x_train, y_train):
    return _class_fill_train(x_train, y_train, _median)


def median_test_fill(x_train_fill, y_train_fill, x_test, y_test):
    return _class_fill_test(x_train_fill, y_train_fill, x_test, y_test, _median)


# ---------- Regression fills: linear regression / random forest ----------
def _regression_fill_train(x_train, y_train, make_model, name):
    """Fill training columns one by one, in ascending order of missing count.

    Each incomplete column is predicted from the complete columns handled before it,
    using the rows where the column is observed as training data."""
    data = _join(x_train, y_train)
    x = data.drop(LABEL, axis=1)
    null_num_sorted = x.isnull().sum().sort_values(ascending=True)
    used = []  # complete columns that can serve as predictors
    for col in null_num_sorted.index:
        if null_num_sorted[col] > 0:
            missing = x[col].isnull()
            if not used or missing.all():
                print(f"Warning: training column {col} has no usable predictors or samples, skipped")
                continue
            model = make_model()
            model.fit(x.loc[~missing, used], x.loc[~missing, col])
            x.loc[missing, col] = model.predict(x.loc[missing, used])  # fill only this column
            print(f"Filled training column {col} with {name}")
        used.append(col)
    return x, data[LABEL]


def _regression_fill_test(x_train_fill, y_train_fill, x_test, y_test, make_model, name):
    """Fill test columns with models trained only on the already-filled training set."""
    train_x = _join(x_train_fill, y_train_fill).drop(LABEL, axis=1)
    test = _join(x_test, y_test)
    x = test.drop(LABEL, axis=1)
    null_sum_sorted = x.isnull().sum().sort_values(ascending=True)
    used = []
    for col in null_sum_sorted.index:
        if null_sum_sorted[col] > 0:
            if not used:
                print(f"Warning: test column {col} has no usable predictors, skipped")
                continue
            model = make_model()
            model.fit(train_x[used], train_x[col])  # trained on training data only
            missing = x[col].isnull()
            x.loc[missing, col] = model.predict(x.loc[missing, used])
            print(f"Filled test column {col} with {name}")
        used.append(col)
    return x, test[LABEL]


def _make_rf():
    # 100 trees, fixed seed for reproducibility, all CPU cores
    return RandomForestRegressor(n_estimators=100, random_state=42, n_jobs=-1)


def lr_train_fill(x_train, y_train):
    return _regression_fill_train(x_train, y_train, LinearRegression, "linear regression")


def lr_test_fill(x_train_fill, y_train_fill, x_test, y_test):
    return _regression_fill_test(x_train_fill, y_train_fill, x_test, y_test,
                                 LinearRegression, "linear regression")


def rf_train_fill(x_train, y_train):
    return _regression_fill_train(x_train, y_train, _make_rf, "random forest")


def rf_test_fill(x_train_fill, y_train_fill, x_test, y_test):
    return _regression_fill_test(x_train_fill, y_train_fill, x_test, y_test,
                                 _make_rf, "random forest")
```
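fill.py computes its mode, mean and median separately for each mineral class. Below is a minimal plain-Python sketch of that per-class statistic for the median. It sits next to a class-free median that could fill a test row whose label is unknown. The class-free variant is a swapped-in alternative, not the author's method. The data and the helper names `class_medians` and `observed_median` are hypothetical.

```python
import statistics


def class_medians(values, labels):
    """Median of the observed values (None = missing) for each class."""
    by_class = {}
    for value, label in zip(values, labels):
        if value is not None:
            by_class.setdefault(label, []).append(value)
    return {label: statistics.median(vals) for label, vals in by_class.items()}


def observed_median(values):
    """Median over all observed values, ignoring class."""
    return statistics.median(v for v in values if v is not None)


# Hypothetical training column for one feature, with mineral-type labels
train_values = [1.0, 3.0, 10.0, 20.0, None]
train_labels = [0, 0, 1, 1, 1]

per_class = class_medians(train_values, train_labels)  # needs the row's class
fallback = observed_median(train_values)  # usable for a row without a label
print(per_class, fallback)
```

The per-class values differ a lot from the class-free value (2.0 and 15.0 versus 6.5). When the class is known, such a fill carries information about the label itself, which is why using the test labels for it can inflate test scores.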

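Stage 2: Training Multiple Classifiers

Each of the 6 preprocessed datasets is used to train 7 classification models. The models cover three families (classical machine learning, ensemble learning and deep learning), so the comparison spans all of them.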

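2.1 Classical Machine Learning Models

- Logistic regression: the baseline model. It is simple and fast to train, which makes it useful for a first check that the data is informative. Parameters: max_iter=1000 (to ensure convergence), random_state=42 (reproducibility) and n_jobs=1 (parallelism disabled to keep memory use down).
- SVM (support vector machine): uses the polynomial (poly) kernel to capture nonlinear relationships between features. Problem solved: the initial configuration, degree=4 with probability=True, was so expensive that training stalled on the median-filled dataset. With probability=True, scikit-learn runs an extra internal five-fold cross-validation to calibrate probabilities. The fix was degree=2, probability turned off and a larger kernel cache (cache_size=2000, in MB), which made training much faster.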
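The following sketch shows what the SVM's degree, coef0 and gamma parameters control. It writes out, in plain Python, the polynomial kernel as scikit-learn's SVC documents it, `(gamma * <x, z> + coef0) ** degree`, together with `gamma="scale"`, which is `1 / (n_features * X.var())`. The feature values are hypothetical.

```python
def poly_kernel(x, z, gamma, coef0=0.1, degree=2):
    """Polynomial kernel as used by scikit-learn's SVC: (gamma * <x, z> + coef0) ** degree."""
    return (gamma * sum(a * b for a, b in zip(x, z)) + coef0) ** degree


def scale_gamma(X):
    """gamma="scale": 1 / (n_features * variance of all entries of X)."""
    values = [v for row in X for v in row]
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)  # population variance, like X.var()
    return 1.0 / (len(X[0]) * var)


X = [[0.0, 2.0], [2.0, 0.0]]  # hypothetical training features
gamma = scale_gamma(X)  # 0.5
k = poly_kernel([1.0, 2.0], [3.0, 4.0], gamma)  # (0.5 * 11 + 0.1) ** 2
print(gamma, k)
```

The degree is the exponent applied to every kernel value. Lowering it from 4 to 2 gives a simpler decision surface, which is consistent with the faster training reported above.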

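2.2 Ensemble Learning Models

- Random forest: an ensemble of decision trees with strong resistance to overfitting. Parameters: n_estimators=50, max_depth=20, max_features="log2", with all CPU cores used (n_jobs=-1) to speed up training.
- AdaBoost: a boosting method in which each new weak classifier concentrates on the samples the previous ones misclassified. The base learner is a depth-2 decision tree, with n_estimators=200 and learning_rate=1.0, a configuration intended for the small sample size.
- XGBoost: high-performance gradient-boosted trees, good at modeling complex feature interactions. Parameters: learning_rate=0.05, max_depth=7, subsample=0.6, colsample_bytree=0.8, with the objective multi:softprob for multi-class classification. Problem solved: XGBoost requires class labels to be consecutive integers starting from 0 and raises an error otherwise. A mapping step therefore converts the original labels into consecutive integers per dataset, and num_class is set from the number of classes actually present in that dataset.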
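The label-remapping step for XGBoost can be sketched in plain Python. The helper name `build_label_mapping` and the example labels are hypothetical. The example assumes a dataset that ends up containing only classes 1, 2 and 3, so the labels no longer start at 0.

```python
def build_label_mapping(train_labels):
    """Map the sorted distinct training labels onto 0..K-1; also return the inverse map."""
    classes = sorted(set(train_labels))
    forward = {old: new for new, old in enumerate(classes)}
    inverse = {new: old for old, new in forward.items()}
    return forward, inverse


# Hypothetical case: class 0 is absent from a dataset
train_labels = [1, 3, 3, 1, 2]
forward, inverse = build_label_mapping(train_labels)
encoded = [forward[label] for label in train_labels]  # what XGBoost is trained on
num_class = len(forward)  # 3
decoded = [inverse[code] for code in encoded]  # back to the original labels
print(forward, encoded, num_class)
```

The test labels must go through the same `forward` map before metrics are computed. Otherwise predictions and ground truth would be compared in different label spaces.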

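2.3 Deep Learning Models

- MLP (multi-layer perceptron): three fully connected layers that take the one-dimensional feature vector as input. Structure: input layer → hidden layer (32 neurons) → hidden layer (64 neurons) → output layer (one neuron per class), with ReLU activations, the Adam optimizer (lr=0.001) and 1500 training epochs.
- 1D-CNN (one-dimensional convolutional network): treats the feature vector as a 1D sequence and uses convolution kernels to capture relationships between neighboring features. This assumes that the column order carries some meaning. Structure: 3 convolutional layers (16 → 32 → 64 channels) followed by a fully connected layer whose input size follows the number of features. Training runs on the GPU when one is available and on the CPU otherwise. The network does not need labels as strictly formatted as XGBoost does, but a lightweight label mapping is kept so that no output neurons are wasted.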
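The layer sizes above can be checked with a little arithmetic. With kernel_size=3 and padding=1, each Conv1d layer keeps the sequence length unchanged, so the flattened input of the final layer is 64 × input_dim. The sketch below computes that size and the MLP's parameter count in plain Python. input_dim=13 is a hypothetical feature count, and num_classes=4 matches the four mineral types A to D kept in preprocessing.

```python
def conv1d_out_len(length, kernel_size=3, padding=1, stride=1):
    """Output length of nn.Conv1d with dilation 1: floor((L + 2*padding - kernel_size) / stride) + 1."""
    return (length + 2 * padding - kernel_size) // stride + 1


def cnn_fc_in_features(input_dim, channels=(16, 32, 64)):
    """Flattened size entering CNN1D's final Linear layer."""
    length = input_dim
    for _ in channels:  # three conv layers, each kernel_size=3, padding=1
        length = conv1d_out_len(length)
    return channels[-1] * length


def mlp_param_count(input_dim, hidden_dim=32, num_classes=4):
    """Weights + biases of Linear(in, h) -> Linear(h, 2h) -> Linear(2h, num_classes)."""
    sizes = [input_dim, hidden_dim, hidden_dim * 2, num_classes]
    return sum(a * b + b for a, b in zip(sizes, sizes[1:]))


print(cnn_fc_in_features(13))  # 832
print(mlp_param_count(13))  # 2820
```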

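2.4 Result Storage

To make later comparison easier, every model's evaluation results (accuracy, precision, recall, F1 score) are written to a JSON file. The file uses the nested structure model → fill method → metric, which keeps the results traceable and easy to read back.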
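Below is a minimal sketch of the read-merge-write pattern behind that nested JSON file. The helper names are hypothetical, and the metric values in the usage example are dummies that only show the nesting. Reading the existing file before writing means that each model's results are added without discarding those of models trained earlier.

```python
import json
import os


def merge_model_results(all_results, model_name, results):
    """Return a copy of all_results with one model's {fill method: metrics} added or replaced."""
    merged = dict(all_results)
    merged[model_name] = results
    return merged


def save_model_results(path, model_name, results):
    """Read the shared JSON file if it exists, merge one model's results, write it back."""
    all_results = {}
    if os.path.exists(path):
        with open(path, "r", encoding="utf-8") as f:
            all_results = json.load(f)
    all_results = merge_model_results(all_results, model_name, results)
    with open(path, "w", encoding="utf-8") as f:
        json.dump(all_results, f, ensure_ascii=False, indent=4)  # keep Chinese keys readable
    return all_results


# Dummy values, only to show the model -> fill method -> metric nesting
existing = {"逻辑回归": {"中位数": {"准确率(Accuracy)": 0.5}}}
merged = merge_model_results(existing, "随机森林分类", {"中位数": {"准确率(Accuracy)": 0.5}})
print(json.dumps(merged, ensure_ascii=False, indent=4))
```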

Code:

```python
import json
import os

import pandas as pd
import xgboost as xgb
from sklearn.ensemble import AdaBoostClassifier, RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score, f1_score, precision_score, recall_score
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# ---------- 1. Configuration ----------
# Fill-method names; they must match the suffixes of the files written in stage 1
fill_methods = ["中位数", "众数", "删除空数据行", "均值", "线性回归", "随机森林"]
RESULT_PATH = "temp_data/所有模型分类结果.json"

# One factory per model so that every dataset gets a freshly initialized model;
# the keys are the model names stored in the JSON file
model_factories = {
    "逻辑回归": lambda: LogisticRegression(max_iter=1000, random_state=42, n_jobs=1),
    # degree=2 and probability=False replace degree=4/probability=True, which stalled training
    "支持向量机(Poly核)": lambda: SVC(C=1, coef0=0.1, degree=2, gamma="scale", kernel="poly",
                                  probability=False, random_state=100, cache_size=2000),
    "随机森林分类": lambda: RandomForestClassifier(
        bootstrap=False, max_depth=20, max_features="log2", min_samples_leaf=1,
        min_samples_split=2, n_estimators=50, random_state=487, n_jobs=-1),
    "AdaBoost分类": lambda: AdaBoostClassifier(
        estimator=DecisionTreeClassifier(max_depth=2),  # named base_estimator before scikit-learn 1.2
        algorithm="SAMME",  # deprecated from scikit-learn 1.6 on, where SAMME is the only algorithm
        n_estimators=200, learning_rate=1.0, random_state=0),
}

# XGBoost parameters (num_class is added per dataset)
xgb_base_params = {
    "learning_rate": 0.05,  # learning rate
    "n_estimators": 200,  # number of trees
    "max_depth": 7,  # maximum tree depth
    "min_child_weight": 1,  # minimum sum of instance weights in a leaf
    "gamma": 0,  # minimum loss reduction required to split a node
    "subsample": 0.6,  # fraction of training rows sampled per tree
    "colsample_bytree": 0.8,  # fraction of columns sampled per tree
    "objective": "multi:softprob",  # multi-class objective with probability output
    "random_state": 0,  # random seed
}


def load_split(method):
    """Read the train/test files of one fill method; return None if they are missing."""
    try:
        train_df = pd.read_excel(f"temp_data/训练数据集[{method}].xlsx")
        test_df = pd.read_excel(f"temp_data/测试数据集[{method}].xlsx")
    except FileNotFoundError:
        print(f"Warning: no dataset found for {method}, skipping this method")
        return None
    return (train_df.drop("矿物类型", axis=1), train_df["矿物类型"],
            test_df.drop("矿物类型", axis=1), test_df["矿物类型"])


def evaluate(y_true, y_pred):
    """Accuracy plus weighted precision/recall/F1 for multi-class data, rounded to 4 places."""
    return {
        "准确率(Accuracy)": round(accuracy_score(y_true, y_pred), 4),
        "精确率(Precision)": round(precision_score(y_true, y_pred, average="weighted", zero_division=0), 4),
        "召回率(Recall)": round(recall_score(y_true, y_pred, average="weighted", zero_division=0), 4),
        "F1分数(F1-Score)": round(f1_score(y_true, y_pred, average="weighted", zero_division=0), 4),
    }


def fit_xgboost(X_train, y_train, X_test, y_test):
    # XGBoost needs labels 0..K-1: map the sorted training labels onto consecutive integers
    classes = sorted(y_train.unique())
    mapping = {old: new for new, old in enumerate(classes)}
    params = dict(xgb_base_params, num_class=len(classes))  # class count set per dataset
    model = xgb.XGBClassifier(**params)
    model.fit(X_train, y_train.map(mapping))
    # Predictions are in the mapped label space, so map the test labels the same way
    return evaluate(y_test.map(mapping), model.predict(X_test))


# ---------- 2. Train and evaluate every model on every fill method ----------
datasets = {}
for method in fill_methods:
    split = load_split(method)
    if split is not None:
        datasets[method] = split

# Keep results written by earlier runs if the file already exists
all_model_results = {}
if os.path.exists(RESULT_PATH):
    with open(RESULT_PATH, "r", encoding="utf-8") as file:
        all_model_results = json.load(file)

for model_name, make_model in model_factories.items():
    results = {}
    for method, (X_train, y_train, X_test, y_test) in datasets.items():
        model = make_model()
        model.fit(X_train, y_train)
        results[method] = evaluate(y_test, model.predict(X_test))
        print(f"Done: {model_name} on {method} data | accuracy {results[method]['准确率(Accuracy)']}")
    all_model_results[model_name] = results

all_model_results["XGBoost分类"] = {
    method: fit_xgboost(*split) for method, split in datasets.items()
}

# ---------- 3. Save the nested results: model -> fill method -> metric ----------
with open(RESULT_PATH, "w", encoding="utf-8") as file:
    json.dump(all_model_results, file, ensure_ascii=False, indent=4)
print(f"Results saved to {RESULT_PATH}")
```

import pandas as pd

import torch

import torch.nn as nn

import torch.optim as optim

import numpy as np

from sklearn.metrics import accuracy_score, precision_score, recall_score, f1_score

import json

# ———————- 1. 定义模型结构 ———————-

# 多层感知机(MLP)

class MLP(nn.Module):

def __init__(self, input_dim, hidden_dim, num_classes):

super(MLP, self).__init__()

self.fc1 = nn.Linear(input_dim, hidden_dim)

self.fc2 = nn.Linear(hidden_dim, hidden_dim * 2)

self.fc3 = nn.Linear(hidden_dim * 2, num_classes)

def forward(self, x):

x = torch.relu(self.fc1(x))

x = torch.relu(self.fc2(x))

x = self.fc3(x)

return x

# 一维卷积神经网络(CNN)

class CNN1D(nn.Module):

def __init__(self, input_dim, hidden_size, num_classes):

super(CNN1D, self).__init__()

self.conv1 = nn.Conv1d(in_channels=1, out_channels=16, kernel_size=3, padding=1)

self.conv2 = nn.Conv1d(in_channels=16, out_channels=32, kernel_size=3, padding=1)

self.conv3 = nn.Conv1d(in_channels=32, out_channels=64, kernel_size=3, padding=1)

self.relu = nn.ReLU()

self.fc = nn.Linear(64 * input_dim, num_classes) # 输入维度适配

def forward(self, x):

x = x.unsqueeze(1) # 增加通道维度(batch_size, 1, input_dim)

x = self.relu(self.conv1(x))

x = self.relu(self.conv2(x))

x = self.relu(self.conv3(x))

x = x.view(x.size(0), -1) # 展平

x = self.fc(x)

return x

# ---------------------- 2. Training and evaluation function ----------------------
def train_evaluate_model(model, X_train, y_train, X_test, y_test, criterion, optimizer, epochs=1500,
                         print_interval=100):
    for epoch in range(epochs):
        # Training mode (full-batch: the whole training set is used at every step)
        model.train()
        optimizer.zero_grad()
        outputs = model(X_train)
        loss = criterion(outputs, y_train)
        loss.backward()
        optimizer.step()

        # Log progress every print_interval epochs (monitoring only)
        if (epoch + 1) % print_interval == 0:
            model.eval()
            with torch.no_grad():
                test_pred = model(X_test).argmax(dim=1)
                test_acc = accuracy_score(y_test.cpu().numpy(), test_pred.cpu().numpy())
            print(f"Epoch [{epoch + 1}/{epochs}] | Loss: {loss.item():.4f} | Test Acc: {test_acc:.4f}")

    # Final evaluation
    model.eval()
    with torch.no_grad():
        y_pred = model(X_test).argmax(dim=1)

    # Move to CPU before computing metrics (also works when training on GPU)
    y_test_np = y_test.cpu().numpy()
    y_pred_np = y_pred.cpu().numpy()

    # Keys match the other models' entries in the combined results JSON
    metrics = {
        "准确率(Accuracy)": round(accuracy_score(y_test_np, y_pred_np), 4),
        "精确率(Precision)": round(precision_score(y_test_np, y_pred_np, average="weighted", zero_division=0), 4),
        "召回率(Recall)": round(recall_score(y_test_np, y_pred_np, average="weighted", zero_division=0), 4),
        "F1分数(F1-Score)": round(f1_score(y_test_np, y_pred_np, average="weighted", zero_division=0), 4)
    }
    return metrics

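# ---------------------- 3. Configuration and initialization ----------------------
# Imputation methods: median, mode, drop rows with missing values, mean, linear regression, random forest
fill_methods = ["中位数", "众数", "删除空数据行", "均值", "线性回归", "随机森林"]
mlp_result_data = {}
cnn_result_data = {}

# Pick the device automatically (GPU if available, otherwise CPU)
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
print(f"Using device: {device}")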

# ---------------------- 4. Train on the data from every imputation method ----------------------
for method in fill_methods:
    train_path = f"temp_data/训练数据集[{method}].xlsx"
    test_path = f"temp_data/测试数据集[{method}].xlsx"
    try:
        train_df = pd.read_excel(train_path)
        test_df = pd.read_excel(test_path)
    except FileNotFoundError:
        print(f"⚠️ Warning: no dataset found for {method}, skipping this method")
        continue

    # Split features and labels (original data)
    X_train_np = train_df.drop("矿物类型", axis=1).values.astype(np.float32)
    y_train_original = train_df["矿物类型"].values
    X_test_np = test_df.drop("矿物类型", axis=1).values.astype(np.float32)
    y_test_original = test_df["矿物类型"].values

    # Lightweight label handling: map labels to contiguous integers starting at 0
    # (avoids unused output neurons and dimension mismatches)
    unique_classes = sorted(np.unique(y_train_original))
    class_mapping = {old: new for new, old in enumerate(unique_classes)}
    y_train_np = np.array([class_mapping[label] for label in y_train_original])
    y_test_np = np.array([class_mapping[label] for label in y_test_original])
    num_classes = len(unique_classes)
    input_dim = X_train_np.shape[1]

    # Convert to PyTorch tensors on the selected device
    X_train = torch.tensor(X_train_np).to(device)
    y_train = torch.tensor(y_train_np, dtype=torch.long).to(device)
    X_test = torch.tensor(X_test_np).to(device)
    y_test = torch.tensor(y_test_np, dtype=torch.long).to(device)

    # ---------------------- Train the MLP ----------------------
    print(f"\n=== Training MLP on {method}-imputed data ===")
    mlp = MLP(input_dim=input_dim, hidden_dim=32, num_classes=num_classes).to(device)
    criterion = nn.CrossEntropyLoss()
    optimizer = optim.Adam(mlp.parameters(), lr=0.001)
    mlp_metrics = train_evaluate_model(mlp, X_train, y_train, X_test, y_test, criterion, optimizer)
    mlp_result_data[method] = mlp_metrics

    # ---------------------- Train the CNN ----------------------
    print(f"\n=== Training CNN on {method}-imputed data ===")
    cnn = CNN1D(input_dim=input_dim, hidden_size=10, num_classes=num_classes).to(device)
    optimizer = optim.Adam(cnn.parameters(), lr=0.001)
    cnn_metrics = train_evaluate_model(cnn, X_train, y_train, X_test, y_test, criterion, optimizer)
    cnn_result_data[method] = cnn_metrics

# ---------------------- 5. Save the results ----------------------
try:
    with open(r'temp_data/所有模型分类结果.json', 'r', encoding='utf-8') as file:
        all_model_results = json.load(file)
except FileNotFoundError:
    all_model_results = {}

all_model_results["神经网络(MLP)分类"] = mlp_result_data
all_model_results["卷积神经网络(CNN)分类"] = cnn_result_data

with open(r'temp_data/所有模型分类结果.json', 'w', encoding='utf-8') as file:
    json.dump(all_model_results, file, ensure_ascii=False, indent=4)

print("\n📊 MLP/CNN results saved to temp_data/所有模型分类结果.json")
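The CNN's fully connected layer is sized as 64 * input_dim, which only works because each convolution keeps the sequence length unchanged. A quick plain-Python check using the Conv1d output-length formula from the PyTorch documentation, L_out = floor((L_in + 2*padding - dilation*(kernel_size - 1) - 1) / stride) + 1; the helper names are hypothetical and not part of the project code:

```python
def conv1d_out_len(l_in, kernel_size=3, padding=1, stride=1, dilation=1):
    """Output length of a 1D convolution (formula from the PyTorch Conv1d docs)."""
    return (l_in + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


def cnn1d_flatten_size(input_dim, channels=(16, 32, 64)):
    """Flattened feature count after CNN1D's three stacked kernel_size=3, padding=1 convolutions."""
    length = input_dim
    for _ in channels:
        length = conv1d_out_len(length)  # length is preserved at every layer
    return channels[-1] * length


if __name__ == "__main__":
    for d in (5, 10, 13):
        print(d, conv1d_out_len(d), cnn1d_flatten_size(d))
```

If kernel_size or padding were changed (for example padding=0, which shortens each output by 2), the input size of self.fc would have to change with it.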

Stage 3: Result analysis and selection of the best combination

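Comparing the evaluation metrics of all 42 combinations, XGBoost with median imputation came out on top: it reached 99% test accuracy, and its precision, recall, and F1 score were all close to 1.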
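Assuming the combined results file has the nested layout the training scripts write (model name, then imputation method, then a metric dict), picking the best of the 42 combinations can be sketched as follows. The function names are hypothetical, and the demo numbers are dummies, not the article's results.

```python
import json


def load_results(path="temp_data/所有模型分类结果.json"):
    """Load the combined results written by the training scripts."""
    with open(path, "r", encoding="utf-8") as f:
        return json.load(f)


def best_combination(results, metric="准确率(Accuracy)"):
    """Return (model, imputation method, score) with the highest value of `metric`.

    On ties, the first combination encountered wins.
    """
    best = None
    for model_name, per_method in results.items():
        for method, metrics in per_method.items():
            score = metrics[metric]
            if best is None or score > best[2]:
                best = (model_name, method, score)
    return best


if __name__ == "__main__":
    # Dummy values for illustration only; they are NOT the article's results
    demo = {
        "XGBoost分类": {"中位数": {"准确率(Accuracy)": 0.9}, "均值": {"准确率(Accuracy)": 0.8}},
        "神经网络(MLP)分类": {"中位数": {"准确率(Accuracy)": 0.7}},
    }
    print(best_combination(demo))
```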

Why the best combination works

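Advantages of median imputation: mineral composition data can contain extreme values (for example, fluctuating impurity content). The median is far less sensitive to such outliers than the mean, so the filled values stay close to a typical sample and the model receives stable inputs. It also keeps every sample instead of deleting rows, which matters most when the dataset is small.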
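A toy illustration of why the median is the safer fill when a column contains an extreme value. The numbers are made up; in line with the article's rule of avoiding leakage, the fill value is computed on the training split only and then reused for the test split.

```python
import numpy as np
import pandas as pd

# Toy feature column with one extreme value and one gap (illustrative numbers only)
train = pd.DataFrame({"feature": [1.0, 2.0, 3.0, 4.0, 100.0, np.nan]})
test = pd.DataFrame({"feature": [np.nan, 2.5]})

# Statistics come from the training split only (NaN is skipped by default)
median_value = train["feature"].median()  # -> 3.0
mean_value = train["feature"].mean()      # -> 22.0, pulled up by the outlier

# Fill both splits with the training-set statistic
train_median = train.fillna({"feature": median_value})
test_median = test.fillna({"feature": median_value})
train_mean = train.fillna({"feature": mean_value})

print(median_value, mean_value)
print(test_median["feature"].tolist())
```

The mean-filled gap lands at 22.0, far from every ordinary value, while the median-filled gap sits at 3.0 among them.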

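Advantages of XGBoost: the composition features are related in complex, nonlinear ways, which gradient-boosted trees capture well. Parameters such as gamma (the minimum loss reduction required to make a split) and subsample (the fraction of training rows sampled for each tree) help curb overfitting, and in this multiclass task XGBoost was more accurate than the traditional models. The revised label handling (mapping labels to contiguous integers) also kept training stable.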

How the other combinations compared

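- Imputation methods: model-based imputation (linear regression, random forest) generally outperformed the simple fills but costs more to compute; dropping rows with missing values loses samples and generally gave lower accuracy.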

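- Models: ensemble methods (XGBoost, random forest) generally outperformed both the traditional machine learning models and the deep learning models. MLP and CNN lagged slightly, possibly because the dataset has relatively few feature dimensions, which limits what deep learning can add.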
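The article's linear-regression and random-forest fills come from its own fill module, which is not shown here. As a swapped-in alternative rather than the author's code, scikit-learn's IterativeImputer implements a closely related idea: it regresses each incomplete column on the other columns, and imputation_order="ascending" visits columns from fewest to most missing values, matching the ordering the article describes. Unlike a single sequential pass, it starts from a mean fill and iterates. The data below is synthetic.

```python
import numpy as np
from sklearn.experimental import enable_iterative_imputer  # noqa: F401 (exposes IterativeImputer)
from sklearn.impute import IterativeImputer
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import LinearRegression

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 4))
X[:, 3] = 2.0 * X[:, 0] - X[:, 1] + rng.normal(scale=0.1, size=200)  # a correlated column
X_train, X_test = X[:150].copy(), X[150:].copy()

# Knock out about 10% of the entries to simulate gaps
X_train[rng.random(X_train.shape) < 0.1] = np.nan
X_test[rng.random(X_test.shape) < 0.1] = np.nan


def make_imputer(estimator):
    # "ascending": columns with the fewest missing values are imputed first
    return IterativeImputer(estimator=estimator, imputation_order="ascending",
                            max_iter=5, random_state=0)


imputers = {
    "linear": make_imputer(LinearRegression()),
    "forest": make_imputer(RandomForestRegressor(n_estimators=20, random_state=0)),
}

filled = {}
for name, imp in imputers.items():
    imp.fit(X_train)  # per-column models are learned on the training split only
    filled[name] = (imp.transform(X_train), imp.transform(X_test))
```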

3. Key Problems and Solutions

Several typical problems came up during the reproduction; the fixes below may help with similar projects:

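SVM training hangs: a polynomial kernel with a high degree, combined with probability=True, made the computation explode (in scikit-learn, probability=True adds an internal 5-fold cross-validation to calibrate the probabilities). Lowering degree, turning probability off, and increasing cache_size resolved the stalls.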
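A sketch of the two configurations in scikit-learn on synthetic data. The specific values (degree=6 versus 2, cache_size=500 MB) are illustrative assumptions, not the article's actual settings.

```python
from sklearn.datasets import make_classification
from sklearn.svm import SVC

# Synthetic 4-class data standing in for the mineral features
X, y = make_classification(n_samples=300, n_features=8, n_informative=5,
                           n_classes=4, random_state=0)

# Settings that tend to stall (not fitted here): a high-degree polynomial kernel
# plus probability=True, which triggers an extra internal 5-fold cross-validation
slow_svc = SVC(kernel="poly", degree=6, probability=True)

# Lighter settings: lower degree, no probability calibration, larger kernel cache (in MB)
fast_svc = SVC(kernel="poly", degree=2, probability=False, cache_size=500)
fast_svc.fit(X, y)
pred = fast_svc.predict(X)
```

If class probabilities are not needed for evaluation (accuracy, precision, recall, and F1 only need predicted labels), probability=False costs nothing.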

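XGBoost label error: the labels were not contiguous integers, so multiclass training failed. The fix was a dynamic label mapping that converts the original labels into contiguous integers starting at 0, with the number of classes set from the data.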
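A minimal NumPy sketch of such a mapping; it is an illustration, not the author's implementation, and the labels are made up. np.unique(..., return_inverse=True) returns both the sorted class list and each label's 0-based index, and the class list doubles as the inverse mapping for predictions.

```python
import numpy as np

# Raw labels with gaps (e.g. a class removed during cleaning); illustrative only
raw_labels = np.array([1, 3, 7, 3, 1, 7])

# classes: sorted unique labels; encoded: each label's index into classes (0..K-1)
classes, encoded = np.unique(raw_labels, return_inverse=True)
num_class = len(classes)  # e.g. the num_class parameter of XGBoost's native training API

# Predictions come back as 0..K-1; index into classes to recover the original labels
decoded = classes[encoded]
print(classes, encoded, num_class)
```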

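Memory overload during training: enabling multithreading for logistic regression and XGBoost exhausted memory. Disabling multithreading or limiting the number of cores (through the n_jobs parameter) traded some speed for stability.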

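Device handling for deep learning: the code detects whether a GPU is available and moves the tensors to that device, and it wraps evaluation in torch.no_grad() so that no gradients are tracked, which reduces memory use.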

4. Summary and Outlook

4.1 Takeaways

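By systematically comparing 6 imputation methods with 7 classification models, this project found that median imputation + XGBoost performed best on this mineral classification task, and it walked through the complete workflow of data preprocessing, model tuning, and troubleshooting. The core conclusion: the quality of data preprocessing sets the ceiling for the model, and choosing an imputation method that suits the data matters more than reaching for a more complex model.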

4.2 Next Steps

- Deployment: wrap the best XGBoost model in a prediction interface so that new mineral samples can be classified in real time;

- Feature engineering: use XGBoost's feature importance scores to identify the key composition features and simplify the model;

- Model fusion: combine the predictions of XGBoost and random forest to push accuracy further;

- Data augmentation: given the small sample size, expand the training set with data augmentation to improve generalization.
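The feature-engineering and model-fusion items can be sketched together. This is a hypothetical example on synthetic data: scikit-learn's GradientBoostingClassifier stands in for XGBoost so the snippet runs without extra packages (XGBClassifier also provides fit, predict_proba, and feature_importances_), and fusion is done by soft voting, i.e. averaging the two models' predicted probabilities, which is one common way to combine classifiers.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the mineral feature table
X, y = make_classification(n_samples=400, n_features=8, n_informative=4,
                           n_classes=4, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0, stratify=y)

# GradientBoostingClassifier is a stand-in for XGBoost here
boost = GradientBoostingClassifier(random_state=0).fit(X_train, y_train)
forest = RandomForestClassifier(n_estimators=200, random_state=0).fit(X_train, y_train)

# Feature engineering: rank feature indices by the boosted model's importance scores
ranking = np.argsort(boost.feature_importances_)[::-1]
print("features by importance:", ranking.tolist())

# Model fusion (soft voting): average the class probabilities of both models.
# Both were fit on the same y_train, so their classes_ are in the same order.
proba = (boost.predict_proba(X_test) + forest.predict_proba(X_test)) / 2
fused_pred = boost.classes_[proba.argmax(axis=1)]
print("fused accuracy:", (fused_pred == y_test).mean())
```

scikit-learn's VotingClassifier with voting="soft" packages the same averaging into a single estimator.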

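Beyond reaching the accuracy target, reproducing this mineral classification system shows that machine learning is practical for geological work. The models and workflow can be refined further against real-world requirements to support mineral exploration and geological analysis more efficiently.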

Notes on the code:

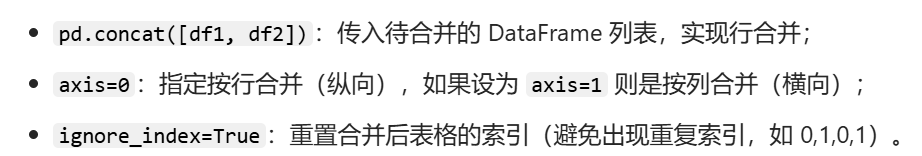

Concatenating tables:

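Somewhat counterintuitively, a "row merge" stacks two tables one on top of the other (axis=0), while a "column merge" places them side by side horizontally (axis=1).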
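With pandas' pd.concat, the two cases look like this (toy frames for illustration):

```python
import pandas as pd

top = pd.DataFrame({"a": [1, 2], "b": [3, 4]})
bottom = pd.DataFrame({"a": [5], "b": [6]})
right = pd.DataFrame({"c": [7, 8]})

# "Row merge": stack vertically, so the result gains rows (axis=0)
stacked = pd.concat([top, bottom], axis=0, ignore_index=True)

# "Column merge": place side by side, so the result gains columns (axis=1), aligned on the index
side_by_side = pd.concat([top, right], axis=1)

print(stacked.shape)       # (3, 2)
print(side_by_side.shape)  # (2, 3)
```

One way to remember it: the axis number names the direction along which the result grows.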

网硕互联 Help Center
