YOLOv11/v10/v8 tutorial: YOLOv11 from Beginner to Expert

YOLOv11 improvements index: roundup of updates to YOLOv11 and the author's own models

《SalM²: An Extremely Lightweight Saliency Mamba Model for Real-Time Cognitive Awareness of Driver Attention》

1. Module Introduction

Paper: https://ojs.aaai.org/index.php/AAAI/article/view/32157

Code: https://github.com/zhao-chunyu/SaliencyMamba

Paper at a glance:

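Driver attention recognition in driving scenes is an active research direction in traffic-scene perception. It aims to understand which specific targets/objects a human driver focuses on in the driving scene. However, a traffic scene contains not only a large amount of visual information but also semantic information related to the driving task, and existing methods pay little attention to the actual semantic information present in driving scenes. Moreover, a traffic scene is a complex, dynamic process that requires continuous attention to the objects relevant to the current driving task, while existing models, constrained by their underlying frameworks, tend to have many parameters and complex structures. The paper therefore proposes a real-time saliency Mamba network built on the recent Mamba architecture. As shown in Figure 1 of the paper, the model uses extremely few parameters (0.08M, only 0.09% to 11.16% of the parameter counts of other models) while maintaining SOTA performance or reaching more than 98% of the performance of the SOTA model.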

Summary: this post covers the code for the paper's CrossModelAtt module and how to use it.

⭐⭐ The derivative modules in this post are published only in the paid group; earlier free tutorials are linked below ⭐⭐

Roundup of YOLOv11 and custom-model updates (including free tutorials; group files updated 2024/11/08): https://xy2668825911.blog.csdn.net/article/details/143633356


2. Derivative Fusion Module

2.1 Code

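```python
# https://blog.csdn.net/StopAndGoyyy?spm=1011.2124.3001.5343
# SalM²: An Extremely Lightweight Saliency Mamba Model for Real-Time Cognitive Awareness of Driver Attention
# https://github.com/zhao-chunyu/SaliencyMamba
# https://ojs.aaai.org/index.php/AAAI/article/view/32157
import torch
import torch.nn as nn


class CrossModelAtt(nn.Module):
    """Channel-wise cross attention: image channels attend to text channels.

    Assumption: the YAML below feeds this block a single tensor (from = -1),
    so a single input is used for both branches (channel self-attention).
    """

    def __init__(self):
        super().__init__()
        # Learnable residual weight, zero-initialised so the block starts as an identity mapping.
        self.gamma = nn.Parameter(torch.zeros(1))
        self.softmax = nn.Softmax(dim=-1)

    def forward(self, x):
        # Accept [img_feat, text_feat] (two tensors of identical shape) or a single tensor.
        if isinstance(x, (list, tuple)):
            img_feat, text_feat = x
        else:
            img_feat = text_feat = x
        B, C, H, W = img_feat.shape
        q = img_feat.reshape(B, C, -1)                                   # [B, C, N]
        k = text_feat.reshape(B, C, -1).permute(0, 2, 1)                 # [B, N, C]
        attention_map = self.softmax(torch.bmm(q, k))                    # [B, C, C]
        v = text_feat.reshape(B, C, -1)                                  # [B, C, N]
        attention_info = torch.bmm(attention_map, v).view(B, C, H, W)    # [B, C, H, W]
        return self.gamma * attention_info + img_feat
```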

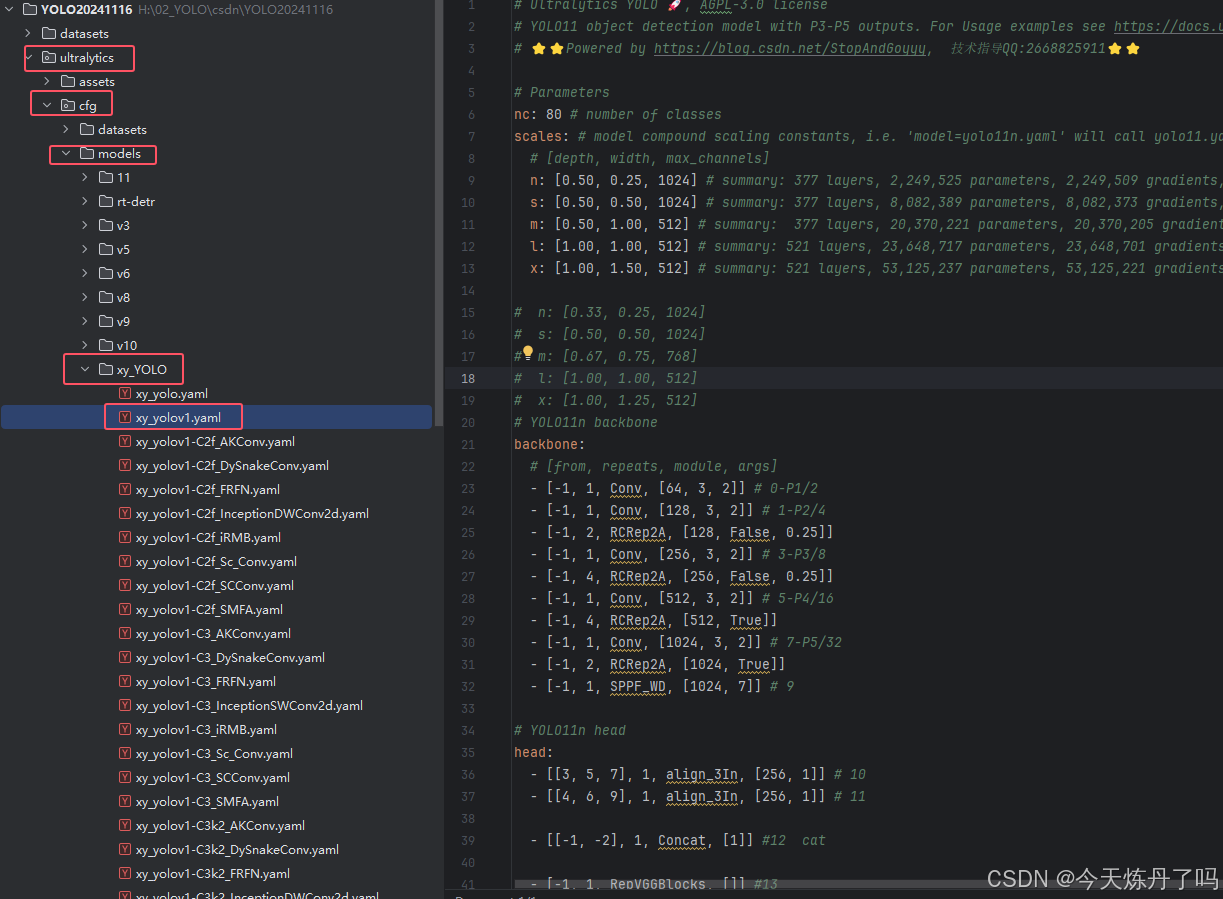
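To make the tensor shapes in `forward` concrete, here is a minimal NumPy restatement of the same computation. NumPy is used only so the sketch runs without PyTorch, and `channel_cross_attention` is a hypothetical helper name, not part of the paper's code. It shows that the attention map is C×C (attention runs between channels, not spatial positions) and that with the zero-initialised `gamma` the block starts out as an identity mapping.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along `axis`.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def channel_cross_attention(img_feat, text_feat, gamma):
    """Hypothetical NumPy restatement of CrossModelAtt.forward.

    img_feat, text_feat: arrays of identical shape (B, C, H, W).
    gamma: scalar residual weight (the PyTorch module initialises it to 0).
    """
    B, C, H, W = img_feat.shape
    q = img_feat.reshape(B, C, H * W)                      # (B, C, N)
    k = text_feat.reshape(B, C, H * W).transpose(0, 2, 1)  # (B, N, C)
    attn = softmax(q @ k, axis=-1)                         # (B, C, C) channel-to-channel weights
    v = text_feat.reshape(B, C, H * W)                     # (B, C, N)
    info = (attn @ v).reshape(B, C, H, W)                  # each image channel mixes text channels
    return gamma * info + img_feat, attn


rng = np.random.default_rng(0)
img = rng.standard_normal((2, 8, 4, 4))
txt = rng.standard_normal((2, 8, 4, 4))
out0, attn = channel_cross_attention(img, txt, gamma=0.0)
print(attn.shape)              # (2, 8, 8)
print(np.allclose(out0, img))  # True: with gamma = 0 the output equals the input
```

Because the attention map is C×C, its size does not grow with H×W, and the only learnable parameter of the module is the scalar `gamma`.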

2.2 Modify the YAML file (using the author's own model as an example)

YAML file explained: YOLO series ".yaml" file walkthrough (CSDN blog)

Open the yolo11.yaml file under ultralytics/cfg/models/11 and replace the original modules.

```yaml
# Ultralytics YOLO 🚀, AGPL-3.0 license
# YOLO11 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect
# ⭐⭐Powered by https://blog.csdn.net/StopAndGoyyy, technical support QQ: 2668825911⭐⭐

# Parameters
nc: 80 # number of classes
scales: # model compound scaling constants, i.e. 'model=yolo11n.yaml' will call yolo11.yaml with scale 'n'
  # [depth, width, max_channels]
  n: [0.50, 0.25, 1024] # summary: 377 layers, 2,249,525 parameters, 2,249,509 gradients, 8.7 GFLOPs/258 layers, 2,219,405 parameters, 0 gradients, 8.5 GFLOPs
  s: [0.50, 0.50, 1024] # summary: 377 layers, 8,082,389 parameters, 8,082,373 gradients, 29.8 GFLOPs/258 layers, 7,972,885 parameters, 0 gradients, 29.2 GFLOPs
  m: [0.50, 1.00, 512] # summary: 377 layers, 20,370,221 parameters, 20,370,205 gradients, 103.0 GFLOPs/258 layers, 20,153,773 parameters, 0 gradients, 101.2 GFLOPs
  l: [1.00, 1.00, 512] # summary: 521 layers, 23,648,717 parameters, 23,648,701 gradients, 124.5 GFLOPs/330 layers, 23,226,989 parameters, 0 gradients, 121.2 GFLOPs
  x: [1.00, 1.50, 512] # summary: 521 layers, 53,125,237 parameters, 53,125,221 gradients, 278.9 GFLOPs/330 layers, 52,191,589 parameters, 0 gradients, 272.1 GFLOPs
  # n: [0.33, 0.25, 1024]
  # s: [0.50, 0.50, 1024]
  # m: [0.67, 0.75, 768]
  # l: [1.00, 1.00, 512]
  # x: [1.00, 1.25, 512]

# YOLO11n backbone
backbone:
  # [from, repeats, module, args]
  - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2
  - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4
  - [-1, 2, RCRep2A, [128, False, 0.25]]
  - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8
  - [-1, 4, RCRep2A, [256, False, 0.25]]
  - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16
  - [-1, 4, RCRep2A, [512, True]]
  - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32
  - [-1, 2, RCRep2A, [1024, True]]
  - [-1, 1, CrossModelAtt, []] # 9

# YOLO11n head
head:
  - [[3, 5, 7], 1, align_3In, [256, 1]] # 10
  - [[4, 6, 9], 1, align_3In, [256, 1]] # 11
  - [[-1, -2], 1, Concat, [1]] # 12 cat
  - [-1, 1, RepVGGBlocks, []] # 13
  - [-1, 1, nn.Upsample, [None, 2, "nearest"]] # 14
  - [[-1, 4], 1, Concat, [1]] # 15 cat
  - [-1, 1, Conv, [256, 3]] # 16
  - [13, 1, Conv, [512, 3]] # 17
  - [13, 1, Conv, [1024, 3, 2]] # 18
  - [[16, 17, 18], 1, Detect, [nc]] # Detect(P3, P4, P5)
```


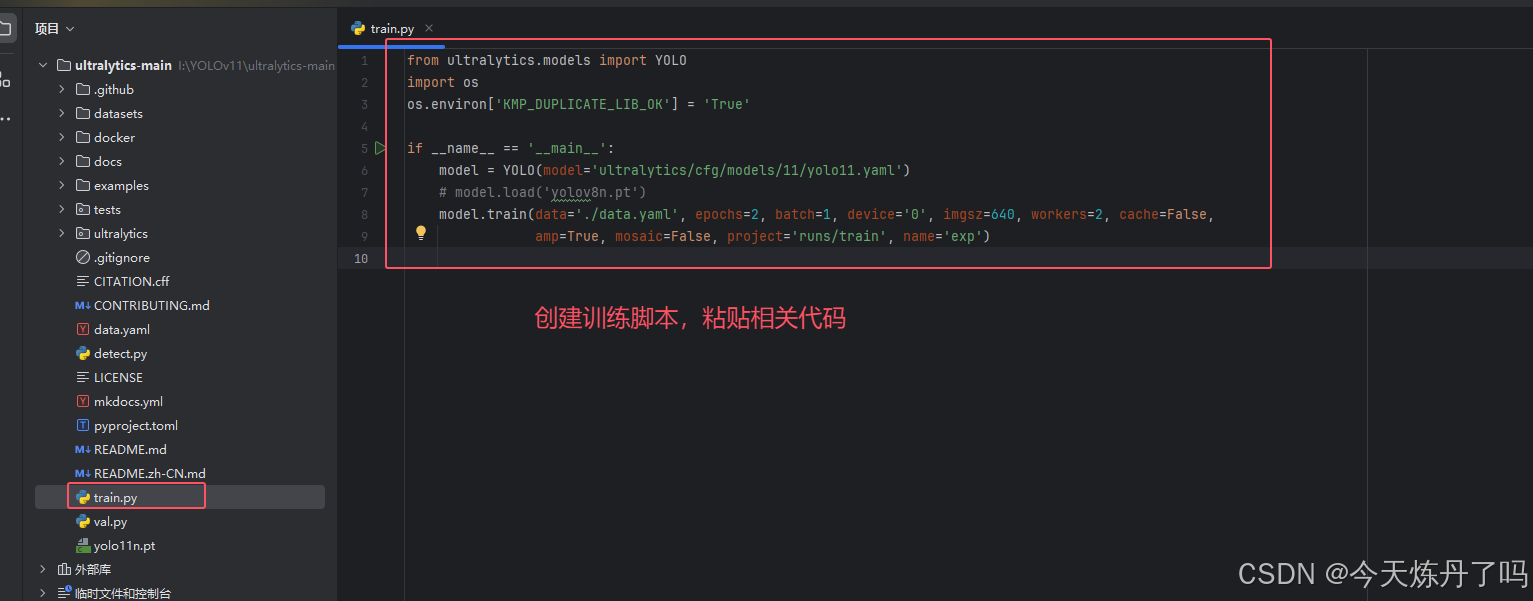
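The `scales` table and the `[from, repeats, module, args]` rows can be read with a little arithmetic. The sketch below assumes Ultralytics' `parse_model` rules as I understand them: repeats greater than 1 are multiplied by `depth` and rounded (minimum 1); for modules Ultralytics width-scales, such as `Conv`, the first arg is capped at `max_channels`, multiplied by `width`, and rounded up to a multiple of 8; negative `from` entries are relative to the current layer index. `scaled_channels`, `scaled_repeats` and `resolve_from` are hypothetical helpers for illustration, not Ultralytics functions, and whether the author's custom modules (RCRep2A, align_3In, RepVGGBlocks) are width-scaled depends on how they were registered.

```python
import math

# Copied from the `scales:` section above: scale -> (depth, width, max_channels).
SCALES = {
    "n": (0.50, 0.25, 1024),
    "s": (0.50, 0.50, 1024),
    "m": (0.50, 1.00, 512),
    "l": (1.00, 1.00, 512),
    "x": (1.00, 1.50, 512),
}


def make_divisible(x, divisor=8):
    # Round x up to the nearest multiple of `divisor`.
    return math.ceil(x / divisor) * divisor


def scaled_channels(c2, scale):
    # Hypothetical helper: output channels after width scaling, capped by max_channels.
    _, width, max_ch = SCALES[scale]
    return make_divisible(min(c2, max_ch) * width, 8)


def scaled_repeats(n, scale):
    # Hypothetical helper: repeats > 1 are scaled by depth and rounded, never below 1.
    depth = SCALES[scale][0]
    return max(round(n * depth), 1) if n > 1 else n


def resolve_from(layer_idx, f):
    # Hypothetical helper: turn relative (negative) `from` entries into absolute layer indices.
    fs = f if isinstance(f, list) else [f]
    resolved = [layer_idx + j if j < 0 else j for j in fs]
    return resolved if isinstance(f, list) else resolved[0]


print(scaled_channels(1024, "n"), scaled_channels(1024, "m"))  # 256 512
print(scaled_repeats(4, "n"))                                   # 2
print(resolve_from(15, [-1, 4]))                                # [14, 4]
```

For example, layer 15 `[[-1, 4], 1, Concat, [1]]` concatenates the outputs of layers 14 and 4, and at scale `n` the `Conv` with 1024 channels at layer 7 ends up with 256 output channels.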

2.3 Modify train.py

Create a training script.

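```python
import os

# Set before importing ultralytics/torch: works around the "OMP: Error #15" crash
# caused by duplicate OpenMP runtimes (common on Windows/conda setups).
os.environ['KMP_DUPLICATE_LIB_OK'] = 'TRUE'

from ultralytics.models import YOLO

if __name__ == '__main__':
    # Path to the modified model YAML (here, the author's own config).
    model = YOLO(model='ultralytics/cfg/models/xy_YOLO/xy_yolov1.yaml')
    # model = YOLO(model='ultralytics/cfg/models/11/yolo11l.yaml')

    # epochs=1, batch=1 and imgsz=320 look like quick smoke-test values; increase them for real training.
    model.train(data='./datasets/data.yaml', epochs=1, batch=1, device='0', imgsz=320, workers=1, cache=False,
                amp=True, mosaic=False, project='run/train', name='exp')
```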

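Put the path of your modified YAML file into train.py and run the script to start training. For how to create a dataset, see the tutorial linked below.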

YOLOv11 from Beginner to Expert tutorial (with architecture diagrams), CSDN blog
