Python Future 编程哲学:从承诺到现实的异步之旅

开篇:一个关于"承诺"的编程故事

2019 年深秋,我在重构一个数据采集系统时陷入了困境。系统需要同时调用 50 个第三方 API,等待它们返回后再做聚合分析。最初的同步代码简单粗暴:

results = []

for api in api_list:

result = call_api(api) # 每次等待 2-5 秒

results.append(result)

# 总耗时:50 * 3秒 ≈ 150秒

那天下午,我盯着监控面板上缓慢爬升的进度条,突然想起餐厅点餐的场景:你不会站在后厨门口等厨师炒完一道菜再点下一道,而是一次性点完所有菜,拿着一张"承诺单"(订单号)去座位等待。当菜做好了,服务员会叫号通知你。

这就是 Future 的编程哲学:用一个"承诺对象"代表尚未完成的计算结果。今天,我想带你深入理解 Python 中两种 Future 的异同,以及这种"承诺式编程"如何改变我们处理异步任务的方式。

第一章:理解 Future 的本质

什么是 Future?

Future 是一个代理对象,代表一个可能还没完成的操作。它有三个核心特征:

# 餐厅类比的代码表达

class OrderTicket: # 这就是 Future

def __init__(self, dish_name):

self.dish_name = dish_name

self.status = "pending" # 初始状态

self.result = None

def is_ready(self):

return self.status == "done"

def get_dish(self):

if not self.is_ready():

raise Exception("菜还没做好,请稍等!")

return self.result

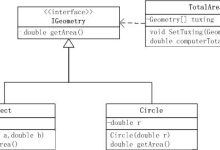

Python 中的两种 Future

Python 标准库提供了两套 Future 实现:

它们的设计初衷不同,但编程哲学一致。

第二章:concurrent.futures.Future——线程/进程的承诺

基础用法:线程池中的 Future

from concurrent.futures import ThreadPoolExecutor, as_completed

import requests

import time

def fetch_url(url):

"""模拟网络请求"""

print(f"开始请求:{url}")

response = requests.get(url, timeout=10)

print(f"完成请求:{url}")

return {

'url': url,

'status': response.status_code,

'length': len(response.text)

}

# 传统同步方式

def sync_fetch(urls):

start = time.time()

results = [fetch_url(url) for url in urls]

print(f"同步耗时:{time.time() – start:.2f}秒")

return results

# Future 并发方式

def future_fetch(urls, max_workers=5):

start = time.time()

results = []

with ThreadPoolExecutor(max_workers=max_workers) as executor:

# 提交任务,立即返回 Future 对象

future_to_url = {

executor.submit(fetch_url, url): url

for url in urls

}

# 按完成顺序处理结果

for future in as_completed(future_to_url):

url = future_to_url[future]

try:

result = future.result(timeout=15) # 获取结果

results.append(result)

except Exception as e:

print(f"✗ {url} 失败: {e}")

results.append({'url': url, 'error': str(e)})

print(f"Future 并发耗时:{time.time() – start:.2f}秒")

return results

# 测试对比

if __name__ == "__main__":

test_urls = [

"https://httpbin.org/delay/2",

"https://httpbin.org/delay/3",

"https://httpbin.org/delay/1",

"https://httpbin.org/delay/2",

"https://httpbin.org/delay/1"

]

# sync_fetch(test_urls) # 约 9 秒

future_fetch(test_urls, max_workers=5) # 约 3 秒

深入探索:Future 的状态机

from concurrent.futures import ThreadPoolExecutor

import time

def long_task(n):

"""耗时任务"""

time.sleep(n)

return f"任务完成,耗时 {n} 秒"

# 演示 Future 的生命周期

with ThreadPoolExecutor() as executor:

future = executor.submit(long_task, 3)

# 状态 1:pending

print(f"任务已提交")

print(f"是否运行中:{future.running()}") # False 或 True

print(f"是否完成:{future.done()}") # False

# 尝试获取结果(会阻塞)

time.sleep(1)

print(f"等待 1 秒后…")

print(f"是否完成:{future.done()}") # 仍是 False

# 状态 2:done

result = future.result() # 阻塞直到完成

print(f"结果:{result}")

print(f"是否完成:{future.done()}") # True

# 多次调用 result() 不会重复执行任务

print(f"再次获取:{future.result()}")

实战技巧:回调机制

def process_result(future):

"""Future 完成时的回调函数"""

try:

result = future.result()

print(f"✓ 回调处理:{result['url']} – {result['status']}")

except Exception as e:

print(f"✗ 回调失败:{e}")

def fetch_with_callback(urls):

"""使用回调处理结果"""

with ThreadPoolExecutor(max_workers=5) as executor:

for url in urls:

future = executor.submit(fetch_url, url)

# 注册回调:任务完成后自动调用

future.add_done_callback(process_result)

print("所有任务已提交,主线程可以做其他事情…")

time.sleep(5) # 模拟主线程继续工作

concurrent.futures.Future 的特点总结

优势:

- 简单易用,适合线程池/进程池场景

- 天然支持多核并行(ProcessPoolExecutor)

- 与传统同步代码兼容性好

局限:

- 基于线程/进程,资源开销较大

- 不适合超高并发场景(如 10000+ 连接)

- 阻塞式的 result() 调用可能影响性能

第三章:asyncio.Future——协程的承诺

基础用法:异步世界的 Future

import asyncio

import aiohttp

import time

async def async_fetch_url(session, url):

"""异步网络请求"""

print(f"开始请求:{url}")

async with session.get(url, timeout=10) as response:

text = await response.text()

print(f"完成请求:{url}")

return {

'url': url,

'status': response.status,

'length': len(text)

}

async def asyncio_future_fetch(urls):

"""使用 asyncio.Future 的方式"""

start = time.time()

results = []

async with aiohttp.ClientSession() as session:

# 创建任务(是 Future 的子类)

tasks = [

asyncio.create_task(async_fetch_url(session, url))

for url in urls

]

# 等待所有任务完成

for task in asyncio.as_completed(tasks):

try:

result = await task

results.append(result)

except Exception as e:

print(f"✗ 任务失败: {e}")

print(f"asyncio 耗时:{time.time() – start:.2f}秒")

return results

# 运行异码

if __name__ == "__main__":

test_urls = [

"https://httpbin.org/delay/2",

"https://httpbin.org/delay/3",

"https://httpbin.org/delay/1"

] * 10 # 30 个请求

asyncio.run(asyncio_future_fetch(test_urls)) # 约 3 秒(高并发)

深入探索:手动创建 asyncio.Future

async def manual_future_demo():

"""手动操作 asyncio.Future"""

loop = asyncio.get_event_loop()

# 创建一个空的 Future

future = loop.create_future()

async def set_result_later():

"""2 秒后设置结果"""

await asyncio.sleep(2)

future.set_result("异步任务完成!")

# 启动后台任务

asyncio.create_task(set_result_later())

print("Future 已创建,等待结果…")

print(f"是否完成:{future.done()}") # False

# 等待 Future 完成

result = await future

print(f"结果:{result}")

print(f"是否完成:{future.done()}") # True

asyncio.run(manual_future_demo())

实战案例:带超时的异步爬虫

async def fetch_with_timeout(session, url, timeout=5):

"""带超时控制的异步请求"""

try:

# asyncio.wait_for 也返回 Future

result = await asyncio.wait_for(

async_fetch_url(session, url),

timeout=timeout

)

return result

except asyncio.TimeoutError:

return {'url': url, 'error': 'timeout'}

async def robust_async_crawler(urls, concurrency=10, timeout=5):

"""健壮的异步爬虫:并发控制 + 超时 + 重试"""

semaphore = asyncio.Semaphore(concurrency)

async def fetch_with_limit(session, url):

async with semaphore: # 限制并发数

for attempt in range(3): # 重试 3 次

try:

return await fetch_with_timeout(session, url, timeout)

except Exception as e:

if attempt == 2:

return {'url': url, 'error': str(e)}

await asyncio.sleep(2 ** attempt) # 指数退避

async with aiohttp.ClientSession() as session:

tasks = [fetch_with_limit(session, url) for url in urls]

results = await asyncio.gather(*tasks, return_exceptions=True)

return results

# 测试

if __name__ == "__main__":

urls = [f"https://httpbin.org/delay/{i%5}" for i in range(50)]

results = asyncio.run(robust_async_crawler(urls, concurrency=20))

print(f"成功:{sum(1 for r in results if 'error' not in r)}/50")

asyncio.Future 的特点总结

优势:

- 单线程高并发,资源占用极低

- 适合 I/O 密集型任务(网络、文件)

- 与 async/await 语法无缝集成

局限:

- 需要生态支持(必须用 aiohttp 等异步库)

- 学习曲线陡峭,调试复杂

- CPU 密集型任务无优势

第四章:两种 Future 的核心异同

相同点:承诺的哲学

# 共同的接口设计

class GenericFuture:

def done(self) –> bool:

"""是否完成"""

pass

def result(self, timeout=None):

"""获取结果(可能阻塞)"""

pass

def exception(self, timeout=None):

"""获取异常"""

pass

def add_done_callback(self, fn):

"""添加完成回调"""

pass

def cancel(self) –> bool:

"""尝试取消任务"""

pass

关键差异对比表

| 运行模型 | 多线程/多进程 | 单线程协程 |

| 阻塞方式 | result() 阻塞线程 | await 让出控制权 |

| 并发上限 | 受线程数限制(通常 < 1000) | 轻松支持 10000+ |

| 适用场景 | CPU 密集 + I/O 密集 | I/O 密集型 |

| 资源开销 | 高(每线程 MB) | 低(每协程 ~1KB) |

| 生态依赖 | 标准库即可 | 需要异步库支持 |

| 调试难度 | 较简单 | 复杂(需理解事件循环) |

实战对比:相同任务的不现

import time

from concurrent.futures import ThreadPoolExecutor

import asyncio

import aiohttp

# 模拟任务:请求 100 个 URL

test_urls = [f"https://httpbin.org/delay/1?id={i}" for i in range(100)]

# 方案 1:concurrent.futures

def test_concurrent_futures():

start = time.time()

with ThreadPoolExecutor(max_workers=20) as executor:

futures = [executor.submit(requests.get, url) for url in test_urls]

results = [f.result() for f in futures]

print(f"ThreadPoolExecutor: {time.time() – start:.2f}秒")

# 方案 2:asyncio

async def test_asyncio():

start = time.time()

async with aiohttp.ClientSession() as session:

tasks = [session.get(url) for url in test_urls]

results = await asyncio.gather(*tasks)

print(f"asyncio: {time.time() – start:.2f}秒")

# 结果:

# ThreadPoolExecutor: ~6-8 秒(受线程切换开销影响)

# asyncio: ~4-5 秒(单线程高并发)

第五章:混合使用——最佳实践

场景 1:在 asyncio 中运行阻塞代码

import asyncio

from concurrent.futures import ThreadPoolExecutor

def blocking_io_task(n):

"""阻塞式 I/O(如读取大文件)"""

time.sleep(n)

return f"阻塞任务完成:{n}秒"

async def async_main():

"""在异步代码中运行阻塞函数"""

loop = asyncio.get_event_loop()

# 使行器

with ThreadPoolExecutor() as pool:

# 将阻塞函数转为 asyncio.Future

result = await loop.run_in_executor(

pool,

blocking_io_task,

3

)

print(result)

asyncio.run(async_main())

场景 2:在多线程中使用 asyncio

from concurrent.futures import ThreadPoolExecutor

import asyncio

async def async_worker(worker_id):

"""异步工作函数"""

await asyncio.sleep(1)

return f"Worker {worker_id} 完成"

def run_async_in_thread(worker_id):

"""在线程中运行异步代码"""

return asyncio.run(async_worker(worker_id))

# 主线程启动多个线程,每个线程运行异步任务

with ThreadPoolExecutor(max_workers=5) as executor:

futures = [

executor.submit(run_async_in_thread, i)

for i in range(10)

]

results = [f.result() for f in futures]

print(results)

场景 3:混合并发架构

async def hybrid_crawler(urls):

"""混合架构:asyncio 爬取 + 多进程处理"""

from concurrent.futures import ProcessPoolExecutor

# 阶段 1:异步高并发爬取

async with aiohttp.ClientSession() as session:

tasks = [session.get(url) for url in urls]

responses = await asyncio.gather(*tasks)

html_contents = [await r.text() for r in responses]

# 阶段 2:CPU 密集型处理(多进程)

def cpu_intensive_parse(html):

# 复杂的数据提取、NLP 分析等

import re

return len(re.findall(r'\\w+', html))

loop = asyncio.get_event_loop()

with ProcessPoolExecutor() as pool:

# 将进程池的 Future 转为 asyncio.Future

tasks = [

loop.run_in_executor(pool, cpu_intensive_parse, html)

for html in html_contents

]

results = await asyncio.gather(*tasks)

return results

第六章:从 Future 到 Promise——编程范式的演进

JavaScript 的 Promise 对比

// JavaScript Promise

fetch('https://api.example.com/data')

.then(response => response.json())

.then(data => console.log(data))

.catch(error => console.error(error));

# Python asyncio.Future (类似)

async def python_future():

async with aiohttp.ClientSession() as session:

response = await session.get('https://api.example.com/data')

data = await response.json()

print(data)

Future 体现的编程哲学

# 组合多个 Future

async def combined_futures():

# 同时等待多个任务

results = await asyncio.gather(

fetch_data_from_api1(),

fetch_data_from_api2(),

fetch_data_from_api3()

)

# 任何一个完成就返回

result = await asyncio.wait(

[task1, task2, task3],

return_when=asyncio.FIRST_COMPLETED

)

第七章:实战决策指南

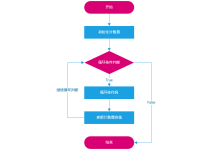

决策树

def choose_future_type(task_characteristics):

"""

选择 Future 类型的决策树

参数:

task_characteristics = {

'type': 'io' 或 'cpu',

'concurrency': 并发数,

'has_async_lib': 是否有异步库支持,

'complexity': 'simple' 或 'complex'

}

"""

if task_characteristics['type'] == 'cpu':

return "concurrent.futures.ProcessPoolExecutor"

# I/O 密集型

if task_characteristics['concurrency'] < 100:

if task_characteristics['complexity'] == 'simple':

return "concurrent.futures.ThreadPoolExecutor(简单够用)"

else:

return "asyncio(更高效)"

# 高并发场景

if task_characteristics['has_async_lib']:

return "asyncio.Future(必选)"

else:

return "考虑引入异步库或用 ThreadPoolExecutor(上限约 500 并发)"

# 示例决策

print(choose_future_type({

'type': 'io',

'concurrency': 1000,

'has_async_lib': True,

'complexity': 'complex'

}))

# 输出:asyncio.Future(必选)

常见陷阱与解决方案

# 陷阱 1:在 asyncio 中忘记 await

async def wrong_way():

future = asyncio.create_task(some_async_func())

# 错误:没有 await,任务可能还没完成就继续了

print(future.result()) # 抛出 InvalidStateError

async def right_way():

future = asyncio.create_task(some_async_func())

result = await future # 正确:等待完成

print(result)

# 陷阱 2:混用阻塞和非阻塞调用

async def blocking_mistake():

# 错误:requests.get 会阻塞整个事件循环

response = requests.get('https://example.com')

# 正确:使用 aiohttp

async with aiohttp.ClientSession() as session:

response = await session.get('https://example.com')

# 陷阱 3:忘记异常处理

async def handle_errors_properly():

tasks = [risky_async_func() for _ in range(10)]

# 错误:一个失败导致全部失败

# results = await asyncio.gather(*tasks)

# 正确:捕获异常

results = await asyncio.gather(*tasks, return_exceptions=True)

for i, result in enumerate(results):

if isinstance(result, Exception):

print(f"任务 {i} 失败:{result}")

终章:我的实战建议

经过多年踩坑,我总结出这些心得:

1. 从需求出发,不要过度设计

# 小项目(< 50 个请求)

def simple_enough(urls):

return [requests.get(url) for url in urls]

# 中等项目(50-500 个)

def use_thread_pool(urls):

with ThreadPoolExecutor(max_workers=20) as executor:

return list(executor.map(requests.get, urls))

# 大项目(500+ 个或需要实时性)

async def use_asyncio(urls):

async with aiohttp.ClientSession() as session:

tasks = [session.get(url) for url in urls]

return await asyncio.gather(*tasks)

2. 监控 Future 的生命周期

import logging

logging.basicConfig(level=logging.INFO)

async def monitored_task(task_name):

"""带监控的异步任务"""

logging.info(f"[{task_name}] 开始")

try:

await asyncio.sleep(1)

logging.info(f"[{task_name}] 完成")

return f"{task_name} 成功"

except Exception as e:

logging.error(f"[{task_name}] 失败:{e}")

raise

3. 善用工具调试

# 调试 asyncio

import asyncio

# 启用调试模式

asyncio.run(main(), debug=True)

# 监控未完成的任务

async def check_pending_tasks():

tasks = [t for t in asyncio.all_tasks() if not t.done()]

print(f"当前有 {len(tasks)} 个未完成任务")

结语:Future 的未来

Future 不仅是一个编程工具,更是一种思维方式:将现在的行动与未来的结果解耦。这种"承诺式编程"在现代软件架构中无处不在:

- 微服务中的异步消息队列

- 前端的 Promise/async-await

- 响应式编程的 Observable

你的选择:

- 简单任务 → concurrent.futures.ThreadPoolExecutor

- 高并发 I/O → asyncio.Future

- CPU 密集 → concurrent.futures.ProcessPoolExecutor

- 复杂系统 → 混合使用

讨论时间:

- 你在项目中如何选择并发方案?

- 遇到过哪些与 Future 相关的坑?

- 你认为 Python 的异步生态还需要哪些改进?

欢迎在评论区分享你的故事,让我们一起构建更高效的异步世界!

延伸阅读:

- PEP 3148 – concurrent.futures 设计文档

- PEP 3156 – asyncio 规范

- 《流畅的 Python》第 17-18 章:深入理解 Future

- Trio 框架:下一代异步 I/O 库

愿你的每个 Future 都能如期兑现承诺!🚀

网硕互联帮助中心

网硕互联帮助中心

评论前必须登录!

注册