Author: 昇腾实战派

SGLang knowledge map: https://blog.csdn.net/weixin_41406651/article/details/156754353?spm=1001.2014.3001.5502

Background

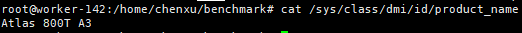

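The Qwen3-235B model can be deployed on either the Atlas 800I A3 or the Atlas 800T A3. Using the Atlas 800T A3 as the example, this document records a 1P2D configuration (one Prefill node and two Decode nodes) for deploying Qwen3-235B with the SGLang framework. The configuration is tuned for large language model inference and is particularly suited to production environments that need high throughput.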

1. Versions and environment configuration

- Machine model: Atlas 800T A3

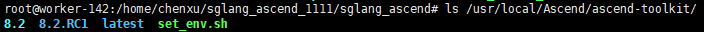

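- CANN version: 8.2.RC1

- SGLang branch (this branch differs from main: it is still under development, has not been merged yet, and only contains optimizations for the Qwen series):

git clone https://github.com/ping1jing2/sglang.git

cd sglang

git checkout -b main_qwen origin/main_qwen

- Docker image version:

# docker.io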

docker pull lmsysorg/sglang:main-cann8.3.rc1-910b # a2

docker pull lmsysorg/sglang:main-cann8.3.rc1-a3 # a3

# Mirror registry in mainland China

docker pull swr.cn-southwest-2.myhuaweicloud.com/base_image/dockerhub/lmsysorg/sglang:main-cann8.3.rc1-910b # a2

docker pull swr.cn-southwest-2.myhuaweicloud.com/base_image/dockerhub/lmsysorg/sglang:main-cann8.3.rc1-a3 # a3

- triton-ascend & torch_npu update: https://wiki.huawei.com/domains/119266/wiki/243400/WIKI202511259151404?title=_fde87425

- sgl-kernel-npu must be built manually:

git clone https://github.com/sgl-project/sgl-kernel-npu

cd sgl-kernel-npu

bash build.sh

pip install output/sgl_kernel_npu*.whl

- set_env.sh. Note: source it manually before starting the router:

source /usr/local/Ascend/ascend-toolkit/set_env.sh

export LD_LIBRARY_PATH=/usr/local/Ascend/driver/lib64:/usr/local/Ascend/driver/lib64/common:/usr/local/Ascend/driver/lib64/driver:$LD_LIBRARY_PATH

export ASCEND_TOOLKIT_HOME=/usr/local/Ascend/ascend-toolkit/latest

export LD_LIBRARY_PATH=${ASCEND_TOOLKIT_HOME}/lib64:${ASCEND_TOOLKIT_HOME}/lib64/plugin/opskernel:${ASCEND_TOOLKIT_HOME}/lib64/plugin/nnengine:${ASCEND_TOOLKIT_HOME}/opp/built-in/op_impl/ai_core/tbe/op_tiling/lib/linux/$(arch):$LD_LIBRARY_PATH

export LD_LIBRARY_PATH=${ASCEND_TOOLKIT_HOME}/tools/aml/lib64:${ASCEND_TOOLKIT_HOME}/tools/aml/lib64/plugin:$LD_LIBRARY_PATH

export PYTHONPATH=${ASCEND_TOOLKIT_HOME}/python/site-packages:${ASCEND_TOOLKIT_HOME}/opp/built-in/op_impl/ai_core/tbe:$PYTHONPATH

export PATH=${ASCEND_TOOLKIT_HOME}/bin:${ASCEND_TOOLKIT_HOME}/compiler/ccec_compiler/bin:${ASCEND_TOOLKIT_HOME}/tools/ccec_compiler/bin:$PATH

export ASCEND_AICPU_PATH=${ASCEND_TOOLKIT_HOME}

export ASCEND_OPP_PATH=${ASCEND_TOOLKIT_HOME}/opp

export TOOLCHAIN_HOME=${ASCEND_TOOLKIT_HOME}/toolkit

export ASCEND_HOME_PATH=${ASCEND_TOOLKIT_HOME}
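Before launching any service, it can help to confirm that the exports above actually took effect in the current shell. The following is a minimal sketch, not part of the original setup: the function name `check_ascend_env` is hypothetical, and it only checks the variables and two of the path entries listed in this section.

```python
import os

# Variables that the exports in this section are expected to define.
REQUIRED_VARS = [
    "ASCEND_TOOLKIT_HOME",
    "ASCEND_OPP_PATH",
    "ASCEND_AICPU_PATH",
    "ASCEND_HOME_PATH",
    "TOOLCHAIN_HOME",
]


def check_ascend_env(env):
    """Return a list of problems found; an empty list means the checks passed.

    `check_ascend_env` is a hypothetical helper, not an Ascend or SGLang API.
    """
    problems = [f"{name} is not set" for name in REQUIRED_VARS if not env.get(name)]
    home = env.get("ASCEND_TOOLKIT_HOME")
    if home:
        # set_env.sh prepends ${ASCEND_TOOLKIT_HOME}/lib64 to LD_LIBRARY_PATH.
        if f"{home}/lib64" not in env.get("LD_LIBRARY_PATH", "").split(":"):
            problems.append(f"{home}/lib64 is missing from LD_LIBRARY_PATH")
        # set_env.sh prepends ${ASCEND_TOOLKIT_HOME}/bin to PATH.
        if f"{home}/bin" not in env.get("PATH", "").split(":"):
            problems.append(f"{home}/bin is missing from PATH")
    return problems


if __name__ == "__main__":
    for problem in check_ascend_env(os.environ):
        print("WARN:", problem)
```

Running the script in the same shell that will start the router prints one warning per missing item and prints nothing when everything checked is in place.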

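2. Service startup script

Service startup configuration

The following script is shared by the P node, the D nodes, and sgl-router; only the P-node and D-node parameters need to be changed.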
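The script decides which role to start by comparing the first two addresses reported by `hostname -I` against the `P_IP` and `D_IP` arrays, and uses the array index as the node rank. That selection logic can be sketched in Python as follows. The function name `select_role` and the sample addresses (taken from the 192.0.2.0/24 documentation range) are illustrative assumptions, not values from the original setup.

```python
def select_role(local_ips, p_ips, d_ips):
    """Return (role, node_rank) for this host, or (None, None) if it matches neither list.

    Mirrors the two `for` loops in the shell script: the prefill list is checked
    first, and the index of the matching entry becomes the node rank.
    """
    for rank, ip in enumerate(p_ips):
        if ip in local_ips:
            return "prefill", rank
    for rank, ip in enumerate(d_ips):
        if ip in local_ips:
            return "decode", rank
    return None, None


if __name__ == "__main__":
    # Hypothetical addresses standing in for the redacted x.x.x.x values.
    p_ips = ["192.0.2.10"]
    d_ips = ["192.0.2.11", "192.0.2.12"]
    # The shell script only looks at the first two addresses of `hostname -I`.
    local_ips = ["192.0.2.12", "10.0.0.7"]
    print(select_role(local_ips, p_ips, d_ips))
```

A host that appears in neither list gets no role, which corresponds to the script falling through both loops without launching a server.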

# 235b_run.sh

# docker exec -it sglang_perf_b150 bash

pkill -9 python

pkill -9 sglang

echo performance | tee /sys/devices/system/cpu/cpu*/cpufreq/scaling_governor

sysctl -w vm.swappiness=0

sysctl -w kernel.numa_balancing=0

sysctl -w kernel.sched_migration_cost_ns=50000

export SGLANG_SET_CPU_AFFINITY=1

# Set PYTHONPATH

cd /home/sglang_ascend

export PYTHONPATH=${PWD}/python:$PYTHONPATH

unset https_proxy

unset http_proxy

unset HTTPS_PROXY

unset HTTP_PROXY

unset ASCEND_LAUNCH_BLOCKING

source /usr/local/Ascend/ascend-toolkit/latest/opp/vendors/customize/bin/set_env.bash

#export SGLANG_EXPERT_DISTRIBUTION_RECORDER_DIR=/home///sglang_ascend_1111/sglang_ascend/hot_map

export PYTORCH_NPU_ALLOC_CONF=expandable_segments:True

export SGLANG_DEEPEP_NUM_MAX_DISPATCH_TOKENS_PER_RANK=16

MODEL_PATH=/mnt/share/weights/Qwen3-235B-A22B-W8A8

# PD transfer: set the IP to the first P node

export ASCEND_MF_STORE_URL="tcp://x.x.x.x:24667"

# P node IP

P_IP=('x.x.x.x')

# D node IPs

#D_IP=('x.x.x.x')

D_IP=('x.x.x.x' 'x.x.x.x')

#export SGLANG_ENABLE_TORCH_COMPILE=1

export SGLANG_DISAGGREGATION_BOOTSTRAP_TIMEOUT=600

LOCAL_HOST1=`hostname -I|awk -F " " '{print$1}'`

LOCAL_HOST2=`hostname -I|awk -F " " '{print$2}'`

echo "${LOCAL_HOST1}"

echo "${LOCAL_HOST2}"

for i in "${!P_IP[@]}";

do

if [[ "$LOCAL_HOST1" == "${P_IP[$i]}" || "$LOCAL_HOST2" == "${P_IP[$i]}" ]];

then

echo "${P_IP[$i]}"

source /usr/local/Ascend/ascend-toolkit/set_env.sh

source /usr/local/Ascend/nnal/atb/set_env.sh

export HCCL_BUFFSIZE=3000

export TASK_QUEUE_ENABLE=2

export HCCL_SOCKET_IFNAME=lo

export GLOO_SOCKET_IFNAME=lo

export STREAMS_PER_DEVICE=32

export ENABLE_ASCEND_MOE_NZ=1

export DEEP_NORMAL_MODE_USE_INT8_QUANT=1

# export ENABLE_PROFILING=1

# P node

python -m sglang.launch_server --model-path ${MODEL_PATH} --disaggregation-mode prefill \
--host ${P_IP[$i]} --port 8000 --disaggregation-bootstrap-port 8995 --trust-remote-code \
--nnodes 1 --node-rank $i --tp-size 16 --dp-size 8 --mem-fraction-static 0.6 \
--disable-radix-cache \
--ep-dispatch-algorithm static --init-expert-location /homesglang_ascend/hot_map/expert_distribution_recorder_1763480391.7582676.pt \
--attention-backend ascend --device npu --quantization w8a8_int8 --disaggregation-transfer-backend ascend \
--max-running-requests 128 --chunked-prefill-size 114688 --max-prefill-tokens 458880 \
--disable-overlap-schedule --enable-dp-attention --tokenizer-worker-num 4 \
--moe-a2a-backend deepep --deepep-mode normal --dtype bfloat16

NODE_RANK=$i

break

fi

done

for i in "${!D_IP[@]}";

do

if [[ "$LOCAL_HOST1" == "${D_IP[$i]}" || "$LOCAL_HOST2" == "${D_IP[$i]}" ]];

then

echo "${D_IP[$i]}"

source /usr/local/Ascend/ascend-toolkit/set_env.sh

source /usr/local/Ascend/nnal/atb/set_env.sh

export DP_ROUND_ROBIN=1

export SGLANG_DEEPEP_NUM_MAX_DISPATCH_TOKENS_PER_RANK=60

export HCCL_BUFFSIZE=512

export HCCL_SOCKET_IFNAME=data0.3001

export GLOO_SOCKET_IFNAME=data0.3001

export STREAMS_PER_DEVICE=32

# export ENABLE_ASCEND_MOE_NZ=1

# export ENABLE_PROFILING=1

# D node

python -m sglang.launch_server --model-path ${MODEL_PATH} --disaggregation-mode decode \
--host ${D_IP[$i]} --port 8001 --trust-remote-code \
--nnodes 2 --node-rank $i --tp-size 32 --dp-size 16 --mem-fraction-static 0.83 --max-running-requests 960 \
--attention-backend ascend --device npu --quantization w8a8_int8 --enable-dp-attention \
--moe-a2a-backend ascend_fuseep --cuda-graph-bs 6 8 12 15 18 20 22 24 30 36 42 48 54 56 58 60 \
--dist-init-addr 172.27.1.143:5000 \
--disaggregation-transfer-backend ascend --watchdog-timeout 9000 --context-length 8192 \
--prefill-round-robin-balance --enable-dp-lm-head --tokenizer-worker-num 4 --dtype bfloat16

NODE_RANK=$i

break

fi

done

exit 1

python -m sglang_router.launch_router \
--pd-disaggregation \
--policy cache_aware \
--prefill http://x.x.x.x:8000 8995 \
--decode http://x.x.x.x:8001 \
--host 127.0.0.1 \
--port 6688 \
--mini-lb
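Once the router is listening on 127.0.0.1:6688, a single request is a quick way to confirm that the P and D nodes are reachable through it. The sketch below assumes the router forwards the OpenAI-compatible `/v1/chat/completions` route, which is the kind of chat endpoint the benchmark in the next section targets; the route, the helper names, and the `max_tokens` value are assumptions to verify against the deployed SGLang version.

```python
import json
import urllib.request

ROUTER_URL = "http://127.0.0.1:6688"  # --host and --port passed to the router


def build_chat_request(prompt, model="Qwen3", max_tokens=32):
    """Build (without sending) a POST request for the assumed chat route."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": 0,
    }
    return urllib.request.Request(
        f"{ROUTER_URL}/v1/chat/completions",  # assumed OpenAI-compatible route
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=60):
    """Send the request and decode the JSON reply; requires a running router."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    print(send(build_chat_request("Hello")))
```

Building the request separately from sending it makes it easy to inspect the payload before the service is up.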

3. ais_bench configuration

3.1 Modify the test parameters

Adjust the Python test configuration:

vim ais_bench/benchmark/configs/models/vllm_api/vllm_api_stream_chat.py

# vllm_api_stream_chat.py

from ais_bench.benchmark.models import VLLMCustomAPIChatStream
from ais_bench.benchmark.utils.model_postprocessors import extract_non_reasoning_content

models = [
    dict(
        attr="service",
        type=VLLMCustomAPIChatStream,
        abbr='vllm-api-stream-chat',
        path="/mnt/share/weights/Qwen3-235B-A22B-W8A8",
        model="Qwen3",
        request_rate = 10.5,
        retry = 2,
        host_ip = "127.0.0.1",
        host_port = 6688,
        max_out_len = 1500,
        batch_size = 960, #880
        trust_remote_code=False,
        generation_kwargs = dict(
            temperature = 0,
            ignore_eos = True,
        ),
        pred_postprocessor=dict(type=extract_non_reasoning_content)
    )
]
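The `batch_size = 960` in this config lines up with several values in the D-node launch command. The arithmetic below is a sketch of that relationship, assuming in-flight requests are spread evenly across the data-parallel ranks (the D node sets `DP_ROUND_ROBIN=1`); all numbers are copied from this document, and the helper name is hypothetical.

```python
# Values copied from the D-node command and the ais_bench config.
MAX_RUNNING_REQUESTS = 960      # --max-running-requests
DP_SIZE = 16                    # --dp-size
CUDA_GRAPH_BS = [6, 8, 12, 15, 18, 20, 22, 24, 30, 36, 42, 48, 54, 56, 58, 60]
DISPATCH_TOKENS_PER_RANK = 60   # SGLANG_DEEPEP_NUM_MAX_DISPATCH_TOKENS_PER_RANK
BENCH_BATCH_SIZE = 960          # batch_size in the ais_bench config


def per_rank_batch(total_requests, dp_ranks):
    """Requests per DP rank, assuming an even split (hypothetical helper)."""
    if total_requests % dp_ranks != 0:
        raise ValueError("requests do not divide evenly across DP ranks")
    return total_requests // dp_ranks


per_rank = per_rank_batch(BENCH_BATCH_SIZE, DP_SIZE)
print("requests per DP rank:", per_rank)
print("within server limit:", BENCH_BATCH_SIZE <= MAX_RUNNING_REQUESTS)
print("covered by largest graph batch:", per_rank <= max(CUDA_GRAPH_BS))
print("covered by dispatch token cap:", per_rank <= DISPATCH_TOKENS_PER_RANK)
```

Under the even-split assumption, 960 concurrent requests over 16 ranks gives 60 per rank, the same number as the largest `--cuda-graph-bs` entry and the D-node dispatch token cap. If `batch_size` is raised, those three settings would likely need to move together.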

3.2 Run the test command

ais_bench --models vllm_api_stream_chat --datasets gsm8k_gen_0_shot_cot_str_perf --debug --summarizer default_perf --mode perf --num-prompts 4240
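For planning a run, a rough lower bound on the send phase can be derived from `--num-prompts` and `request_rate`. The sketch below assumes `request_rate` means requests issued per second, which should be checked against the ais_bench documentation; it ignores generation time, so the actual run will take longer.

```python
NUM_PROMPTS = 4240    # --num-prompts on the command line
REQUEST_RATE = 10.5   # request_rate in the model config (assumed requests/second)
BATCH_SIZE = 960      # batch_size in the model config (max concurrent requests)


def send_phase_seconds(num_prompts, request_rate):
    """Time needed just to issue all prompts at a fixed rate."""
    return num_prompts / request_rate


seconds = send_phase_seconds(NUM_PROMPTS, REQUEST_RATE)
print(f"send phase: about {seconds:.1f} s ({seconds / 60:.1f} min)")
print(f"prompts per concurrency slot: {NUM_PROMPTS / BATCH_SIZE:.2f}")
```

With these values the send phase alone is about 403.8 seconds, and each of the 960 concurrency slots handles a little over four prompts on average.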
