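Background: the operating system is already installed on the Ascend server, the NPU driver version is 23.0.0, the server has 8 NPU cards for on-premises deployment, and Docker is installed.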

Step 1: Prepare the container image

Image download link: image repository website

Pull the image:

```bash
docker pull swr.cn-central-221.ovaijisuan.com/wh-aicc-fae/mindie:910A-ascend_24.1.rc3-cann_8.0.t63-py_3.10-ubuntu_20.04-aarch64-mindie_1.0.T71.05
```

Step 2: Prepare the weights

Weight download link: QwQ-32B-W8A8

Before downloading, install ModelScope:

```bash
pip install modelscope
```

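Download the complete model repository to a specified directory from the command line. `./dir` is relative to the current directory; place it under the host path `/model` so the weights are visible inside the container created in step 3.

```bash
modelscope download --model yuqian1104/QwQ-32B-W8A8 --local_dir ./dir
```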
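Before mounting the weights, it can help to confirm that the download completed. The sketch below assumes the repository follows the usual Hugging Face layout, meaning a `config.json` plus one or more `*.safetensors` shards. The helper name `check_weights` is hypothetical.

```bash
# Hypothetical helper: sanity-check a downloaded weight directory.
# Assumes a Hugging Face-style layout: config.json plus *.safetensors shards.
check_weights() {
  local dir=${1:?usage: check_weights <weight_dir>}
  if [[ ! -f "$dir/config.json" ]]; then
    echo "missing $dir/config.json" >&2
    return 1
  fi
  local shards=("$dir"/*.safetensors)
  # Without nullglob an unmatched pattern stays literal, so test the first entry.
  if [[ ! -e "${shards[0]}" ]]; then
    echo "no *.safetensors files in $dir" >&2
    return 1
  fi
  echo "found ${#shards[@]} safetensors file(s) in $dir"
}
```

For example, run `check_weights ./dir` after the download finishes.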

Step 3: Create the container

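Start the container with 2 NPU cards mounted. `/dev/davinci0` and `/dev/davinci1` select the NPU cards to expose, and `/model` is the host directory that holds the weights. Do not write these notes as inline `#` comments after a trailing `\`. If you do, the backslash escapes the space instead of the newline, and the comment ends the command early.

```bash
docker run -it -d --net=host --shm-size=128g \
    --name QwQ-32B-W8A8 \
    --device=/dev/davinci_manager \
    --device=/dev/hisi_hdc \
    --device=/dev/devmm_svm \
    --device=/dev/davinci0 \
    --device=/dev/davinci1 \
    -v /etc/localtime:/etc/localtime:ro \
    -v /usr/local/Ascend/driver:/usr/local/Ascend/driver:ro \
    -v /usr/local/sbin:/usr/local/sbin:ro \
    -v /model:/model \
    swr.cn-central-221.ovaijisuan.com/wh-aicc-fae/mindie:910A-ascend_24.1.rc3-cann_8.0.t63-py_3.10-ubuntu_20.04-aarch64-mindie_1.0.T71.05 /bin/bash
```

Use the same image tag that was pulled in step 1. Then enter the container with `docker exec -it QwQ-32B-W8A8 /bin/bash`.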
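Inline comments cannot follow a `\` continuation. One way to keep per-card notes, and to change the card count in one place, is to build the arguments in a Bash array. The sketch below assumes the same devices and mounts as the command above and the image pulled in step 1. `npu_device_flags` and `start_container` are hypothetical helper names.

```bash
# Hypothetical helper: print one --device flag per NPU index.
npu_device_flags() {
  local id
  for id in "$@"; do
    printf -- '--device=/dev/davinci%s\n' "$id"
  done
}

# Hypothetical wrapper around the docker run command.
# Usage: start_container <npu_id>...
start_container() {
  local image=swr.cn-central-221.ovaijisuan.com/wh-aicc-fae/mindie:910A-ascend_24.1.rc3-cann_8.0.t63-py_3.10-ubuntu_20.04-aarch64-mindie_1.0.T71.05
  local args=(
    -it -d --net=host --shm-size=128g
    --name QwQ-32B-W8A8
    --device=/dev/davinci_manager
    --device=/dev/hisi_hdc
    --device=/dev/devmm_svm
  )
  local -a flags
  mapfile -t flags < <(npu_device_flags "$@")   # NPU cards to expose
  args+=("${flags[@]}")
  args+=(
    -v /etc/localtime:/etc/localtime:ro
    -v /usr/local/Ascend/driver:/usr/local/Ascend/driver:ro
    -v /usr/local/sbin:/usr/local/sbin:ro
    -v /model:/model                            # weight directory on the host
  )
  docker run "${args[@]}" "$image" /bin/bash
}
```

For example, `start_container 0 1` reproduces the 2-card container, and `start_container 0 1 2 3` would expose 4 cards instead.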

Step 4: Pure model inference

Dependency configuration

Upgrade transformers to 4.45.0 (`pip install transformers==4.45.0`), or upgrade tokenizers to 0.20.0 (`pip install tokenizers==0.20.0`).
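The requirement can be checked quickly inside the container. The sketch below assumes the versions above are minimums, so newer releases also qualify. `version_ge`, `py_pkg_version` and `check_deps` are hypothetical helpers, and version ordering relies on GNU `sort -V`.

```bash
# Hypothetical helper: succeed if version $1 >= version $2 (GNU sort -V ordering).
version_ge() {
  [[ "$(printf '%s\n' "$1" "$2" | sort -V | head -n1)" == "$2" ]]
}

# Print the installed version of a Python package, or nothing if it is missing.
py_pkg_version() {
  python3 -c "import importlib.metadata as m; print(m.version('$1'))" 2>/dev/null
}

# Pass if transformers >= 4.45.0 or tokenizers >= 0.20.0 is installed.
check_deps() {
  local tf tok
  tf=$(py_pkg_version transformers)
  tok=$(py_pkg_version tokenizers)
  if { [[ -n "$tf" ]] && version_ge "$tf" 4.45.0; } ||
     { [[ -n "$tok" ]] && version_ge "$tok" 0.20.0; }; then
    echo "ok: transformers=${tf:-none} tokenizers=${tok:-none}"
  else
    echo "upgrade needed: transformers=${tf:-none} tokenizers=${tok:-none}" >&2
    return 1
  fi
}
```

Run `check_deps` inside the container after installing. A non-zero exit status means neither package meets its minimum.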

Dialogue test

Go to the llm_model directory. `ATB_SPEED_HOME_PATH` defaults to `/usr/local/Ascend/llm_model`; adjust it to match your installation.

```bash
cd $ATB_SPEED_HOME_PATH
```

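Run the dialogue test. `--nproc_per_node` must not exceed the number of NPU cards visible in the container. The container from step 3 mounts only 2 cards (`davinci0`, `davinci1`), so either mount 4 cards there, or lower this value (and `chip_num` in the performance test below) to 2. Replace `{weight_path}` with the weight directory inside the container.

```bash
torchrun --nproc_per_node 4 \
    --master_port 20037 \
    -m examples.run_pa \
    --model_path {weight_path} \
    --trust_remote_code \
    --max_output_length 32
```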
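The container in step 3 mounts 2 cards, while the command above starts 4 processes. A small pre-flight check can catch this kind of mismatch before `torchrun` starts. The sketch below assumes NPUs appear as `/dev/davinci<N>` device nodes, as in the `docker run` command. `count_npus` and `run_chat_test` are hypothetical names, and the optional directory argument of `count_npus` exists only so the count can be tested.

```bash
# Count NPU device nodes (davinci0, davinci1, ...); davinci_manager does not match.
count_npus() {
  local dev_dir=${1:-/dev} n=0 f
  for f in "$dev_dir"/davinci[0-9]*; do
    [[ -e "$f" ]] && n=$((n + 1))
  done
  echo "$n"
}

# Hypothetical wrapper: run the dialogue test only if enough NPUs are visible.
# Usage: run_chat_test <weight_path> [nproc]
run_chat_test() {
  local weight_path=${1:?usage: run_chat_test <weight_path> [nproc]}
  local nproc=${2:-4} avail
  avail=$(count_npus)
  if (( nproc > avail )); then
    echo "nproc_per_node=$nproc but only $avail NPU(s) visible" >&2
    return 1
  fi
  # Subshell so the caller's working directory is unchanged.
  (
    cd "${ATB_SPEED_HOME_PATH:-/usr/local/Ascend/llm_model}" &&
      torchrun --nproc_per_node "$nproc" \
        --master_port 20037 \
        -m examples.run_pa \
        --model_path "$weight_path" \
        --trust_remote_code \
        --max_output_length 32
  )
}
```

For example, inside the 2-card container, `run_chat_test /model/<weight_dir> 2` runs the test, while the default of 4 processes stops with an error message.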

Performance test

Go to the ModelTest directory:

```bash
cd $ATB_SPEED_HOME_PATH/tests/modeltest/
```

Run the test script. The general form is shown below; arguments in parentheses are optional.

```bash
bash run.sh pa_[data_type] performance [case_pair] [batch_size] ([prefill_batch_size]) [model_name] ([is_chat_model]) (lora [lora_data_path]) [weight_dir] ([trust_remote_code]) [chip_num] ([parallel_params]) ([max_position_embedding/max_sequence_length])
```

For example, the 4-card parallel performance test with batch size 1, input length 256 and output length 256 is:

```bash
bash run.sh pa_bf16 performance [[256,256]] 1 qwen ${weight_path} 4
```
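To compare several batch sizes, the example command can be wrapped in a loop. The sketch below reuses the same positional arguments as the example: `pa_bf16`, case pair `[[256,256]]` and model name `qwen`. `perf_sweep` and the `DRY_RUN` switch are hypothetical. The chip count must not exceed the NPUs visible in the container.

```bash
# Hypothetical helper: run the 256/256 performance case for several batch sizes.
# Usage: perf_sweep <weight_path> <chip_num> <batch_size>...
# With DRY_RUN=1 the commands are printed instead of executed.
perf_sweep() {
  local weight_path=${1:?weight path required} chips=${2:?chip count required}
  shift 2
  local bs
  local -a cmd
  for bs in "$@"; do
    # Quoting keeps [[256,256]] literal instead of letting the shell glob it.
    cmd=(bash run.sh pa_bf16 performance '[[256,256]]' "$bs" qwen "$weight_path" "$chips")
    if [[ "${DRY_RUN:-0}" == 1 ]]; then
      echo "${cmd[*]}"
    else
      "${cmd[@]}" || return 1
    fi
  done
}
```

Run it from the ModelTest directory, for example `DRY_RUN=1 perf_sweep "$weight_path" 2 1 8 16` to preview the commands, then again without `DRY_RUN` to execute them.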

Step 5: Model serving inference

Model inference reference: https://gitee.com/ascend/ModelZoo-PyTorch/tree/master/MindIE/LLM/QwQ/QwQ-32B

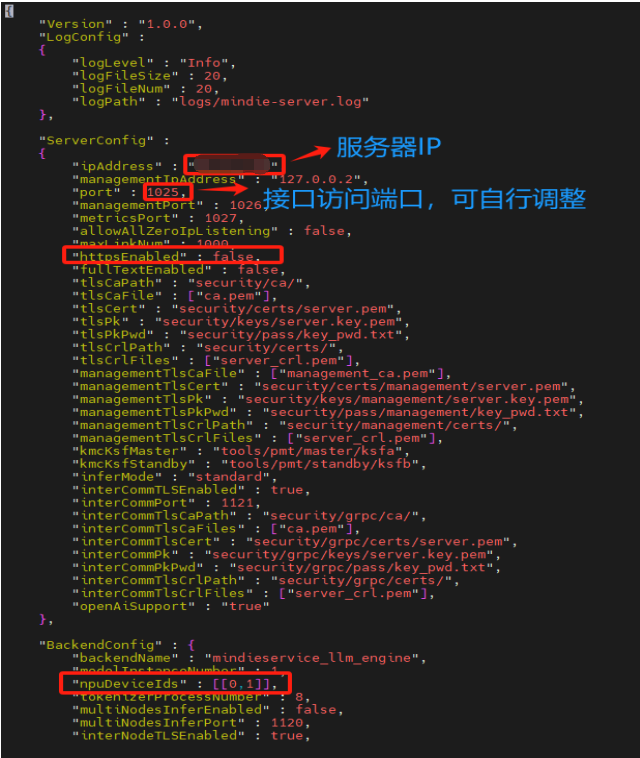

1) Edit the MindIE Service configuration file

In the /usr/local/Ascend/mindie/latest/mindie-service directory, edit the configuration file:

```bash
vim conf/config.json
```

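Modify the settings for your deployment. The service call below uses the `ipAddress` and `port` values set here. The parameters of the MindIE Server config.json are documented at:

https://www.hiascend.com/document/detail/zh/mindie/100/envdeployment/instg/mindie_instg_0026.html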
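The edits can also be scripted instead of made in vim. The sketch below uses the `python3` that ships in the image (py_3.10 per the tag) to rewrite the JSON. The key paths are assumptions based on MindIE 1.0-era configs and should be checked against your own `conf/config.json` before use:

- `ServerConfig.ipAddress`, `ServerConfig.port` and `ServerConfig.httpsEnabled`
- `BackendConfig.npuDeviceIds`
- `BackendConfig.ModelDeployConfig.ModelConfig[0].modelName`, `.modelWeightPath` and `.worldSize`

Turning HTTPS off also assumes you accept plain HTTP on a trusted network. `patch_mindie_config` is a hypothetical helper name.

```bash
# Hypothetical helper: patch a MindIE Server config.json for one model.
# ASSUMED key paths (verify against your config.json):
#   ServerConfig.{ipAddress,port,httpsEnabled}, BackendConfig.npuDeviceIds,
#   BackendConfig.ModelDeployConfig.ModelConfig[0].{modelName,modelWeightPath,worldSize}
# Usage: patch_mindie_config <config.json> <ip> <port> <model_name> <weight_dir> <npu_count>
patch_mindie_config() {
  local cfg=$1 ip=$2 port=$3 name=$4 weights=$5 ndev=$6
  python3 - "$cfg" "$ip" "$port" "$name" "$weights" "$ndev" <<'PY'
import json
import sys

cfg, ip, port, name, weights, ndev = sys.argv[1:7]
ndev = int(ndev)
with open(cfg) as f:
    conf = json.load(f)
# All lookups happen before the file is reopened for writing,
# so a KeyError leaves the original file untouched.
server = conf["ServerConfig"]
server["ipAddress"] = ip
server["port"] = int(port)
server["httpsEnabled"] = False  # plain HTTP, matching the http:// curl call
backend = conf["BackendConfig"]
backend["npuDeviceIds"] = [list(range(ndev))]  # logical IDs inside the container
model = backend["ModelDeployConfig"]["ModelConfig"][0]
model["modelName"] = name
model["modelWeightPath"] = weights
model["worldSize"] = ndev
with open(cfg, "w") as f:
    json.dump(conf, f, indent=4, ensure_ascii=False)
PY
}
```

For example: `patch_mindie_config conf/config.json <host_ip> 1025 QwQ-32B-W8A8 /model/<weight_dir> 2`. Back up the file first, because it is rewritten in place.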

2) Start the model service

In the /usr/local/Ascend/mindie/latest/mindie-service directory, start the service in the background:

```bash
nohup ./bin/mindieservice_daemon > output.log 2>&1 &
```

From the same directory, follow the startup progress with `tail -f output.log`.
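When scripting the startup, the log check can be automated by polling `output.log` until a readiness message appears. The default pattern `Daemon start success` is an assumption about MindIE's startup message, so compare it with your own log and pass a different pattern if needed. `wait_for_log` is a hypothetical helper name.

```bash
# Hypothetical helper: wait until a pattern appears in the service log.
# Usage: wait_for_log <log_file> [pattern] [timeout_seconds]
# The default pattern is an ASSUMED MindIE startup message; adjust if needed.
wait_for_log() {
  local log=${1:?log file required}
  local pattern=${2:-Daemon start success}
  local timeout=${3:-600} waited=0
  until grep -q -- "$pattern" "$log" 2>/dev/null; do
    if (( waited >= timeout )); then
      echo "timed out after ${timeout}s waiting for '$pattern' in $log" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  echo "service ready ('$pattern' found in $log)"
}
```

For example: `nohup ./bin/mindieservice_daemon > output.log 2>&1 & wait_for_log output.log`.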

3) Call the service

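Example request. Before sending it, adjust these fields:

- Set `model` to the `modelName` configured in config.json.
- Replace `{ip}` and `{port}` with the `ipAddress` and `port` values from the same file.
- The `stop` and `stop_token_ids` values are placeholders. Remove them or replace them with real stop strings and token IDs for QwQ, otherwise generation may stop early.

```bash
curl -H "Accept: application/json" -H "Content-Type: application/json" -X POST -d '{
    "model": "QwQ-32B-W8A8",
    "messages": [{
        "role": "user",
        "content": "Who are you?"
    }],
    "stream": false,
    "presence_penalty": 1.03,
    "frequency_penalty": 1.0,
    "repetition_penalty": 1.0,
    "temperature": 0.5,
    "top_p": 0.95,
    "top_k": 0,
    "seed": null,
    "stop": ["stop1", "stop2"],
    "stop_token_ids": [2, 13],
    "include_stop_str_in_output": false,
    "skip_special_tokens": true,
    "ignore_eos": false,
    "max_tokens": 500
}' http://{ip}:{port}/v1/chat/completions
```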
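For repeated testing, the request can be trimmed to the required fields and wrapped in a function. This sketch makes two assumptions: the service listens on plain HTTP (`httpsEnabled` disabled), and the omitted sampling parameters can keep their server-side defaults. `chat_url` and `chat` are hypothetical names.

```bash
# Hypothetical helper: build the chat completions URL from config.json's ipAddress and port.
chat_url() {
  printf 'http://%s:%s/v1/chat/completions\n' "${1:?ip required}" "${2:?port required}"
}

# Hypothetical helper: send a single-turn, non-streaming request with a minimal body.
# The prompt is inserted into the JSON without escaping, so keep it free of
# double quotes and backslashes.
# Usage: chat <ip> <port> <model_name> <prompt>
chat() {
  local ip=$1 port=$2 model=$3 prompt=$4
  curl -sS -H "Content-Type: application/json" -X POST \
    -d "{\"model\": \"$model\", \"messages\": [{\"role\": \"user\", \"content\": \"$prompt\"}], \"stream\": false, \"max_tokens\": 500}" \
    "$(chat_url "$ip" "$port")"
}
```

For example: `chat <ipAddress> <port> QwQ-32B-W8A8 "Who are you?"`.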

Inference API documentation:

https://www.hiascend.com/document/detail/zh/mindie/100/mindieservice/servicedev/mindie_service0076.html

网硕互联帮助中心 (Wangshuo Hulian Help Center)
